## Issue Addressed

Closes https://github.com/sigp/lighthouse/issues/2327

## Proposed Changes

This is an extension of some ideas I implemented while working on `tree-states`:

- Cache the indexed attestations from blocks in the `ConsensusContext`. Previously we were re-computing them 3-4 times over.

- Clean up `import_block` by splitting each part into `import_block_XXX`.

- Move some stuff off hot paths, specifically:

  - Relocate non-essential tasks that were running between receiving the payload verification status and priming the early attester cache. These tasks are moved after the cache priming:

    - Attestation observation

    - Validator monitor updates

    - Slasher updates

    - Updating the shuffling cache

  - Fork choice attestation observation now happens at the end of block verification in parallel with payload verification (this seems to save 5-10ms).

  - Payload verification now happens _before_ advancing the pre-state and writing it to disk! States were previously being written eagerly and adding ~20-30ms in front of verifying the execution payload. State catchup also sometimes takes ~500ms if we get a cache miss and need to rebuild the tree hash cache.

The remaining task that's taking substantial time (~20ms) is importing the block to fork choice. I _think_ this is because of pull-tips, and we should be able to optimise it out with a clever total active balance cache in the state (which would be computed in parallel with payload verification). I've decided to leave that for future work though. For now it can be observed via the new `beacon_block_processing_post_exec_pre_attestable_seconds` metric.
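The indexed-attestation caching mentioned above can be sketched roughly as follows. This is a minimal illustration, not the actual `ConsensusContext` code: the simplified `IndexedAttestation` shape, the choice of cache key, and the `get_indexed_attestation` helper are all assumptions made for the example.

```rust
use std::collections::HashMap;

/// Simplified stand-in for an indexed attestation (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
pub struct IndexedAttestation {
    pub attesting_indices: Vec<u64>,
}

/// Hypothetical per-block context that memoises indexed attestations.
#[derive(Default)]
pub struct ConsensusContext {
    /// Keyed by the attestation's position in the block (an assumption for this sketch).
    indexed_attestations: HashMap<usize, IndexedAttestation>,
    /// Counts how often the expensive conversion actually ran.
    pub computations: usize,
}

impl ConsensusContext {
    /// Returns the cached value for `key`, running `compute` only on a cache miss.
    pub fn get_indexed_attestation<F>(&mut self, key: usize, compute: F) -> &IndexedAttestation
    where
        F: FnOnce() -> IndexedAttestation,
    {
        if !self.indexed_attestations.contains_key(&key) {
            self.computations += 1;
            self.indexed_attestations.insert(key, compute());
        }
        &self.indexed_attestations[&key]
    }
}
```

With a context like this threaded through block verification, each later stage that needs the indexed form of an attestation would reuse the first result instead of recomputing it.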

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Issue Addressed

#3704

## Proposed Changes

Adds an `is_syncing_finalized: bool` parameter to the block verification functions. Sets the `payload_verification_status` to `Optimistic` if `is_syncing_finalized` is true. Uses the `SyncState` in `NetworkGlobals` in the `BeaconProcessor` to retrieve the syncing status.
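As a rough sketch of the rule described above (not the real verification code), the decision could look like this. The two-variant enum is a simplification, and the `verify_with_el` closure is a hypothetical stand-in for the normal payload verification path.

```rust
/// Simplified stand-in for the payload verification status (assumed variants).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum PayloadVerificationStatus {
    Verified,
    Optimistic,
}

/// While syncing the finalized chain, mark the payload as optimistic;
/// otherwise defer to the regular verification path.
pub fn payload_verification_status(
    is_syncing_finalized: bool,
    verify_with_el: impl FnOnce() -> PayloadVerificationStatus,
) -> PayloadVerificationStatus {
    if is_syncing_finalized {
        PayloadVerificationStatus::Optimistic
    } else {
        verify_with_el()
    }
}
```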

## Additional Info

I could implement `FinalizedSignatureVerifiedBlock` if you think it would be nicer.

## Issue Addressed

#3732

## Proposed Changes

Add a CLI flag to allow users to opt out of the restrictive permissions of the log files.

## Additional Info

This is not recommended for most users. The log files can contain sensitive information such as validator indices, public keys and API tokens (see #2438). However some users using a multi-user setup may find this helpful if they understand the risks involved.

## Issue Addressed

NA

## Proposed Changes

- Bump versions

- Pin the `nethermind` version since our method of getting the latest tags on `master` is giving us an old version (`1.14.1`).

- Increase timeout for execution engine startup.

## Additional Info

- [x] ~Awaiting further testing~

This PR adds some health endpoints for the beacon node and the validator client.

Specifically it adds the endpoint:

`/lighthouse/ui/health`

These are not entirely stable yet, but they provide a base for our UI to build on.

They may also have issues on various platforms and may need modification.

## Issue Addressed

Part of https://github.com/sigp/lighthouse/issues/3651.

## Proposed Changes

Add a flag for enabling the light client server, which should be checked before gossip/RPC traffic is processed (e.g. https://github.com/sigp/lighthouse/pull/3693, https://github.com/sigp/lighthouse/pull/3711). The flag is available at runtime from `beacon_chain.config.enable_light_client_server`.

Additionally, a new method `BeaconChain::with_mutable_state_for_block` is added which I envisage being used for computing light client updates. Unfortunately its performance will be quite poor on average because it will only run quickly with access to the tree hash cache. Each slot the tree hash cache is only available for a brief window of time between the head block being processed and the state advance at 9s in the slot. When the state advance happens the cache is moved and mutated to get ready for the next slot, which makes it no longer useful for merkle proofs related to the head block. Rather than spend more time trying to optimise this I think we should continue prototyping with this code, and I'll make sure `tree-states` is ready to ship before we enable the light client server in prod (cf. https://github.com/sigp/lighthouse/pull/3206).

## Additional Info

I also fixed a bug in the implementation of `BeaconState::compute_merkle_proof` whereby the tree hash cache was moved with `.take()` but never put back with `.restore()`.
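The shape of that bug can be illustrated with a small sketch. It uses `Option::take` on a plain `Vec<u64>` as a stand-in for the real tree hash cache, so the types and method names here are assumptions, not the `BeaconState` API.

```rust
/// Hypothetical state holding an optional cache (stand-in for the tree hash cache).
#[derive(Debug, Default)]
pub struct State {
    tree_hash_cache: Option<Vec<u64>>,
}

impl State {
    pub fn new(cache: Vec<u64>) -> Self {
        State { tree_hash_cache: Some(cache) }
    }

    pub fn has_cache(&self) -> bool {
        self.tree_hash_cache.is_some()
    }

    /// Buggy shape: the cache is moved out and never put back.
    pub fn proof_without_restore(&mut self) -> Option<u64> {
        let cache = self.tree_hash_cache.take()?;
        cache.first().copied()
    }

    /// Fixed shape: the cache is restored once the computation is done.
    pub fn proof_with_restore(&mut self) -> Option<u64> {
        let cache = self.tree_hash_cache.take()?;
        let result = cache.first().copied();
        self.tree_hash_cache = Some(cache);
        result
    }
}
```

In the buggy variant every later call sees an empty cache, which is the kind of silent slowdown (or failure) the fix avoids.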

## Issue Addressed

This PR partially addresses #3651

## Proposed Changes

This PR adds the following methods:

* A new method on the `TreeHash` trait, `hash_tree_leaves`, which returns all the Merkle leaves of the SSZ object.

* A new method on `BeaconState`, `compute_merkle_proof`, which generates a Merkle proof for a given depth and index, using `hash_tree_leaves` to obtain the leaves.
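To show how a proof can be built from a list of leaves, here is a dependency-free sketch. It is not the PR's implementation: the `hash_pair` function uses the standard library's `DefaultHasher` as a toy, non-cryptographic stand-in for SHA-256, nodes are `u64` rather than 32-byte roots, and the function names are made up for the example.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Toy stand-in for SHA-256. NOT cryptographic; it only keeps the sketch self-contained.
fn hash_pair(left: u64, right: u64) -> u64 {
    let mut hasher = DefaultHasher::new();
    (left, right).hash(&mut hasher);
    hasher.finish()
}

/// Returns the Merkle root and the sibling path for `leaves[index]`.
/// Assumes `leaves.len()` is a power of two and `index < leaves.len()`.
pub fn merkle_root_and_proof(leaves: &[u64], index: usize) -> (u64, Vec<u64>) {
    assert!(leaves.len().is_power_of_two() && index < leaves.len());
    let mut layer = leaves.to_vec();
    let mut idx = index;
    let mut proof = Vec::new();
    while layer.len() > 1 {
        // The sibling of node `idx` differs only in the lowest bit.
        proof.push(layer[idx ^ 1]);
        layer = layer.chunks(2).map(|pair| hash_pair(pair[0], pair[1])).collect();
        idx /= 2;
    }
    (layer[0], proof)
}

/// Recomputes the root from a leaf and its sibling path, bottom-up.
pub fn verify_merkle_proof(leaf: u64, proof: &[u64], index: usize, root: u64) -> bool {
    let mut node = leaf;
    let mut idx = index;
    for sibling in proof {
        node = if idx % 2 == 0 {
            hash_pair(node, *sibling)
        } else {
            hash_pair(*sibling, node)
        };
        idx /= 2;
    }
    node == root
}
```

The proof length equals the tree depth, which is why a depth and an index are enough to identify which proof is wanted.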

## Additional Info

Some rationale for going down this route: adding a new function to a commonly used trait is a pain, but it was necessary to make sure we have all Merkle leaves for every object. That is why I added only `hash_tree_leaves` to the trait and not `compute_merkle_proof` as well. Although adding both would make sense, it would mean code duplication and a harder review, and we only need the proof for one specific object in one specific use case, so in my humble opinion it is not worth the effort yet.

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Issue Addressed

New lints for Rust 1.65

## Proposed Changes

The notable change is the identification of parameters that are only used in recursion.

## Additional Info

na

## Issue Addressed

Updates discv5

Pending on

- [x] #3547

- [x] Alex upgrades his deps

## Proposed Changes

Updates discv5 and the `enr` crate. The only relevant change would be some clear indications of IPv4 usage in Lighthouse.

## Additional Info

Functionally, this should be equivalent to the previous version.

As draft pending a discv5 release

* add capella gossip boiler plate

* get everything compiling

Co-authored-by: realbigsean <sean@sigmaprime.io>

Co-authored-by: Mark Mackey <mark@sigmaprime.io>

* small cleanup

* small cleanup

* cargo fix + some test cleanup

* improve block production

* add fixme for potential panic

Co-authored-by: Mark Mackey <mark@sigmaprime.io>

## Issue Addressed

Closes https://github.com/sigp/lighthouse/issues/2371

## Proposed Changes

Backport some changes from `tree-states` that remove duplicated calculations of the `proposer_index`.

With this change the proposer index should be calculated only once for each block, and then plumbed through to every place it is required.

## Additional Info

In future I hope to add more data to the consensus context that is cached on a per-epoch basis, like the effective balances of validators and the base rewards.

There are some other changes to remove indexing in tests that were also useful for `tree-states` (the `tree-states` types don't implement `Index`).

## Issue Addressed

Implements new optimistic sync test format from https://github.com/ethereum/consensus-specs/pull/2982.

## Proposed Changes

- Add parsing and runner support for the new test format.

- Extend the mock EL with a set of canned responses keyed by block hash. Although this doubles up on some of the existing functionality, I think it's really nice to use compared to the `preloaded_responses` or static responses. I think we could write new opt sync tests using these primitives much more easily than the previous ones. Forks are natively supported, and different responses to `forkchoiceUpdated` and `newPayload` are also straightforward.
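A minimal sketch of the "canned responses keyed by block hash" idea is shown below. The type and method names are hypothetical, and defaulting to `Syncing` for unknown hashes is an assumption of this example rather than documented behaviour of the mock EL.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum PayloadStatus {
    Valid,
    Invalid,
    Syncing,
}

pub type BlockHash = [u8; 32];

/// Hypothetical mock execution layer with separate canned responses per method.
#[derive(Default)]
pub struct MockExecutionLayer {
    new_payload_responses: HashMap<BlockHash, PayloadStatus>,
    forkchoice_updated_responses: HashMap<BlockHash, PayloadStatus>,
}

impl MockExecutionLayer {
    pub fn set_new_payload_response(&mut self, hash: BlockHash, status: PayloadStatus) {
        self.new_payload_responses.insert(hash, status);
    }

    pub fn set_forkchoice_updated_response(&mut self, hash: BlockHash, status: PayloadStatus) {
        self.forkchoice_updated_responses.insert(hash, status);
    }

    /// Unknown hashes fall back to `Syncing` (an assumption of this sketch).
    pub fn new_payload(&self, hash: &BlockHash) -> PayloadStatus {
        self.new_payload_responses
            .get(hash)
            .copied()
            .unwrap_or(PayloadStatus::Syncing)
    }

    pub fn forkchoice_updated(&self, head_hash: &BlockHash) -> PayloadStatus {
        self.forkchoice_updated_responses
            .get(head_hash)
            .copied()
            .unwrap_or(PayloadStatus::Syncing)
    }
}
```

Because responses are looked up per block hash, two competing forks can simply register different statuses for their own blocks.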

## Additional Info

Blocked on merge of the spec PR and release of new test vectors.

## Issue Addressed


#2629

## Proposed Changes


1. CI downloads the BLS test cases from https://github.com/ethereum/bls12-381-tests/

2. All the BLS test cases (except the eth ones) use the cases in the archive from step 1.

3. The BLS test cases from https://github.com/ethereum/consensus-spec-tests stay in place but are no longer used. In future these test cases are expected to be removed, as suggested in https://github.com/ethereum/consensus-spec-tests/issues/25, so leaving them unused does no harm and stays compatible with that change.

## Additional Info


Question:

I am not sure if I should implement tests about `deserialization_G1`, `deserialization_G2` and `hash_to_G2` for the issue.

## Issue Addressed

Adding CLI tests for logging flags: log-color and disable-log-timestamp


#3588

## Proposed Changes

Add CLI tests for logging flags as described in #3588

Added `logger_config` to `client::Config` as suggested. Implemented `Default` for `LoggerConfig` based on what was being done elsewhere in the repo. Created 2 tests for each flag addressed.


## Issue Addressed

N/A

## Proposed Changes

With https://github.com/sigp/lighthouse/pull/3214 we made it such that you can either have 1 auth endpoint or multiple non auth endpoints. Now that we are post merge on all networks (testnets and mainnet), we cannot progress a chain without a dedicated auth execution layer connection so there is no point in having a non-auth eth1-endpoint for syncing deposit cache.

This code removes all fallback related code in the eth1 service. We still keep the single non-auth endpoint since it's useful for testing.

## Additional Info

This removes all eth1 fallback related metrics that were relevant for the monitoring service, so we might need to change the api upstream.

## Issue Addressed

Resolves #3573

## Proposed Changes

Fix the bytecode for the deposit contract deployment transaction and the value for the deposit transaction in the execution engine integration tests. Also verify that all published transactions make it to the execution payload and have a valid status.

## Issue Addressed

NA

## Proposed Changes

This PR removes duplicated block root computation.

Computing the `SignedBeaconBlock::canonical_root` has become more expensive since the merge as we need to compute the merkle root of each transaction inside an `ExecutionPayload`.

Computing the root for [a mainnet block](https://beaconcha.in/slot/4704236) is taking ~10ms on my i7-8700K CPU @ 3.70GHz (no sha extensions). Given that our median seen-to-imported time for blocks is presently 300-400ms, removing a few duplicated block roots (~30ms) could represent an easy 10% improvement. When we consider that the seen-to-imported times include operations *after* the block has been placed in the early attester cache, we could expect the 30ms to be more significant WRT our seen-to-attestable times.

## Additional Info

NA

## Issue Addressed

NA

## Proposed Changes

I've noticed that our block hashing times increase significantly after the merge. I did some flamegraph-ing and noticed that we're allocating a `Vec` for each byte of each execution payload transaction. This seems like unnecessary work and a bit of a fragmentation risk.

This PR switches to `SmallVec<[u8; 32]>` for the packed encoding of `TreeHash`. I believe this is a nice simple optimisation with no downside.

### Benchmarking

These numbers were computed using #3580 on my desktop (i7 hex-core). You can see a bit of noise in the numbers, that's probably just my computer doing other things. Generally I found this change takes the time from 10-11ms to 8-9ms. I can also see all the allocations disappear from flamegraph.

This is the block being benchmarked: https://beaconcha.in/slot/4704236

#### Before

```

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 980: 10.553003ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 981: 10.563737ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 982: 10.646352ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 983: 10.628532ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 984: 10.552112ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 985: 10.587778ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 986: 10.640526ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 987: 10.587243ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 988: 10.554748ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 989: 10.551111ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 990: 11.559031ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 991: 11.944827ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 992: 10.554308ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 993: 11.043397ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 994: 11.043315ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 995: 11.207711ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 996: 11.056246ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 997: 11.049706ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 998: 11.432449ms

[2022-09-15T21:44:19Z INFO lcli::block_root] Run 999: 11.149617ms

```

#### After

```

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 980: 14.011653ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 981: 8.925314ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 982: 8.849563ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 983: 8.893689ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 984: 8.902964ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 985: 8.942067ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 986: 8.907088ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 987: 9.346101ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 988: 8.96142ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 989: 9.366437ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 990: 9.809334ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 991: 9.541561ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 992: 11.143518ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 993: 10.821181ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 994: 9.855973ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 995: 10.941006ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 996: 9.596155ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 997: 9.121739ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 998: 9.090019ms

[2022-09-15T21:41:49Z INFO lcli::block_root] Run 999: 9.071885ms

```


## Issue Addressed

#3562

## Proposed Changes

Change the fork endpoint from `localhost` to `127.0.0.1` to match the ganache default listening host.

This way it doesn't try (and fail) to connect to `::1` on IPV6 machines.

## Additional Info

First PR

Add flag 'log-color' which preserves colors of log when stdout is redirected to a file.

This is my first Lighthouse PR. Please let me know if I'm not following the contribution guidelines; I welcome meta-feedback (feedback on git commit messages, git branch naming, and the contents of this PR description).

## Issue Addressed

Solves https://github.com/sigp/lighthouse/issues/3527

## Proposed Changes

Adding a flag which enables log color preserving when stdout is redirected to a file.

### Usage

Below I demonstrate the current behaviour (without the new flag) and the new behaviour (with the new flag).

In the screenshots below, I have two panes: one on top running `lighthouse`, which redirects to the file `~/Desktop/test.log`, and one on the bottom running `tail -f ~/Desktop/test.log`.

#### Current behaviour

```sh

lighthouse --network prater vc |& tee -a ~/Desktop/test.log

```

**Result is no colors**

<img width="1624" alt="current" src="https://user-images.githubusercontent.com/864410/188258226-bfcf8271-4c9e-474c-848e-ac92a60df25c.png">

#### New behaviour

```sh

lighthouse --network prater vc --log-color |& tee -a ~/Desktop/test.log

```

**Result is colors** 🔴🟢🔵🟡

<img width="1624" alt="new" src="https://user-images.githubusercontent.com/864410/188258223-7d9ecf09-92c8-4cba-8f24-bd4d88fc0353.png">

## Additional Info

I chose the American spelling "color" instead of the British "colour" since that is aligned with `slog`'s API (the `force_color()` method). Let me know if you prefer the spelling "colour" instead. I also chose to make it a flag that takes no argument, just like the `logfile-compress` flag, rather than having to write `--log-color true`.

## Issue Addressed

Resolves #3448

## Proposed Changes

Removes a known failure that wasn't actually a known failure. The tests declare this block invalid and we refuse to import it due to `ExecutionPayloadError(UnverifiedNonOptimisticCandidate)`.

This is correct since there is only one "eth1" block included in this test and two are required to trigger the merge (pre- and post-TTD blocks). It is slot 1 (tick = 12s) when this block is imported so the import must be prevented by `SAFE_SLOTS_TO_IMPORT_OPTIMISTICALLY`.

I'm not sure where I got the idea in #3448 that this test needed retrospective checking, that seems like a false assumption in hindsight.

## Additional Info

- Blocked on #3464

## Issue Addressed

Recent changes to the Nethermind codebase removed the `rocksdb` git submodule in favour of a `nuget` package.

This appears to have broken our ability to build the latest release of Nethermind inside our integration tests.

## Proposed Changes

~Temporarily pin the version used for the Nethermind integration tests to `master`. This ensures we use the packaged version of `rocksdb`. This is only necessary until a new release of Nethermind is available.~

Use `git submodule update --init --recursive` to ensure the required submodules are pulled before building.

Co-authored-by: Diva M <divma@protonmail.com>

## Issue Addressed

N/A

## Proposed Changes

Fix clippy lints for latest rust version 1.63. I have allowed the [derive_partial_eq_without_eq](https://rust-lang.github.io/rust-clippy/master/index.html#derive_partial_eq_without_eq) lint as satisfying this lint would result in more code that we might not want and I feel it's not required.

Happy to fix this lint across lighthouse if required though.

## Proposed Changes

Update the invalid head tests so that they work with the current default fork choice configuration.

Thanks @realbigsean for fixing the persistence test and the EF tests.

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Issue Addressed

https://github.com/sigp/lighthouse/issues/3091

Extends https://github.com/sigp/lighthouse/pull/3062, adding pre-bellatrix block support on blinded endpoints and allowing the normal proposal flow (local payload construction) on blinded endpoints. This resulted in better fallback logic because the VC will not have to switch endpoints on failure in the BN <> Builder API; the BN can just fall back immediately, without repeating block processing that it shouldn't need to. We can also keep VC fallback from the VC <> BN API's blinded endpoint to the full endpoint.

## Proposed Changes

- Pre-bellatrix blocks on blinded endpoints

- Add a new `PayloadCache` to the execution layer

- Better fallback-from-builder logic

## Todos

- [x] Remove VC transition logic

- [x] Add logic to only enable builder flow after Merge transition finalization

- [x] Tests

- [x] Fix metrics

- [x] Rustdocs

Co-authored-by: Mac L <mjladson@pm.me>

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Issue Addressed

NA

## Proposed Changes

There are scenarios where the only viable head will have an invalid execution payload. In this scenario the `get_head` function on `proto_array` will return an error. We must recover from this scenario by importing blocks from the network.

This PR stops `BeaconChain::recompute_head` from returning an error so that we can't accidentally start down-scoring peers or aborting block import just because the current head has an invalid payload.

## Reviewer Notes

The following changes are included:

1. Allow `fork_choice.get_head` to fail gracefully in `BeaconChain::process_block` when trying to update the `early_attester_cache`; simply don't add the block to the cache rather than aborting the entire process.

1. Don't return an error from `BeaconChain::recompute_head_at_current_slot` and `BeaconChain::recompute_head` to defensively prevent calling functions from aborting any process just because the fork choice function failed to run.

    - This should have practically no effect, since most callers were still continuing if recomputing the head failed.

    - The outlier is that the API will return 200 rather than a 500 when fork choice fails.

1. Add the `ProtoArrayForkChoice::set_all_blocks_to_optimistic` function to recover from the scenario where we've rebooted and the persisted fork choice has an invalid head.
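The "don't return an error" behaviour from the notes above can be sketched as follows. This is a simplified illustration with hypothetical types: the `fork_choice_result` argument stands in for calling `get_head` on fork choice, and the `error_log` vector stands in for real logging.

```rust
#[derive(Debug)]
pub enum ForkChoiceError {
    InvalidHeadPayload,
}

/// Hypothetical, heavily simplified chain holding only a cached head.
pub struct Chain {
    pub cached_head_root: u64,
    pub error_log: Vec<String>,
}

impl Chain {
    /// Infallible by design: on failure the previous head is kept and the error is
    /// recorded, so callers cannot abort block import or penalise peers because of it.
    pub fn recompute_head(&mut self, fork_choice_result: Result<u64, ForkChoiceError>) {
        match fork_choice_result {
            Ok(new_head) => self.cached_head_root = new_head,
            Err(e) => self.error_log.push(format!("fork choice failed: {:?}", e)),
        }
    }
}
```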

## Issue Addressed

Closes https://github.com/sigp/lighthouse/issues/3241

Closes https://github.com/sigp/lighthouse/issues/3242

## Proposed Changes

* [x] Implement logic to remove equivocating validators from fork choice per https://github.com/ethereum/consensus-specs/pull/2845

* [x] Update tests to v1.2.0-rc.1. The new test which exercises `equivocating_indices` is passing.

* [x] Pull in some SSZ abstractions from the `tree-states` branch that make it easier to implement Vec-compatible encoding for types like `BTreeSet` and `BTreeMap`.

* [x] Implement schema upgrades and downgrades for the database (new schema version is V11).

* [x] Apply attester slashings from blocks to fork choice

## Additional Info

* This PR doesn't need the `BTreeMap` impl, but `tree-states` does, and I don't think there's any harm in keeping it. But I could also be convinced to drop it.

Blocked on #3322.

## Issue Addressed

The antithesis Docker builds started failing once we made our MSRV later than 1.58. It seems like it was because there is a new "LLVM pass manager" used by Rust by default in more recent versions. Adding a new flag disables usage of the new pass manager and allows builds to pass.

This adds a single flag to the antithesis `Dockerfile.libvoidstar`: `RUSTFLAGS="-Znew-llvm-pass-manager=no"`. But this flag requires us to use `nightly` so it also adds that, pinning to an arbitrary recent date.

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Issue Addressed

#3369

## Proposed Changes

The goal is to make it possible to build Lighthouse without network access, so builds can be reproducible.

This parallels the existing functionality in `common/deposit_contract/build.rs`, which allows specifying a filename through the environment to avoid downloading it. In this case, by specifying the version and making it available on the filesystem, the existing logic will avoid a network download.

## Issue Addressed

Unblock CI for this failure: https://github.com/sigp/lighthouse/runs/7529551988

The root cause is a disagreement between the test and Nethermind over whether the appropriate status for a payload with an unknown parent is SYNCING or ACCEPTED. According to the spec, SYNCING is correct so we should update the test to expect this correct behaviour. However Geth still returns `ACCEPTED`, so for now we allow either.
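The relaxed expectation can be expressed as a small predicate. This is only a sketch of the idea; the enum and function names are hypothetical and do not mirror the integration test's actual code.

```rust
/// Simplified payload status values (assumed subset for this sketch).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum PayloadStatus {
    Valid,
    Invalid,
    Syncing,
    Accepted,
}

/// For a payload with an unknown parent, `Syncing` is the spec-correct answer,
/// but `Accepted` is tolerated for now because some engines still return it.
pub fn is_expected_unknown_parent_status(status: PayloadStatus) -> bool {
    matches!(status, PayloadStatus::Syncing | PayloadStatus::Accepted)
}
```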

## Issue Addressed

Add a flag that optionally enables unrealized vote tracking. We would like to test it out on testnets and benchmark differences in methods of vote tracking. This PR includes a DB schema upgrade to enable the new vote tracking style.

Co-authored-by: realbigsean <sean@sigmaprime.io>

Co-authored-by: Paul Hauner <paul@paulhauner.com>

Co-authored-by: sean <seananderson33@gmail.com>

Co-authored-by: Mac L <mjladson@pm.me>

## Issue Addressed

Closes https://github.com/sigp/lighthouse/issues/3189.

## Proposed Changes

- Always supply the justified block hash as the `safe_block_hash` when calling `forkchoiceUpdated` on the execution engine.

- Refactor the `get_payload` routine to use the new `ForkchoiceUpdateParameters` struct rather than just the `finalized_block_hash`. I think this is a nice simplification and that the old way of computing the `finalized_block_hash` was unnecessary, but if anyone sees reason to keep that approach LMK.

## Issue Addressed

The lcli and antithesis docker builds are failing in unstable so bumping all the versions here

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Issue Addressed

#3302

## Proposed Changes

Move the `reqwest::Client` from being initialized per-validator, to being initialized per distinct Web3Signer.

This is done by placing the `Client` into a `HashMap` keyed by the definition of the Web3Signer as specified by the `ValidatorDefinition`. This will allow multiple Web3Signers to be used with a single VC and also maintains backwards compatibility.
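The client-sharing approach can be sketched like this. `HttpClient` is a hypothetical stand-in for `reqwest::Client`, and the fields of `Web3SignerDefinition` are assumptions chosen for illustration, not the real `ValidatorDefinition` layout.

```rust
use std::collections::HashMap;
use std::sync::Arc;

/// Stand-in for `reqwest::Client` so the sketch has no external dependencies.
#[derive(Debug)]
pub struct HttpClient {
    pub url: String,
}

/// Hypothetical subset of the settings that identify a distinct Web3Signer.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub struct Web3SignerDefinition {
    pub url: String,
    pub root_certificate_path: Option<String>,
    pub request_timeout_ms: Option<u64>,
}

#[derive(Default)]
pub struct ClientCache {
    clients: HashMap<Web3SignerDefinition, Arc<HttpClient>>,
}

impl ClientCache {
    /// Returns the shared client for this signer, building it on first use.
    pub fn get_or_build(&mut self, def: &Web3SignerDefinition) -> Arc<HttpClient> {
        let client = self
            .clients
            .entry(def.clone())
            .or_insert_with(|| Arc::new(HttpClient { url: def.url.clone() }));
        Arc::clone(client)
    }

    pub fn distinct_clients(&self) -> usize {
        self.clients.len()
    }
}
```

With this shape, 200 validators pointing at the same signer hold 200 cheap `Arc` handles to one client instead of 200 separate clients.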

## Additional Info

This was done to reduce the memory used by the VC when connecting to a Web3Signer.

I set up a local testnet using [a custom script](https://github.com/macladson/lighthouse/tree/web3signer-local-test/scripts/local_testnet_web3signer) and ran a VC with 200 validator keys:

VC with Web3Signer:

- `unstable`: ~200MB

- With fix: ~50MB

VC with Local Signer:

- `unstable`: ~35MB

- With fix: ~35MB

> I'm seeing some fragmentation with the VC using the Web3Signer, but not when using a local signer (this is most likely due to making lots of http requests and dealing with lots of JSON objects). I tested the above using `MALLOC_ARENA_MAX=1` to try to reduce the fragmentation. Without it, the values are around +50MB for both `unstable` and the fix.

## Issue Addressed

Resolves #3316

## Proposed Changes

This PR fixes an issue where lighthouse created a transition block with `block.execution_payload().timestamp == terminal_block.timestamp` if the terminal block was created at the slot boundary.

## Issue Addressed

N/A

## Proposed Changes

Make simulator merge compatible. Adds a `--post_merge` flag to the eth1 simulator that enables a ttd and simulates the merge transition. Uses the `MockServer` in the execution layer test utils to simulate a dummy execution node.

Adds the merge transition simulation to CI.

## Issue Addressed

Resolves #3159

## Proposed Changes

Sends transactions to the EE before requesting a payload in the `execution_integration_tests`. Made some changes to the integration tests in order to be able to sign and publish transactions to the EE:

1. `genesis.json` for both geth and nethermind was modified to include pre-funded accounts that we know private keys for

2. Using the unauthenticated port again in order to make `eth_sendTransaction` and calls from the `personal` namespace to import keys

Also added a `fcu` call with `PayloadAttributes` before calling `getPayload` in order to give EEs sufficient time to pack transactions into the payload.

## Issue Addressed

Web3signer validators can't produce post-Bellatrix blocks.

## Proposed Changes

Add support for Bellatrix to web3signer validators.

## Additional Info

I am running validators with this code on Ropsten, but it may be a while for them to get a proposal.

## Issue Addressed

N/A

## Proposed Changes

Since Rust 1.62, we can use `#[derive(Default)]` on enums. ✨https://blog.rust-lang.org/2022/06/30/Rust-1.62.0.html#default-enum-variants

There are no changes to functionality in this PR, just replaced the `Default` trait implementation with `#[derive(Default)]`.
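For reference, the before/after shape of this kind of change looks like the following. The enum names are hypothetical and not taken from the Lighthouse codebase; the `#[default]` attribute requires Rust 1.62 or later.

```rust
/// Before: a hand-written `Default` implementation.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ManualMode {
    Idle,
    Active,
}

impl Default for ManualMode {
    fn default() -> Self {
        ManualMode::Idle
    }
}

/// After: the default variant is marked with `#[default]` and the trait is derived.
#[derive(Debug, Default, Clone, Copy, PartialEq, Eq)]
pub enum DerivedMode {
    #[default]
    Idle,
    Active,
}
```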

## Issue Addressed

* #3173

## Proposed Changes

Moved all `fee_recipient_file` related logic inside the `ValidatorStore` as it makes more sense to have this all together there. I tested this with the validators I have on `mainnet-shadow-fork-5` and everything appeared to work well. The only technicality is that I can't get the method to return `401` when the authorization header is not specified (it returns `400` instead). Fixing this is probably quite difficult given that none of `warp`'s rejections have code `401`. I don't really think this matters too much though, as long as it fails.

## Issue Addressed

Currently the execution-engine-integration test uses latest master for nethermind and geth, and right now the test fails using the latest unreleased commits.

## Proposed Changes

Fix the nethermind and geth revisions the test uses to the latest tag in each repo. This way we are not continuously testing unreleased code, which might even get reverted, and failures are limited to those caused by actual releases of each client.

Also improve error handling of the commands used to manage the git repos.

## Additional Info

na

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Overview

This rather extensive PR achieves two primary goals:

1. Uses the finalized/justified checkpoints of fork choice (FC), rather than that of the head state.

2. Refactors fork choice, block production and block processing to `async` functions.

Additionally, it achieves:

- Concurrent forkchoice updates to the EL and cache pruning after a new head is selected.

- Concurrent "block packing" (attestations, etc) and execution payload retrieval during block production.

- Concurrent per-block-processing and execution payload verification during block processing.

- The `Arc`-ification of `SignedBeaconBlock` during block processing (it's never mutated, so why not?):

  - I had to do this to deal with sending blocks into spawned tasks.

  - Previously we were cloning the beacon block at least 2 times during each block processing, these clones are either removed or turned into cheaper `Arc` clones.

  - We were also `Box`-ing and un-`Box`-ing beacon blocks as they moved throughout the networking crate. This is not a big deal, but it's nice to avoid shifting things between the stack and heap.

  - Avoids cloning *all the blocks* in *every chain segment* during sync.

  - It also has the potential to clean up our code where we need to pass an *owned* block around so we can send it back in the case of an error (I didn't do much of this, my PR is already big enough 😅)

- The `BeaconChain::HeadSafetyStatus` struct was removed. It was an old relic from prior merge specs.

For motivation for this change, see https://github.com/sigp/lighthouse/pull/3244#issuecomment-1160963273

## Changes to `canonical_head` and `fork_choice`

Previously, the `BeaconChain` had two separate fields:

```
canonical_head: RwLock<Snapshot>,
fork_choice: RwLock<BeaconForkChoice>
```

Now, we have grouped these values under a single struct:

```
canonical_head: CanonicalHead {
    cached_head: RwLock<Arc<Snapshot>>,
    fork_choice: RwLock<BeaconForkChoice>
}
```

Apart from ergonomics, the only *actual* change here is wrapping the canonical head snapshot in an `Arc`. This means that we no longer need to hold the `cached_head` (`canonical_head`, in old terms) lock when we want to pull some values from it. This was done to avoid deadlock risks by preventing functions from acquiring (and holding) the `cached_head` and `fork_choice` locks simultaneously.
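The benefit of the `Arc` wrapper can be sketched with the standard library alone. This is an illustrative simplification: it uses `std::sync::RwLock` rather than whatever lock type the codebase uses, the `Snapshot` fields are made up, and the method names are assumptions.

```rust
use std::sync::{Arc, RwLock};

/// Simplified stand-in for the head snapshot (hypothetical fields).
#[derive(Debug)]
pub struct Snapshot {
    pub head_slot: u64,
    pub head_root: [u8; 32],
}

pub struct CanonicalHead {
    cached_head: RwLock<Arc<Snapshot>>,
}

impl CanonicalHead {
    pub fn new(snapshot: Snapshot) -> Self {
        CanonicalHead { cached_head: RwLock::new(Arc::new(snapshot)) }
    }

    /// Clones the `Arc` and releases the read lock immediately, so callers can
    /// inspect the snapshot for as long as they like without holding any lock.
    pub fn cached_head(&self) -> Arc<Snapshot> {
        let guard = self.cached_head.read().unwrap();
        Arc::clone(&guard)
    }

    /// Swaps in a new snapshot; readers holding the old `Arc` are unaffected.
    pub fn set_head(&self, snapshot: Snapshot) {
        *self.cached_head.write().unwrap() = Arc::new(snapshot);
    }
}
```

Because a reader only holds the lock for the duration of an `Arc` clone, it is much harder to end up holding the `cached_head` lock while also trying to take the `fork_choice` lock.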

## Breaking Changes

### The `state` (root) field in the `finalized_checkpoint` SSE event

Consider the scenario where epoch `n` is just finalized, but `start_slot(n)` is skipped. There are two state roots we might use in the `finalized_checkpoint` SSE event:

1. The state root of the finalized block, which is `get_block(finalized_checkpoint.root).state_root`.

2. The state root at slot `start_slot(n)`, which would be the state from (1), but "skipped forward" through any skip slots.

Previously, Lighthouse would choose (2). However, we can see that when [Teku generates that event](de2b2801c8/data/beaconrestapi/src/main/java/tech/pegasys/teku/beaconrestapi/handlers/v1/events/EventSubscriptionManager.java (L171-L182)) it uses [`getStateRootFromBlockRoot`](de2b2801c8/data/provider/src/main/java/tech/pegasys/teku/api/ChainDataProvider.java (L336-L341)) which uses (1).

I have switched Lighthouse from (2) to (1). I think it's a somewhat arbitrary choice between the two, where (1) is easier to compute and is consistent with Teku.

## Notes for Reviewers

I've renamed `BeaconChain::fork_choice` to `BeaconChain::recompute_head`. Doing this helped ensure I broke all previous uses of fork choice and I also find it more descriptive. It describes an action and can't be confused with trying to get a reference to the `ForkChoice` struct.

I've changed the ordering of SSE events when a block is received. It used to be `[block, finalized, head]` and now it's `[block, head, finalized]`. It was easier this way and I don't think we were making any promises about SSE event ordering so it's not "breaking".

I've made it so fork choice will run when it's first constructed. I did this because I wanted to have a cached version of the last call to `get_head`. Ensuring `get_head` has been run *at least once* means that the cached values don't need to be wrapped in an `Option`. This was fairly simple, it just involved passing a `slot` to the constructor so it knows *when* it's being run. When loading a fork choice from the store and a slot clock isn't handy I've just used the `slot` that was saved in the `fork_choice_store`. That seems like it would be a faithful representation of the slot when we saved it.

I added the `genesis_time: u64` to the `BeaconChain`. It's small, constant and nice to have around.

Since we're using FC for the fin/just checkpoints, we no longer get the `0x00..00` roots at genesis. You can see I had to remove a work-around in `ef-tests` here: b56be3bc2. I can't find any reason why this would be an issue, if anything I think it'll be better since the genesis-alias has caught us out a few times (0x00..00 isn't actually a real root). Edit: I did find a case where the `network` expected the 0x00..00 alias and patched it here: 3f26ac3e2.

You'll notice a lot of changes in tests. Generally, tests should be functionally equivalent. Here are the things creating the most diff-noise in tests:

- Changing tests to be `tokio::async` tests.

- Adding `.await` to fork choice, block processing and block production functions.

- Refactor of the `canonical_head` "API" provided by the `BeaconChain`. E.g., `chain.canonical_head.cached_head()` instead of `chain.canonical_head.read()`.

- Wrapping `SignedBeaconBlock` in an `Arc`.

- In the `beacon_chain/tests/block_verification`, we can't use the `lazy_static` `CHAIN_SEGMENT` variable anymore since it's generated with an async function. We just generate it in each test, not so efficient but hopefully insignificant.

I had to disable `rayon` concurrent tests in the `fork_choice` tests. This is because the use of `rayon` and `block_on` was causing a panic.

Co-authored-by: Mac L <mjladson@pm.me>

## Issue Addressed

This PR is a subset of the changes in #3134. Unstable will still not function correctly with the new builder spec once this is merged, so #3134 should be used on testnets.

## Proposed Changes

- Removes redundancy in "builders" (servers implementing the builder spec)

- Renames `payload-builder` flag to `builder`

- Moves from old builder RPC API to new HTTP API, but does not implement the validator registration API (implemented in https://github.com/sigp/lighthouse/pull/3194)

Co-authored-by: sean <seananderson33@gmail.com>

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Issue Addressed

Lays the groundwork for builder API changes by implementing the beacon-API's new `register_validator` endpoint

## Proposed Changes

- Add a routine in the VC that runs on startup (re-try until success), once per epoch or whenever `suggested_fee_recipient` is updated, signing `ValidatorRegistrationData` and sending it to the BN.

- TODO: `gas_limit` config options https://github.com/ethereum/builder-specs/issues/17

- BN only sends VC registration data to builders on demand, but VC registration data *does update* the BN's prepare proposer cache and send an updated fcU to a local EE. This is necessary for fee recipient consistency between the blinded and full block flow in the event of fallback. Having the BN only send registration data to builders on demand gives feedback directly to the VC about relay status. Also, since the BN has no ability to sign these messages anyways (so couldn't refresh them if it wanted), and validator registration is independent of the BN head, I think this approach makes sense.

- Adds upcoming consensus spec changes for this PR https://github.com/ethereum/consensus-specs/pull/2884

- I initially applied the bit mask based on a configured application domain.. but I ended up just hard coding it here instead because that's how it's spec'd in the builder repo.

- Should application mask appear in the api?

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Issue Addressed

Resolves #3069

## Proposed Changes

Unify the `eth1-endpoints` and `execution-endpoints` flags in a backwards compatible way as described in https://github.com/sigp/lighthouse/issues/3069#issuecomment-1134219221

Users have 2 options:

1. Use multiple non auth execution endpoints for deposit processing pre-merge

2. Use a single jwt authenticated execution endpoint for both execution layer and deposit processing post merge

Related https://github.com/sigp/lighthouse/issues/3118

To enable jwt authenticated deposit processing, this PR removes the calls to `net_version` as the `net` namespace is not exposed in the auth server in execution clients.

Moving away from using `networkId` is a good step in my opinion as it doesn't provide us with any added guarantees over `chainId`. See https://github.com/ethereum/consensus-specs/issues/2163 and https://github.com/sigp/lighthouse/issues/2115

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

Failures in our CI integration tests for Geth.

## Proposed Changes

Only connect to the authenticated execution endpoints during execution tests.

This is necessary now that it is impossible to connect to the `engine` api on an unauthenticated endpoint.

See https://github.com/ethereum/go-ethereum/pull/24997

## Additional Info

As these tests break semi-regularly, I have kept logs enabled to ease future debugging.

I've also updated the Nethermind tests, although these weren't broken. This should future-proof us if Nethermind decides to follow suit with Geth

## Issue Addressed

N/A

## Proposed Changes

Preemptively switch Nethermind integration tests to use the `master` branch along with the baked in `kiln` config.

## Additional Info

There have been some spurious timeouts across CI so this also increases the timeout to 20s.

## Issue Addressed

This fixes the low-hanging Clippy lints introduced in Rust 1.61 (due any hour now). It _ignores_ one lint, because fixing it requires a structural refactor of the validator client that needs to be done delicately. I've started on that refactor and will create another PR that can be reviewed in more depth in the coming days. I think we should merge this PR in the meantime to unblock CI.

## Issue Addressed

Web3Signer validators do not support client authentication. This means the `--tls-known-clients-file` option on Web3Signer can't be used with Lighthouse.

## Proposed Changes

Add two new fields to Web3Signer validators, `client_identity_path` and `client_identity_password`, which specify the path and password for a PKCS12 file containing a certificate and private key. If `client_identity_path` is present, use the certificate for SSL client authentication.

## Additional Info

I am successfully validating on Prater using Web3Signer with client authentication.

## Proposed Changes

Reduce post-merge disk usage by not storing finalized execution payloads in Lighthouse's database.

⚠️ **This is achieved in a backwards-incompatible way for networks that have already merged** ⚠️. Kiln users and shadow fork enjoyers will be unable to downgrade after running the code from this PR. The upgrade migration may take several minutes to run, and can't be aborted after it begins.

The main changes are:

- New column in the database called `ExecPayload`, keyed by beacon block root.

- The `BeaconBlock` column now stores blinded blocks only.

- Lots of places that previously used full blocks now use blinded blocks, e.g. analytics APIs, block replay in the DB, etc.

- On finalization:

- `prune_abandoned_forks` deletes non-canonical payloads whilst deleting non-canonical blocks.

- `migrate_db` deletes finalized canonical payloads whilst deleting finalized states.

- Conversions between blinded and full blocks are implemented in a compositional way, duplicating some work from Sean's PR #3134.

- The execution layer has a new `get_payload_by_block_hash` method that reconstructs a payload using the EE's `eth_getBlockByHash` call.

- I've tested manually that it works on Kiln, using Geth and Nethermind.

- This isn't necessarily the most efficient method, and new engine APIs are being discussed to improve this: https://github.com/ethereum/execution-apis/pull/146.

- We're depending on the `ethers` master branch, due to lots of recent changes. We're also using a workaround for https://github.com/gakonst/ethers-rs/issues/1134.

- Payload reconstruction is used in the HTTP API via `BeaconChain::get_block`, which is now `async`. Due to the `async` fn, the `blocking_json` wrapper has been removed.

- Payload reconstruction is used in network RPC to serve blocks-by-{root,range} responses. Here the `async` adjustment is messier, although I think I've managed to come up with a reasonable compromise: the handlers take the `SendOnDrop` by value so that they can drop it on _task completion_ (after the `fn` returns). Still, this is introducing disk reads onto core executor threads, which may have a negative performance impact (thoughts appreciated).

## Additional Info

- [x] For performance it would be great to remove the cloning of full blocks when converting them to blinded blocks to write to disk. I'm going to experiment with a `put_block` API that takes the block by value, breaks it into a blinded block and a payload, stores the blinded block, and then re-assembles the full block for the caller.

- [x] We should measure the latency of blocks-by-root and blocks-by-range responses.

- [x] We should add integration tests that stress the payload reconstruction (basic tests done, issue for more extensive tests: https://github.com/sigp/lighthouse/issues/3159)

- [x] We should (manually) test the schema v9 migration from several prior versions, particularly as blocks have changed on disk and some migrations rely on being able to load blocks.
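The by-value `put_block` idea from the first item above can be sketched like this. It is only an illustration under loud assumptions: the types are simplified stand-ins, `toy_root` is a placeholder and not a real hash, `encode` is not SSZ, and the in-memory map stands in for the database column.

```rust
use std::collections::HashMap;

/// Hypothetical, heavily simplified payload type.
#[derive(Debug, PartialEq)]
pub struct ExecutionPayload {
    pub transactions: Vec<Vec<u8>>,
}

/// Hypothetical blinded block: it keeps a commitment instead of the payload.
#[derive(Debug, PartialEq)]
pub struct BlindedBlock {
    pub slot: u64,
    pub payload_root: u64,
}

/// Hypothetical full block.
#[derive(Debug, PartialEq)]
pub struct FullBlock {
    pub slot: u64,
    pub payload: ExecutionPayload,
}

/// Placeholder commitment (NOT a real hash), used only for illustration.
fn toy_root(payload: &ExecutionPayload) -> u64 {
    payload.transactions.iter().map(|tx| tx.len() as u64).sum()
}

impl FullBlock {
    /// Split into blinded block and payload by moving fields, without cloning.
    pub fn deconstruct(self) -> (BlindedBlock, ExecutionPayload) {
        let payload_root = toy_root(&self.payload);
        (BlindedBlock { slot: self.slot, payload_root }, self.payload)
    }
}

impl BlindedBlock {
    /// Toy fixed-width encoding standing in for real serialization.
    pub fn encode(&self) -> Vec<u8> {
        let mut bytes = Vec::with_capacity(16);
        bytes.extend_from_slice(&self.slot.to_le_bytes());
        bytes.extend_from_slice(&self.payload_root.to_le_bytes());
        bytes
    }

    /// Re-attach the payload to rebuild the full block.
    pub fn into_full(self, payload: ExecutionPayload) -> FullBlock {
        FullBlock { slot: self.slot, payload }
    }
}

/// In-memory stand-in for the block column, keyed by a toy block root.
#[derive(Default)]
pub struct Store {
    pub blinded_bytes: HashMap<u64, Vec<u8>>,
}

impl Store {
    /// Take the block by value, persist only the blinded form, and hand the
    /// re-assembled full block back to the caller.
    pub fn put_block(&mut self, block_root: u64, block: FullBlock) -> FullBlock {
        let (blinded, payload) = block.deconstruct();
        self.blinded_bytes.insert(block_root, blinded.encode());
        blinded.into_full(payload)
    }
}
```

The point of the design is that the payload's transaction data is moved rather than copied: only the small blinded block is serialized.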

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Proposed Changes

Recently, changes to Nethermind's Kiln branch have broken our integration tests.

This PR updates the chainspec to Kiln to ensure proper compatibility.

## Issue Addressed

N/A

## Proposed Changes

https://github.com/sigp/lighthouse/pull/3133 changed the rpc type limits to be fork aware i.e. if our current fork based on wall clock slot is Altair, then we apply only altair rpc type limits. This is a bug because phase0 blocks can still be sent over rpc and phase 0 block minimum size is smaller than altair block minimum size. So a phase0 block with `size < SIGNED_BEACON_BLOCK_ALTAIR_MIN` will return an `InvalidData` error as it doesn't pass the rpc types bound check.

This error can be seen when we try syncing pre-altair blocks with size smaller than `SIGNED_BEACON_BLOCK_ALTAIR_MIN`.

This PR fixes the issue by also accounting for forks earlier than current_fork in the rpc limits calculation in the `rpc_block_limits_by_fork` function. I decided to hardcode the limits in the function because that seemed simpler than calculating previous forks based on current fork and doing a min across forks. Adding a new fork variant is simple and the limits can be easily checked in a review.

Adds unit tests and modifies the syncing simulator to check the syncing from across fork boundaries.

The syncing simulator's block 1 would always be of phase 0 minimum size (404 bytes) which is smaller than altair min block size (since block 1 contains no attestations).
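As a rough sketch of the fix described above (not the actual implementation): the function name `rpc_block_limits_by_fork` and the 404-byte phase0 minimum come from this description, while `ForkName`, `RpcLimits`, `fork_only_limits` and every other constant value are hypothetical placeholders chosen for illustration.

```rust
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum ForkName {
    Base,
    Altair,
}

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct RpcLimits {
    pub min: usize,
    pub max: usize,
}

impl RpcLimits {
    pub fn is_out_of_bounds(&self, len: usize) -> bool {
        len < self.min || len > self.max
    }
}

/// From the description above: minimum phase0 block size in bytes.
pub const PHASE0_MIN: usize = 404;
/// Placeholder values, NOT the real constants.
pub const PHASE0_MAX: usize = 100_000;
pub const ALTAIR_MIN: usize = 600;
pub const ALTAIR_MAX: usize = 120_000;

/// Behaviour described as the bug: only the current fork's bounds apply.
pub fn fork_only_limits(current_fork: ForkName) -> RpcLimits {
    match current_fork {
        ForkName::Base => RpcLimits { min: PHASE0_MIN, max: PHASE0_MAX },
        ForkName::Altair => RpcLimits { min: ALTAIR_MIN, max: ALTAIR_MAX },
    }
}

/// Sketch of the fix: the bounds for a fork also cover all earlier forks,
/// hardcoded per variant so they are easy to check in review.
pub fn rpc_block_limits_by_fork(current_fork: ForkName) -> RpcLimits {
    match current_fork {
        ForkName::Base => RpcLimits { min: PHASE0_MIN, max: PHASE0_MAX },
        // Phase0 blocks can still arrive after the Altair fork.
        ForkName::Altair => RpcLimits { min: PHASE0_MIN, max: ALTAIR_MAX },
    }
}
```

With these placeholder numbers, a 404-byte phase0 block is rejected by `fork_only_limits(ForkName::Altair)` but accepted by `rpc_block_limits_by_fork(ForkName::Altair)`.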

## Proposed Changes

I did some gardening 🌳 in our dependency tree:

- Remove duplicate versions of `warp` (git vs patch)

- Remove duplicate versions of lots of small deps: `cpufeatures`, `ethabi`, `ethereum-types`, `bitvec`, `nix`, `libsecp256k1`.

- Update MDBX (should resolve #3028). I tested and Lighthouse compiles on Windows 11 now.

- Restore `psutil` back to upstream

- Make some progress updating everything to rand 0.8. There are a few crates stuck on 0.7.

Hopefully this puts us on a better footing for future `cargo audit` issues, and improves compile times slightly.

## Additional Info

Some crates are held back by issues with `zeroize`. libp2p-noise depends on [`chacha20poly1305`](https://crates.io/crates/chacha20poly1305) which depends on zeroize < v1.5, and we can only have one version of zeroize because it's post 1.0 (see https://github.com/rust-lang/cargo/issues/6584). The latest version of `zeroize` is v1.5.4, which is used by the new versions of many other crates (e.g. `num-bigint-dig`). Once a new version of chacha20poly1305 is released we can update libp2p-noise and upgrade everything to the latest `zeroize` version.

I've also opened a PR to `blst` related to zeroize: https://github.com/supranational/blst/pull/111

## Issue Addressed

MEV boost compatibility

## Proposed Changes

See #2987

## Additional Info

This is blocked on the stabilization of a couple specs, [here](https://github.com/ethereum/beacon-APIs/pull/194) and [here](https://github.com/flashbots/mev-boost/pull/20).

Additional TODO's and outstanding questions

- [ ] MEV boost JWT Auth

- [ ] Will `builder_proposeBlindedBlock` return the revealed payload for the BN to propagate

- [ ] Should we remove `private-tx-proposals` flag and communicate BN <> VC with blinded blocks by default once these endpoints enter the beacon-API's repo? This simplifies merge transition logic.

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Issue Addressed

Resolves #2936

## Proposed Changes

Adds functionality for calling [`validator/prepare_beacon_proposer`](https://ethereum.github.io/beacon-APIs/?urls.primaryName=dev#/Validator/prepareBeaconProposer) in advance.

There is a `BeaconChain::prepare_beacon_proposer` method which, when called, computes the proposer for the next slot. If that proposer has been registered via the `validator/prepare_beacon_proposer` API method, then the `beacon_chain.execution_layer` will be provided the `PayloadAttributes` for us in all future forkchoiceUpdated calls. An artificial forkchoiceUpdated call will be created 4s before each slot, when the head updates and when a validator updates their information.

Additionally, I added strict ordering for calls from the `BeaconChain` to the `ExecutionLayer`. I'm not certain the `ExecutionLayer` will always maintain this ordering, but it's a good start to have consistency from the `BeaconChain`. There are some deadlock opportunities introduced, they are documented in the code.

## Additional Info

- ~~Blocked on #2837~~

Co-authored-by: realbigsean <seananderson33@GMAIL.com>

## Issue Addressed

Resolves #3015

## Proposed Changes

Add JWT token based authentication to engine api requests. The jwt secret key is read from the provided file and is used to sign tokens that are used for authenticated communication with the EL node.

- [x] Interop with geth (synced `merge-devnet-4` with the `merge-kiln-v2` branch on geth)

- [x] Interop with other EL clients (nethermind on `merge-devnet-4`)

- [x] ~Implement `zeroize` for jwt secrets~

- [x] Add auth server tests with `mock_execution_layer`

- [x] Get auth working with the `execution_engine_integration` tests

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

As discussed on last-night's consensus call, the testnets next week will target the [Kiln Spec v2](https://hackmd.io/@n0ble/kiln-spec).

Presently, we support Kiln V1. V2 is backwards compatible, except for renaming `random` to `prev_randao` in:

- https://github.com/ethereum/execution-apis/pull/180

- https://github.com/ethereum/consensus-specs/pull/2835

With this PR we'll no longer be compatible with the existing Kintsugi and Kiln testnets, however we'll be ready for the testnets next week. I raised this breaking change in the call last night, we are all keen to move forward and break things.

We now target the [`merge-kiln-v2`](https://github.com/MariusVanDerWijden/go-ethereum/tree/merge-kiln-v2) branch for interop with Geth. This required adding the `--http.aauthport` to the tester to avoid a port conflict at startup.

### Changes to exec integration tests

There's some change in the `merge-kiln-v2` version of Geth that means it can't compile on a vanilla Github runner. Bumping the `go` version on the runner solved this issue.

Whilst addressing this, I refactored the `testing/execution_integration` crate to be a *binary* rather than a *library* with tests. This means that we don't need to run the `build.rs` and build Geth whenever someone runs `make lint` or `make test-release`. This is nice for everyday users, but it's also nice for CI so that we can have a specific runner for these tests and we don't need to ensure *all* runners support everything required to build all execution clients.

## More Info

- [x] ~~EF tests are failing since the rename has broken some tests that reference the old field name. I have been told there will be new tests released in the coming days (25/02/22 or 26/02/22).~~

## Issue Addressed

#3006

## Proposed Changes

This PR changes the default behaviour of lighthouse to ignore discovered IPs that are not globally routable. It adds a CLI flag, --enable-local-discovery to permit the non-global IPs in discovery.

NOTE: We should take care in merging this as it will break current set-ups that rely on local IP discovery. I made this the non-default behaviour because we don't really want to waste resources attempting to connect to non-routable addresses, and we don't want to propagate these to others (on the chance we can connect to one of these local nodes). This improves discovery's efficiency.
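A simplified sketch of the filtering rule, assuming only stable standard-library checks. The function names are hypothetical, the check is a conservative approximation rather than a complete "globally routable" test, and `enable_local_discovery` mirrors the `--enable-local-discovery` flag described above.

```rust
use std::net::{IpAddr, Ipv4Addr, Ipv6Addr};

/// Approximate check for IPv4 addresses that are not globally routable.
fn v4_is_local(v4: &Ipv4Addr) -> bool {
    v4.is_private()
        || v4.is_loopback()
        || v4.is_link_local()
        || v4.is_unspecified()
        || v4.is_broadcast()
        || v4.is_documentation()
}

/// Approximate check for IPv6 addresses that are not globally routable.
fn v6_is_local(v6: &Ipv6Addr) -> bool {
    let first = v6.segments()[0];
    v6.is_loopback()
        || v6.is_unspecified()
        || (first & 0xfe00) == 0xfc00 // unique local, fc00::/7
        || (first & 0xffc0) == 0xfe80 // link-local unicast, fe80::/10
}

/// Hypothetical helper: should a discovered address be kept?
pub fn accept_discovered_ip(ip: &IpAddr, enable_local_discovery: bool) -> bool {
    if enable_local_discovery {
        return true;
    }
    match ip {
        IpAddr::V4(v4) => !v4_is_local(v4),
        IpAddr::V6(v6) => !v6_is_local(v6),
    }
}
```

With the flag unset, an address such as `192.168.1.10` is dropped while `8.8.8.8` is kept. With the flag set, both are kept.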

## Issue Addressed

NA

## Proposed Changes

Adds the functionality to allow blocks to be validated/invalidated after their import as per the [optimistic sync spec](https://github.com/ethereum/consensus-specs/blob/dev/sync/optimistic.md#how-to-optimistically-import-blocks). This means:

- Updating `ProtoArray` to allow flipping the `execution_status` of ancestors/descendants based on payload validity updates.

- Creating separation between `execution_layer` and the `beacon_chain` by creating a `PayloadStatus` struct.

- Refactoring how the `execution_layer` selects a `PayloadStatus` from the multiple statuses returned from multiple EEs.

- Adding testing framework for optimistic imports.

- Add `ExecutionBlockHash(Hash256)` new-type struct to avoid confusion between *beacon block roots* and *execution payload hashes*.

- Add `merge` to [`FORKS`](c3a793fd73/Makefile (L17)) in the `Makefile` to ensure we test the beacon chain with merge settings.

- Fix some tests here that were failing due to a missing execution layer.
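To illustrate why the `ExecutionBlockHash(Hash256)` new-type helps, here is a minimal sketch. `Hash256` is a hypothetical stand-in for the real 32-byte hash type, and `from_root`, `into_root` and `describe_payload` are illustrative names rather than a statement of the actual API.

```rust
/// Hypothetical stand-in for the real 32-byte hash type.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct Hash256(pub [u8; 32]);

/// New-type wrapper: same bytes, distinct type.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct ExecutionBlockHash(Hash256);

impl ExecutionBlockHash {
    /// Conversions are explicit, so mixing the two kinds of hash is a
    /// deliberate act rather than an accident.
    pub fn from_root(root: Hash256) -> Self {
        Self(root)
    }

    pub fn into_root(self) -> Hash256 {
        self.0
    }
}

/// Only accepts an execution block hash. Passing a plain `Hash256`
/// (for example a beacon block root) is a compile-time type error.
pub fn describe_payload(hash: ExecutionBlockHash) -> String {
    format!("execution block 0x{:02x}..", hash.into_root().0[0])
}
```

The wrapper has no runtime cost beyond the wrapped value; the benefit is purely that the compiler rejects a beacon block root where an execution payload hash is expected.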

## TODO

- [ ] Balance tests

Co-authored-by: Mark Mackey <mark@sigmaprime.io>

## Proposed Changes

Lots of lint updates related to `flat_map`, `unwrap_or_else` and string patterns. I did a little more creative refactoring in the op pool, but otherwise followed Clippy's suggestions.

## Additional Info

We need this PR to unblock CI.

## Issue Addressed

NA

## Proposed Changes

This PR extends #3018 to address my review comments there and add automated integration tests with Geth (and other implementations, in the future).

I've also de-duplicated the "unused port" logic by creating an `common/unused_port` crate.

## Additional Info

I'm not sure if we want to merge this PR, or update #3018 and merge that. I don't mind, I'm primarily opening this PR to make sure CI works.

Co-authored-by: Mark Mackey <mark@sigmaprime.io>

## Issue Addressed

Closes #3014

## Proposed Changes

- Rename `receipt_root` to `receipts_root`

- Rename `execute_payload` to `notify_new_payload`

- This is slightly weird since we modify everything except the actual HTTP call to the engine API. That change is expected to be implemented in #2985 (cc @ethDreamer)

- Enable "random" tests for Bellatrix.

## Notes

This will *partially* break compatibility with Kintsugi testnets in order to gain compatibility with [Kiln](https://hackmd.io/@n0ble/kiln-spec) testnets. I think it will only break the BN APIs due to the `receipts_root` change, however it might have some other effects too.

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Issue Addressed

N/A

## Proposed Changes

Removes all configurations and hard-coded rules related to the deprecated Pyrmont testnet.

## Additional Info

Pyrmont is deprecated/will be shut down after being used for scenario testing, this PR removes configurations related to it.

Co-authored-by: Zachinquarantine <zachinquarantine@yahoo.com>

## Issue Addressed

#2883

## Proposed Changes

* Added `suggested-fee-recipient` & `suggested-fee-recipient-file` flags to validator client (similar to graffiti / graffiti-file implementation).

* Added proposer preparation service to VC, which sends the fee-recipient of all known validators to the BN via [/eth/v1/validator/prepare_beacon_proposer](https://github.com/ethereum/beacon-APIs/pull/178) api once per slot

* Added [/eth/v1/validator/prepare_beacon_proposer](https://github.com/ethereum/beacon-APIs/pull/178) api endpoint and preparation data caching

* Added cleanup routine to remove cached proposer preparations when not updated for 2 epochs
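The caching and cleanup behaviour from the list above could look roughly like this. It is a sketch under assumptions: the type and method names are invented, the fee recipient is kept as a plain string, and "not updated for 2 epochs" is interpreted as an age of two or more epochs.

```rust
use std::collections::HashMap;

/// Entries older than this many epochs are dropped (from the list above).
pub const PREPARATION_LIFETIME_EPOCHS: u64 = 2;

/// Hypothetical cached preparation entry.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct ProposerPreparation {
    pub fee_recipient: String,
    pub updated_epoch: u64,
}

/// Hypothetical cache keyed by validator index.
#[derive(Default)]
pub struct PreparationCache {
    entries: HashMap<u64, ProposerPreparation>,
}

impl PreparationCache {
    /// Insert or refresh the entry for a validator.
    pub fn update(&mut self, validator_index: u64, fee_recipient: &str, current_epoch: u64) {
        self.entries.insert(
            validator_index,
            ProposerPreparation {
                fee_recipient: fee_recipient.to_string(),
                updated_epoch: current_epoch,
            },
        );
    }

    /// Remove entries that have not been refreshed recently enough.
    pub fn prune(&mut self, current_epoch: u64) {
        self.entries.retain(|_, prep| {
            current_epoch.saturating_sub(prep.updated_epoch) < PREPARATION_LIFETIME_EPOCHS
        });
    }

    pub fn get(&self, validator_index: u64) -> Option<&ProposerPreparation> {
        self.entries.get(&validator_index)
    }
}
```

For example, an entry last updated in epoch 10 is removed by `prune(12)`, while one updated in epoch 11 survives.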

## Additional Info

Changed the implementation following the discussion in #2883.

Co-authored-by: pk910 <philipp@pk910.de>

Co-authored-by: Paul Hauner <paul@paulhauner.com>

Co-authored-by: Philipp K <philipp@pk910.de>

## Issue Addressed

Closes #2938

## Proposed Changes

* Build and publish images with a `-modern` suffix which enable CPU optimizations for modern hardware.

* Add docs for the plethora of available images!

* Unify all the Docker workflows in `docker.yml` (including for tagged releases).

## Additional Info

The `Dockerfile` is no longer used by our Docker Hub builds, as we use `cross` and a generic approach for ARM and x86. There's a new CI job `docker-build-from-source` which tests the `Dockerfile` without publishing anything.

## Proposed Changes

Change the canonical fork name for the merge to Bellatrix. Keep other merge naming the same to avoid churn.

I've also fixed and enabled the `fork` and `transition` tests for Bellatrix, and the v1.1.7 fork choice tests.

Additionally, the `BellatrixPreset` has been added with tests. It gets served via the `/config/spec` API endpoint along with the other presets.

## Issue Addressed

Automates a build and push to antithesis servers on merges to unstable. They run tests against lighthouse daily and have requested more frequent pushes. Currently we are just manually pushing stable images when we have a new release.

## Proposed Changes

- Add a `Dockerfile.libvoidstar`

- Add the `libvoidstar.so` binary

- Add a new workflow to automatically build and push on merges to unstable

## Additional Info

Requires adding the following secrets

- `ANTITHESIS_USERNAME`

- `ANTITHESIS_PASSWORD`

- `ANTITHESIS_REPOSITORY`

- `ANTITHESIS_SERVER`

Tested here: https://github.com/realbigsean/lighthouse/actions/runs/1612821446

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: realbigsean <sean@sigmaprime.io>

## Proposed Changes

Update `superstruct` to bring in @realbigsean's fixes necessary for MEV-compatible private beacon block types (a la #2795).

The refactoring is due to another change in superstruct that allows partial getters to be auto-generated.

## Issue Addressed

Successor to #2431

## Proposed Changes

* Add a `BlockReplayer` struct to abstract over the intricacies of calling `per_slot_processing` and `per_block_processing` while avoiding unnecessary tree hashing.

* Add a variant of the forwards state root iterator that does not require an `end_state`.

* Use the `BlockReplayer` when reconstructing states in the database. Use the efficient forwards iterator for frozen states.

* Refactor the iterators to remove `Arc<HotColdDB>` (this seems to be neater than making _everything_ an `Arc<HotColdDB>` as I did in #2431).

Supplying the state roots allows us to avoid building a tree hash cache at all when reconstructing historic states, which saves around 1 second flat (regardless of `slots-per-restore-point`). This is a small percentage of worst-case state load times with 200K validators and SPRP=2048 (~15s vs ~16s) but a significant speed-up for more frequent restore points: state loads with SPRP=32 should be now consistently <500ms instead of 1.5s (a ~3x speedup).

## Additional Info

Required by https://github.com/sigp/lighthouse/pull/2628

## Issue Addressed

Resolves: https://github.com/sigp/lighthouse/issues/2741

Includes: https://github.com/sigp/lighthouse/pull/2853 so that we can get ssz static tests passing here on v1.1.6. If we want to merge that first, we can make this diff slightly smaller

## Proposed Changes

- Changes the `justified_epoch` and `finalized_epoch` in the `ProtoArrayNode` each to an `Option<Checkpoint>`. The `Option` is necessary only for the migration, so not ideal. But does allow us to add a default logic to `None` on these fields during the database migration.

- Adds a database migration from a legacy fork choice struct to the new one, searching for all necessary block roots in fork choice by iterating through blocks in the db.

- updates related to https://github.com/ethereum/consensus-specs/pull/2727

- We will have to update the persisted forkchoice to make sure the justified checkpoint stored is correct according to the updated fork choice logic. This boils down to setting the forkchoice store's justified checkpoint to the justified checkpoint of the block that advanced the finalized checkpoint to the current one.

- AFAICT there's no migration steps necessary for the update to allow applying attestations from prior blocks, but would appreciate confirmation on that

- I updated the consensus spec tests to v1.1.6 here, but they will fail until we also implement the proposer score boost updates. I confirmed that the previously failing scenario `new_finalized_slot_is_justified_checkpoint_ancestor` will now pass after the boost updates, but haven't confirmed _all_ tests will pass because I just quickly stubbed out the proposer boost test scenario formatting.

- This PR now also includes proposer boosting https://github.com/ethereum/consensus-specs/pull/2730

## Additional Info

I realized checking justified and finalized roots in fork choice makes it more likely that we trigger this bug: https://github.com/ethereum/consensus-specs/pull/2727

It's possible the combination of justified checkpoint and finalized checkpoint in the forkchoice store is different from in any block in fork choice. So when trying to startup our store's justified checkpoint seems invalid to the rest of fork choice (but it should be valid). When this happens we get an `InvalidBestNode` error and fail to start up. So I'm including that bugfix in this branch.

Todo:

- [x] Fix fork choice tests

- [x] Self review

- [x] Add fix for https://github.com/ethereum/consensus-specs/pull/2727

- [x] Rebase onto Kintsugi

- [x] Fix `num_active_validators` calculation as @michaelsproul pointed out

- [x] Clean up db migrations

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

N/A

## Proposed Changes

Changes required for the `merge-devnet-3`. Added some more non substantive renames on top of @realbigsean 's commit.

Note: this doesn't include the proposer boosting changes in kintsugi v3.

This devnet isn't running with the proposer boosting fork choice changes so if we are looking to merge https://github.com/sigp/lighthouse/pull/2822 into `unstable`, then I think we should just maintain this branch for the devnet temporarily.

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

New rust lints

## Proposed Changes

- Boxing some enum variants

- removing some unused fields (is the validator lockfile unused? seemed so to me)

## Additional Info

- some error fields were marked as dead code but are logged out in areas

- left some dead fields in our ef test code because I assume they are useful for debugging?

Co-authored-by: realbigsean <seananderson33@gmail.com>

* Fix fork choice after rebase

* Remove paulhauner warp dep

* Fix fork choice test compile errors

* Assume fork choice payloads are valid

* Add comment

* Ignore new tests

* Fix error in test skipping

* Fix arbitrary check kintsugi

* Add merge chain spec fields, and a function to determine which constant to use based on the state variant

* increment spec test version

* Remove `Transaction` enum wrapper

* Remove Transaction new-type

* Remove gas validations

* Add `--terminal-block-hash-epoch-override` flag

* Increment spec tests version to 1.1.5

* Remove extraneous gossip verification https://github.com/ethereum/consensus-specs/pull/2687

* - Remove unused Error variants

- Require both "terminal-block-hash-epoch-override" and "terminal-block-hash-override" when either flag is used

* - Remove a couple more unused Error variants

Co-authored-by: Paul Hauner <paul@paulhauner.com>

* update initializing from eth1 for merge genesis

* read execution payload header from file lcli

* add `create-payload-header` command to `lcli`

* fix base fee parsing

* Apply suggestions from code review

* default `execution_payload_header` bool to false when deserializing `meta.yml` in EF tests

Co-authored-by: Paul Hauner <paul@paulhauner.com>

Added Execution Payload from Rayonism Fork

Updated new Containers to match Merge Spec

Updated BeaconBlockBody for Merge Spec

Completed updating BeaconState and BeaconBlockBody

Modified ExecutionPayload<T> to use Transaction<T>

Mostly Finished Changes for beacon-chain.md

Added some things for fork-choice.md

Update to match new fork-choice.md/fork.md changes

ran cargo fmt

Added Missing Pieces in eth2_libp2p for Merge

fix ef test

Various Changes to Conform Closer to Merge Spec

## Issue Addressed

Closes #1996

## Proposed Changes

Run a second `Logger` via `sloggers` which logs to a file in the background with:

- separate `debug-level` for background and terminal logging

- the ability to limit log size

- rotation through a customizable number of log files

- an option to compress old log files (`.gz` format)

Add the following new CLI flags:

- `--logfile-debug-level`: The debug level of the log files

- `--logfile-max-size`: The maximum size of each log file

- `--logfile-max-number`: The number of old log files to store

- `--logfile-compress`: Whether to compress old log files

By default background logging uses the `debug` log level and saves logfiles to:

- Beacon Node: `$HOME/.lighthouse/$network/beacon/logs/beacon.log`

- Validator Client: `$HOME/.lighthouse/$network/validators/logs/validator.log`

Or, when using the `--datadir` flag:

`$datadir/beacon/logs/beacon.log` and `$datadir/validators/logs/validator.log`

Once rotated, old logs are stored like so: `beacon.log.1`, `beacon.log.2` etc.

> Note: `beacon.log.1` is always newer than `beacon.log.2`.

## Additional Info

Currently the default value of `--logfile-max-size` is 200 (MB) and `--logfile-max-number` is 5.

This means that the maximum storage space that the logs will take up by default is 1.2GB.

(200MB x 5 from old log files + <200MB the current logfile being written to)

Happy to adjust these default values to whatever people think is appropriate.
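The 1.2GB figure follows from counting the rotated files plus the file currently being written. A minimal sketch of that arithmetic, where the function name `max_log_storage_mb` is hypothetical and not part of Lighthouse:

```rust
/// Worst-case disk usage in MB: `max_number` rotated files plus the one
/// file currently being written, each at most `max_size_mb` in size.
/// (Hypothetical helper, for illustration only.)
fn max_log_storage_mb(max_size_mb: u64, max_number: u64) -> u64 {
    max_size_mb * (max_number + 1)
}

fn main() {
    // Defaults quoted above: 200 MB per file, 5 old files kept.
    let total = max_log_storage_mb(200, 5);
    println!("upper bound on log storage: {} MB", total);
}
```

This ignores `--logfile-compress`, which would shrink the rotated files and lower the bound.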

It's also worth noting that when logging to a file, we lose our custom `slog` formatting. This means the logfile logs look like this:

```

Oct 27 16:02:50.305 INFO Lighthouse started, version: Lighthouse/v2.0.1-8edd9d4+, module: lighthouse:413

Oct 27 16:02:50.305 INFO Configured for network, name: prater, module: lighthouse:414

```

## Issue Addressed

Resolves #2775

## Proposed Changes

1. Reduces the target_peers to stop recursive discovery requests.

2. Changes the eth1 `auto_update_interval` config parameter to be equal to the eth1 block time in the simulator. Without this, the eth1 `latest_cached_block` wouldn't be updated for some slots around `eth1_voting_period` boundaries which would lead to the eth1 cache falling out of sync. This is what caused the `Syncing eth1 block cache` and `No valid eth1_data votes` logs.

## Issue Addressed

Resolves #2545

## Proposed Changes

Adds the long-overdue EF tests for fork choice. Although we had pretty good coverage via other implementations that closely followed our approach, it is nonetheless important for us to implement these tests too.

During testing I found that we were using a hard-coded `SAFE_SLOTS_TO_UPDATE_JUSTIFIED` value rather than one from the `ChainSpec`. This caused a failure during a minimal preset test. This doesn't represent a risk to mainnet or testnets, since the hard-coded value matched the mainnet preset.
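To illustrate why a hard-coded constant only breaks on a non-mainnet preset, here is a rough sketch. The `ChainSpec` struct below is a trimmed-down stand-in, not the real Lighthouse type, and the preset values (8 for mainnet, 2 for minimal) are assumptions made for the example:

```rust
/// Hypothetical, trimmed-down stand-in for a chain specification.
struct ChainSpec {
    safe_slots_to_update_justified: u64,
}

impl ChainSpec {
    // Preset values below are assumed for illustration.
    fn mainnet() -> Self {
        Self { safe_slots_to_update_justified: 8 }
    }
    fn minimal() -> Self {
        Self { safe_slots_to_update_justified: 2 }
    }
}

/// Reads the threshold from the spec rather than from a constant, so the
/// result changes with the preset in use.
fn within_safe_slots(slots_since_epoch_start: u64, spec: &ChainSpec) -> bool {
    slots_since_epoch_start < spec.safe_slots_to_update_justified
}

fn main() {
    // Three slots into the epoch the two presets disagree, which is the
    // kind of divergence a mainnet-valued constant would hide.
    println!("mainnet: {}", within_safe_slots(3, &ChainSpec::mainnet()));
    println!("minimal: {}", within_safe_slots(3, &ChainSpec::minimal()));
}
```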

## Failing Cases

There is one failing case which is presently marked as `SkippedKnownFailure`:

```

case 4 ("new_finalized_slot_is_justified_checkpoint_ancestor") from /home/paul/development/lighthouse/testing/ef_tests/consensus-spec-tests/tests/minimal/phase0/fork_choice/on_block/pyspec_tests/new_finalized_slot_is_justified_checkpoint_ancestor failed with NotEqual:

head check failed: Got Head { slot: Slot(40), root: 0x9183dbaed4191a862bd307d476e687277fc08469fc38618699863333487703e7 } | Expected Head { slot: Slot(24), root: 0x105b49b51bf7103c182aa58860b039550a89c05a4675992e2af703bd02c84570 }

```

This failure is due to #2741. It's not a particularly high priority issue at the moment, so we can fix it after merging this PR.

## Issue Addressed

Currently, running the Web3Signer tests locally without having a java runtime environment installed and available on your PATH will result in the tests failing.

## Proposed Changes

Add a note regarding the Web3Signer tests being dependent on java (similar to what we have for `ganache-cli`)

## Issue Addressed

e895074ba updated the altair fork and now that we are a week away this test no longer panics.

## Proposed Changes

Remove the expected panic and explanatory note.

## Issue Addressed

When compiling with Rust 1.56.0 the compiler generates 3 instances of this warning:

```

warning: trailing semicolon in macro used in expression position

--> common/eth2_network_config/src/lib.rs:181:24

|

181 | })?;

| ^

...

195 | let deposit_contract_deploy_block = load_from_file!(DEPLOY_BLOCK_FILE);

| ---------------------------------- in this macro invocation

|

= note: `#[warn(semicolon_in_expressions_from_macros)]` on by default

= warning: this was previously accepted by the compiler but is being phased out; it will become a hard error in a future release!

= note: for more information, see issue #79813 <https://github.com/rust-lang/rust/issues/79813>

= note: this warning originates in the macro `load_from_file` (in Nightly builds, run with -Z macro-backtrace for more info)

```

This warning is completely harmless, but will be visible to users compiling Lighthouse v2.0.1 (or earlier) with Rust 1.56.0 (to be released October 21st). It is **completely safe** to ignore this warning; it's just a superficial change to Rust's syntax.

## Proposed Changes

This PR removes the semicolon as recommended, and fixes the new Clippy lints from 1.56.0.
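For readers unfamiliar with the lint, a small sketch of the fixed shape: the macro body is a single expression with no trailing semicolon, so it can sit on the right-hand side of a `let`. The names `parse_field!` and `read_deploy_block` are hypothetical and only loosely modelled on the `load_from_file!` usage in the warning:

```rust
// The expansion ends in `?` with no trailing `;`, so the macro can be used
// in expression position without triggering
// `semicolon_in_expressions_from_macros`.
macro_rules! parse_field {
    ($text:expr) => {
        $text
            .trim()
            .parse::<u64>()
            .map_err(|e| format!("unable to parse {:?}: {}", $text, e))?
    };
}

/// Hypothetical caller: the macro result is bound directly with `let`.
fn read_deploy_block(text: &str) -> Result<u64, String> {
    let block = parse_field!(text);
    Ok(block)
}

fn main() {
    println!("{:?}", read_deploy_block(" 42\n"));
    println!("{:?}", read_deploy_block("not a number"));
}
```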

## Issue Addressed

NA

## Proposed Changes

This is a wholesale rip-off of #2708, see that PR for more of a description.

I've made this PR since @realbigsean is offline and I can't merge his PR due to Github's frustrating `target-branch-check` bug. I also changed the branch to `unstable`, since I'm trying to minimize the diff between `merge-f2f`/`unstable`. I'll just rebase `merge-f2f` onto `unstable` after this PR merges.

When running `make lint` I noticed the following warning:

```

warning: patch for `fixed-hash` uses the features mechanism. default-features and features will not take effect because the patch dependency does not support this mechanism

```

So, I removed the `features` section from the patch.

## Additional Info

NA

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

NA

## Proposed Changes

This PR is near-identical to https://github.com/sigp/lighthouse/pull/2652, however it is to be merged into `unstable` instead of `merge-f2f`. Please see that PR for reasoning.

I'm making this duplicate PR to merge to `unstable` in an effort to shrink the diff between `unstable` and `merge-f2f` by doing smaller, lead-up PRs.

## Additional Info

NA

## Issue Addressed

Fix #2585

## Proposed Changes

Provide a canonical version of `test_logger` that can be used throughout Lighthouse.

## Additional Info

This allows tests to conditionally emit logging data by adding `test_logger` as the default logger. Then, when executing `cargo test --features logging/test_logger`, log output will be visible:

wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)

$ cargo test --features logging/test_logger

Finished test [unoptimized + debuginfo] target(s) in 0.02s

Running unittests (target/debug/deps/test_logger-e20115db6a5e3714)

running 1 test

Sep 10 12:53:45.212 INFO hi, module: test_logger:8

test tests::test_fn_with_logging ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests test-logger

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Or, in normal scenarios where logging isn't needed, when executing `cargo test` the log output will not be visible:

wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)

$ cargo test

Finished test [unoptimized + debuginfo] target(s) in 0.02s

Running unittests (target/debug/deps/test_logger-02e02f8d41e8cf8a)

running 1 test

test tests::test_fn_with_logging ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests test-logger

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

## Issue Addressed

NA

## Proposed Changes

Implements the "union" type from the SSZ spec for `ssz`, `ssz_derive`, `tree_hash` and `tree_hash_derive` so it may be derived for `enums`:

https://github.com/ethereum/consensus-specs/blob/v1.1.0-beta.3/ssz/simple-serialize.md#union

The union type is required for the merge, since the `Transaction` type is defined as a single-variant union `Union[OpaqueTransaction]`.

### Crate Updates

This PR will (hopefully) cause CI to publish new versions for the following crates:

- `eth2_ssz_derive`: `0.2.1` -> `0.3.0`

- `eth2_ssz`: `0.3.0` -> `0.4.0`

- `eth2_ssz_types`: `0.2.0` -> `0.2.1`

- `tree_hash`: `0.3.0` -> `0.4.0`

- `tree_hash_derive`: `0.3.0` -> `0.4.0`

Since these crates depend on each other, I've had to add a workspace-level `[patch]` for these crates. A follow-up PR will need to remove this patch once the new versions are published.

### Union Behaviors

We already had SSZ `Encode` and `TreeHash` derive for enums, however it just did a "transparent" pass-through of the inner value. Since the "union" decoding from the spec is in conflict with the transparent method, I've required that every `enum` has exactly one of the following enum-level attributes:

#### SSZ

- `#[ssz(enum_behaviour = "union")]`
    - matches the spec used for the merge
- `#[ssz(enum_behaviour = "transparent")]`
    - maintains existing functionality
    - not supported for `Decode` (never was)

#### TreeHash

- `#[tree_hash(enum_behaviour = "union")]`
    - matches the spec used for the merge
- `#[tree_hash(enum_behaviour = "transparent")]`
    - maintains existing functionality

This means that we can maintain the existing transparent behaviour, but all existing users will get a compile-time error until they explicitly opt-in to being transparent.
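As a rough illustration of what the "union" behaviour means on the wire, the sketch below hand-writes the encoding for a made-up two-variant enum: a one-byte selector followed by the serialized inner value. It does not use `ssz_derive`; the `Example` type and both functions are hypothetical.

```rust
/// Hypothetical two-variant union, used only for illustration.
#[derive(Debug, PartialEq)]
enum Example {
    Small(u8),
    Large(u16),
}

/// Union encoding: one selector byte (the variant index), then the
/// little-endian bytes of the inner value.
fn encode_union(value: &Example) -> Vec<u8> {
    match value {
        Example::Small(x) => vec![0u8, *x],
        Example::Large(x) => {
            let mut out = vec![1u8];
            out.extend_from_slice(&x.to_le_bytes());
            out
        }
    }
}

/// Decoding reads the selector first and only then knows how to interpret
/// the remaining bytes. A transparent encoding has no selector, which is
/// why it cannot be decoded unambiguously.
fn decode_union(bytes: &[u8]) -> Result<Example, String> {
    let (selector, body) = bytes.split_first().ok_or("empty input")?;
    match (*selector, body) {
        (0, [x]) => Ok(Example::Small(*x)),
        (1, [a, b]) => Ok(Example::Large(u16::from_le_bytes([*a, *b]))),
        _ => Err(format!(
            "invalid selector {} or body length {}",
            selector,
            body.len()
        )),
    }
}

fn main() {
    let bytes = encode_union(&Example::Large(0x0102));
    println!("{:?} -> {:?}", bytes, decode_union(&bytes));
}
```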

### Legacy Option Encoding

Before this PR, we already had a union-esque encoding for `Option<T>`. However, this was with the *old* SSZ spec where the union selector was 4 bytes. During merge specification, the spec was changed to use 1 byte for the selector.

Whilst the 4-byte `Option` encoding was never used in the spec, we used it in our database. Writing a migrate script for all occurrences of `Option` in the database would be painful, especially since it's used in the `CommitteeCache`. To avoid the migrate script, I added a serde-esque `#[ssz(with = "module")]` field-level attribute to `ssz_derive` so that we can opt into the 4-byte encoding on a field-by-field basis.

The `ssz::legacy::four_byte_impl!` macro allows a one-liner to define the module required for the `#[ssz(with = "module")]` for some `Option<T> where T: Encode + Decode`.
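To make the difference from the new one-byte selector concrete, here is a hand-written sketch of a four-byte-selector encoding for `Option<u64>`. It assumes a little-endian selector of `0` for `None` and `1` for `Some`; those details, and the function names, are assumptions for illustration and are not taken from the `ssz::legacy` module itself.

```rust
const SELECTOR_BYTES: usize = 4;

/// Legacy-style encoding: a 4-byte little-endian selector, followed by the
/// value's bytes when the option is `Some`. (Selector values are assumed.)
fn encode_legacy_option(value: Option<u64>) -> Vec<u8> {
    match value {
        None => 0u32.to_le_bytes().to_vec(),
        Some(x) => {
            let mut out = 1u32.to_le_bytes().to_vec();
            out.extend_from_slice(&x.to_le_bytes());
            out
        }
    }
}

fn decode_legacy_option(bytes: &[u8]) -> Result<Option<u64>, String> {
    if bytes.len() < SELECTOR_BYTES {
        return Err(format!("need at least 4 bytes, got {}", bytes.len()));
    }
    let (head, body) = bytes.split_at(SELECTOR_BYTES);
    let mut selector = [0u8; SELECTOR_BYTES];
    selector.copy_from_slice(head);
    match (u32::from_le_bytes(selector), body.len()) {
        (0, 0) => Ok(None),
        (1, 8) => {
            let mut value = [0u8; 8];
            value.copy_from_slice(body);
            Ok(Some(u64::from_le_bytes(value)))
        }
        (s, len) => Err(format!("invalid selector {} or body length {}", s, len)),
    }
}

fn main() {
    let bytes = encode_legacy_option(Some(5));
    println!("{:?} -> {:?}", bytes, decode_legacy_option(&bytes));
}
```

Under the new spec the same value would start with a single selector byte, so bytes written with one scheme cannot be read with the other. That is why fields stored in the database need to opt in explicitly.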

Notably, **I have removed `Encode` and `Decode` impls for `Option`**. I've done this to force a break on downstream users. Like I mentioned, `Option` isn't used in the spec so I don't think it'll be *that* annoying. I think it's nicer than quietly having two different union implementations or quietly breaking the existing `Option` impl.

### Crate Publish Ordering

I've modified the order in which CI publishes crates to ensure that we don't publish a crate without ensuring we already published a crate that it depends upon.
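The ordering constraint is a dependencies-first (topological) order. A minimal sketch of how such an order could be computed is below; the crate names and dependency edges are placeholders, not the real graph, and the CI change in this PR may well be a hand-maintained list rather than anything computed.

```rust
use std::collections::{BTreeMap, BTreeSet};

/// Returns crate names so that every crate appears after its dependencies.
/// Assumes the graph is acyclic, as a crate dependency graph must be.
fn publish_order(deps: &BTreeMap<&str, Vec<&str>>) -> Vec<String> {
    fn visit(
        name: &str,
        deps: &BTreeMap<&str, Vec<&str>>,
        seen: &mut BTreeSet<String>,
        order: &mut Vec<String>,
    ) {
        // Skip crates that were already handled.
        if !seen.insert(name.to_string()) {
            return;
        }
        // Emit all dependencies before the crate itself.
        if let Some(children) = deps.get(name) {
            for child in children {
                visit(child, deps, seen, order);
            }
        }
        order.push(name.to_string());
    }

    let mut seen = BTreeSet::new();
    let mut order = Vec::new();
    for name in deps.keys() {
        visit(name, deps, &mut seen, &mut order);
    }
    order
}

fn main() {
    // Placeholder graph: `types_crate` needs `core_crate`, which needs `derive_crate`.
    let mut deps = BTreeMap::new();
    deps.insert("core_crate", vec!["derive_crate"]);
    deps.insert("types_crate", vec!["core_crate"]);
    println!("{:?}", publish_order(&deps));
}
```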

## TODO

- [ ] Queue a follow-up `[patch]`-removing PR.

[EIP-3030]: https://eips.ethereum.org/EIPS/eip-3030

[Web3Signer]: https://consensys.github.io/web3signer/web3signer-eth2.html

## Issue Addressed

Resolves #2498

## Proposed Changes

Allows the VC to call out to a [Web3Signer] remote signer to obtain signatures.

## Additional Info

### Making Signing Functions `async`

To allow remote signing, I needed to make all the signing functions `async`. This caused a bit of noise where I had to convert iterators into `for` loops.

In `duties_service.rs` there was a particularly tricky case where we couldn't hold a write-lock across an `await`, so I had to first take a read-lock, then grab a write-lock.
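The general shape of that pattern can be sketched with the standard library alone. This is not the `duties_service.rs` code: `slow_sign` is a synchronous stand-in for the awaited signing call, and the cache layout is invented for the example.

```rust
use std::collections::HashMap;
use std::sync::RwLock;

/// Stand-in for a slow signing call. In the async code this would be an
/// `.await` point, across which no lock guard should be held.
fn slow_sign(message: u64) -> u64 {
    message.wrapping_mul(31)
}

/// Hypothetical helper showing the read-then-write locking order.
fn sign_and_store(cache: &RwLock<HashMap<u64, u64>>, key: u64) -> u64 {
    // 1. Short read lock: the guard is dropped at the end of this statement.
    let cached = cache.read().unwrap().get(&key).copied();
    if let Some(signature) = cached {
        return signature;
    }

    // 2. Slow work with no lock held.
    let signature = slow_sign(key);

    // 3. Short write lock to publish the result. Another caller may have
    //    inserted in the meantime, so keep whatever is already there.
    let mut guard = cache.write().unwrap();
    *guard.entry(key).or_insert(signature)
}

fn main() {
    let cache = RwLock::new(HashMap::new());
    println!("first call:  {}", sign_and_store(&cache, 2));
    println!("second call: {}", sign_and_store(&cache, 2));
}
```

The cost of this approach is that two callers can both miss the cache and both do the slow work, which the `entry(..).or_insert(..)` call tolerates.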

### Move Signing from Core Executor

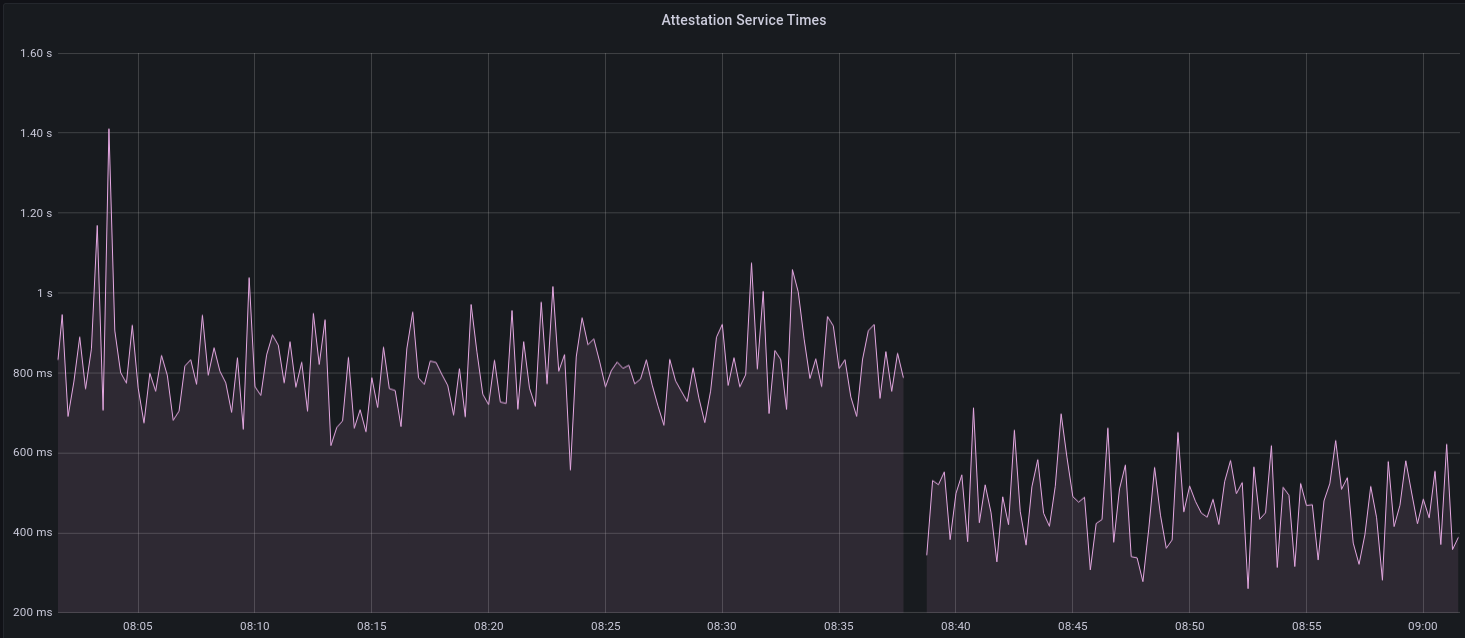

Whilst implementing this feature, I noticed that we signing was happening on the core tokio executor. I suspect this was causing the executor to temporarily lock and occasionally trigger some HTTP timeouts (and potentially SQL pool timeouts, but I can't verify this). Since moving all signing into blocking tokio tasks, I noticed a distinct drop in the "atttestations_http_get" metric on a Prater node:

I think this graph indicates that freeing the core executor allows the VC to operate more smoothly.

### Refactor TaskExecutor