Web3Signer support for VC (#2522)

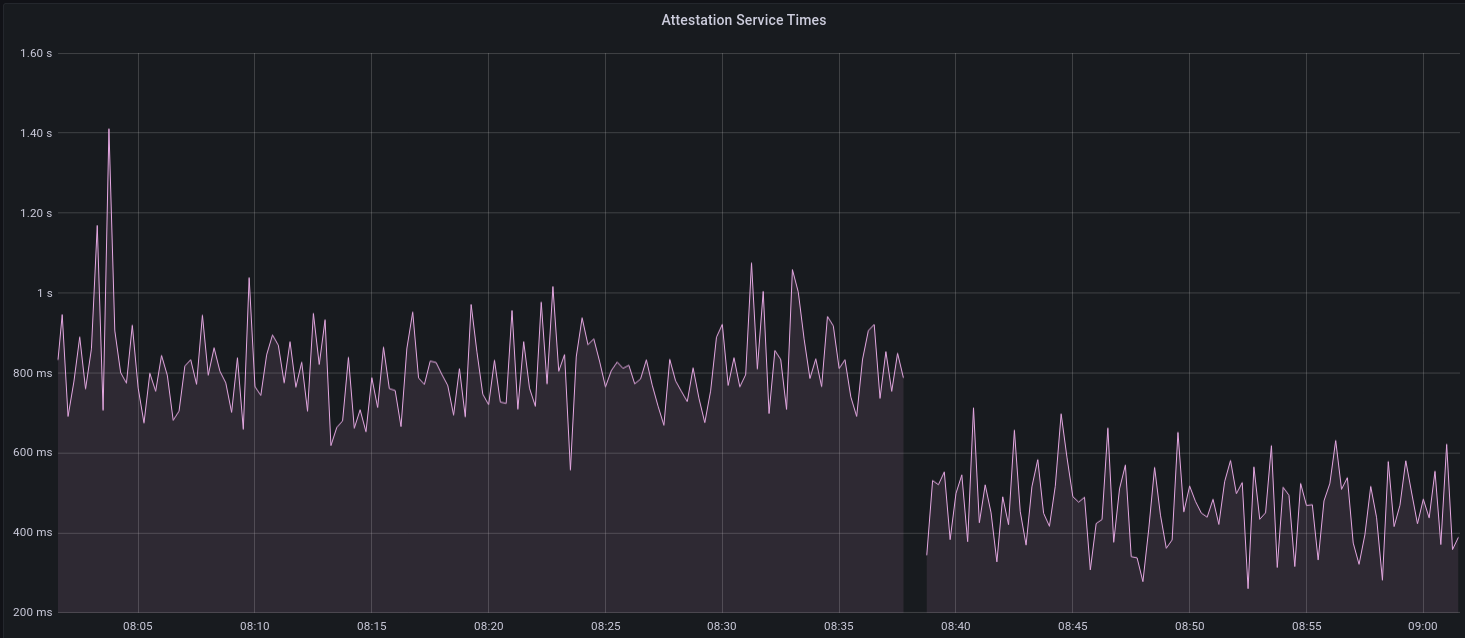

[EIP-3030]: https://eips.ethereum.org/EIPS/eip-3030 [Web3Signer]: https://consensys.github.io/web3signer/web3signer-eth2.html ## Issue Addressed Resolves #2498 ## Proposed Changes Allows the VC to call out to a [Web3Signer] remote signer to obtain signatures. ## Additional Info ### Making Signing Functions `async` To allow remote signing, I needed to make all the signing functions `async`. This caused a bit of noise where I had to convert iterators into `for` loops. In `duties_service.rs` there was a particularly tricky case where we couldn't hold a write-lock across an `await`, so I had to first take a read-lock, then grab a write-lock. ### Move Signing from Core Executor Whilst implementing this feature, I noticed that we signing was happening on the core tokio executor. I suspect this was causing the executor to temporarily lock and occasionally trigger some HTTP timeouts (and potentially SQL pool timeouts, but I can't verify this). Since moving all signing into blocking tokio tasks, I noticed a distinct drop in the "atttestations_http_get" metric on a Prater node:  I think this graph indicates that freeing the core executor allows the VC to operate more smoothly. ### Refactor TaskExecutor I noticed that the `TaskExecutor::spawn_blocking_handle` function would fail to spawn tasks if it were unable to obtain handles to some metrics (this can happen if the same metric is defined twice). It seemed that a more sensible approach would be to keep spawning tasks, but without metrics. To that end, I refactored the function so that it would still function without metrics. There are no other changes made. ## TODO - [x] Restructure to support multiple signing methods. - [x] Add calls to remote signer from VC. 
- [x] Documentation - [x] Test all endpoints - [x] Test HTTPS certificate - [x] Allow adding remote signer validators via the API - [x] Add Altair support via [21.8.1-rc1](https://github.com/ConsenSys/web3signer/releases/tag/21.8.1-rc1) - [x] Create issue to start using latest version of web3signer. (See #2570) ## Notes - ~~Web3Signer doesn't yet support the Altair fork for Prater. See https://github.com/ConsenSys/web3signer/issues/423.~~ - ~~There is not yet a release of Web3Signer which supports Altair blocks. See https://github.com/ConsenSys/web3signer/issues/391.~~
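
The read-lock-then-write-lock approach mentioned under "Making Signing Functions `async`" can be sketched roughly as follows. This is not the code from `duties_service.rs`; it is a minimal, synchronous illustration using `std::sync::RwLock`, and the names `slow_sign` and `sign_missing` as well as the toy "signature" arithmetic are assumptions made for the example. The point is only that no lock is held while the slow signing step runs.

```rust
use std::collections::HashMap;
use std::sync::RwLock;

/// Hypothetical stand-in for a slow signing call (e.g. a request to a remote signer).
fn slow_sign(message: u64) -> u64 {
    message.wrapping_mul(31).wrapping_add(7)
}

/// Fills in every missing signature in `cache` and returns how many were stored.
fn sign_missing(cache: &RwLock<HashMap<u64, Option<u64>>>) -> usize {
    // Step 1: take a short read-lock just to collect the pending work.
    let pending: Vec<u64> = {
        let guard = cache.read().unwrap();
        guard
            .iter()
            .filter(|(_, signature)| signature.is_none())
            .map(|(message, _)| *message)
            .collect()
    }; // The read guard is dropped here, before any slow work starts.

    // Step 2: do the slow work with no lock held.
    let signed: Vec<(u64, u64)> = pending.iter().map(|m| (*m, slow_sign(*m))).collect();

    // Step 3: take a brief write-lock to store the results.
    let mut guard = cache.write().unwrap();
    let mut stored = 0;
    for (message, signature) in signed {
        // The map may have changed between the two locks, so re-check each slot.
        if let Some(slot) = guard.get_mut(&message) {
            if slot.is_none() {
                *slot = Some(signature);
                stored += 1;
            }
        }
    }
    stored
}
```

In an `async` context the same shape applies: the guard from step 1 must be dropped before the `await` in step 2, and the re-check in step 3 covers changes made while no lock was held.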

parent 58012f85e1
commit c5c7476518

27

Cargo.lock

generated


@@ -6972,6 +6972,7 @@ dependencies = [
  "parking_lot",
  "rand 0.7.3",
  "rayon",
+ "reqwest",
  "ring",
  "safe_arith",
  "scrypt",
@@ -6990,6 +6991,7 @@ dependencies = [
  "tokio",
  "tree_hash 0.3.0 (registry+https://github.com/rust-lang/crates.io-index)",
  "types",
+ "url",
  "validator_dir",
  "warp",
  "warp_utils",
@@ -7295,6 +7297,31 @@ dependencies = [
  "url",
 ]

+[[package]]
+name = "web3signer_tests"
+version = "0.1.0"
+dependencies = [
+ "account_utils",
+ "environment",
+ "eth2_keystore",
+ "eth2_network_config",
+ "exit-future",
+ "futures",
+ "reqwest",
+ "serde",
+ "serde_derive",
+ "serde_json",
+ "serde_yaml",
+ "slot_clock",
+ "task_executor",
+ "tempfile",
+ "tokio",
+ "types",
+ "url",
+ "validator_client",
+ "zip",
+]
+
 [[package]]
 name = "webpki"
 version = "0.21.4"

@@ -76,6 +76,7 @@ members = [
     "testing/node_test_rig",
     "testing/simulator",
     "testing/state_transition_vectors",
+    "testing/web3signer_tests",

     "validator_client",
     "validator_client/slashing_protection",

@@ -32,6 +32,7 @@
 * [Advanced Usage](./advanced.md)
     * [Custom Data Directories](./advanced-datadir.md)
     * [Validator Graffiti](./graffiti.md)
+    * [Remote Signing with Web3Signer](./validator-web3signer.md)
     * [Database Configuration](./advanced_database.md)
     * [Advanced Networking](./advanced_networking.md)
     * [Running a Slasher](./slasher.md)

@@ -434,3 +434,43 @@ Typical Responses | 200
     ]
 }
 ```
+
+## `POST /lighthouse/validators/web3signer`
+
+Create any number of new validators, all of which will refer to a
+[Web3Signer](https://docs.web3signer.consensys.net/en/latest/) server for signing.
+
+### HTTP Specification
+
+| Property | Specification |
+| --- |--- |
+Path | `/lighthouse/validators/web3signer`
+Method | POST
+Required Headers | [`Authorization`](./api-vc-auth-header.md)
+Typical Responses | 200, 400
+
+### Example Request Body
+
+```json
+[
+    {
+        "enable": true,
+        "description": "validator_one",
+        "graffiti": "Mr F was here",
+        "voting_public_key": "0xa062f95fee747144d5e511940624bc6546509eeaeae9383257a9c43e7ddc58c17c2bab4ae62053122184c381b90db380",
+        "url": "http://path-to-web3signer.com",
+        "root_certificate_path": "/path/on/vc/filesystem/to/certificate.pem",
+        "request_timeout_ms": 12000
+    }
+]
+```
+
+The following fields may be omitted or nullified to obtain default values:
+
+- `graffiti`
+- `root_certificate_path`
+- `request_timeout_ms`
+
+### Example Response Body
+
+*No data is included in the response body.*
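
To illustrate how the optional fields of the `POST /lighthouse/validators/web3signer` request body can be left out, here is a rough, dependency-free sketch that renders one entry of the request array. The struct `Web3SignerValidator` and the function `to_json` are hypothetical helpers written for this example only; the request type in the diff is serialized with serde, and this sketch performs no string escaping.

```rust
/// Hypothetical mirror of the fields shown in the example request body.
struct Web3SignerValidator<'a> {
    enable: bool,
    description: &'a str,
    graffiti: Option<&'a str>,
    voting_public_key: &'a str,
    url: &'a str,
    root_certificate_path: Option<&'a str>,
    request_timeout_ms: Option<u64>,
}

/// Renders one validator as a JSON object, skipping optional fields that are `None`.
/// NOTE: no string escaping is performed; a real client would use a JSON library.
fn to_json(v: &Web3SignerValidator) -> String {
    let mut fields = vec![
        format!("\"enable\":{}", v.enable),
        format!("\"description\":\"{}\"", v.description),
    ];
    if let Some(graffiti) = v.graffiti {
        fields.push(format!("\"graffiti\":\"{}\"", graffiti));
    }
    fields.push(format!("\"voting_public_key\":\"{}\"", v.voting_public_key));
    fields.push(format!("\"url\":\"{}\"", v.url));
    if let Some(path) = v.root_certificate_path {
        fields.push(format!("\"root_certificate_path\":\"{}\"", path));
    }
    if let Some(timeout) = v.request_timeout_ms {
        fields.push(format!("\"request_timeout_ms\":{}", timeout));
    }
    // `{{` and `}}` are escaped braces in a format string.
    format!("{{{}}}", fields.join(","))
}
```

Wrapping one or more of these objects in `[` and `]` gives a body of the shape the endpoint expects; the `Authorization` header still has to be supplied separately.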

56

book/src/validator-web3signer.md

Normal file


@@ -0,0 +1,56 @@
+# Remote Signing with Web3Signer
+
+[Web3Signer]: https://docs.web3signer.consensys.net/en/latest/
+[Consensys]: https://github.com/ConsenSys/
+[Teku]: https://github.com/consensys/teku
+
+[Web3Signer] is a tool by Consensys which allows *remote signing*. Remote signing is when a
+Validator Client (VC) out-sources the signing of messages to remote server (e.g., via HTTPS). This
+means that the VC does not hold the validator private keys.
+
+## Warnings
+
+Using a remote signer comes with risks, please read the following two warnings before proceeding:
+
+### Remote signing is complex and risky
+
+Remote signing is generally only desirable for enterprise users or users with unique security
+requirements. Most users will find the separation between the Beacon Node (BN) and VC to be
+sufficient *without* introducing a remote signer.
+
+**Using a remote signer introduces a new set of security and slashing risks and should only be
+undertaken by advanced users who fully understand the risks.**
+
+### Web3Signer is not maintained by Lighthouse
+
+The [Web3Signer] tool is maintained by [Consensys], the same team that maintains [Teku]. The
+Lighthouse team (Sigma Prime) does not maintain Web3Signer or make any guarantees about its safety
+or effectiveness.
+
+## Usage
+
+A remote signing validator is added to Lighthouse in much the same way as one that uses a local
+keystore, via the [`validator_definitions.yml`](./validator-management.md) file or via the `POST
+/lighthouse/validators/web3signer` API endpoint.
+
+Here is an example of a `validator_definitions.yml` file containing one validator which uses a
+remote signer:
+
+```yaml
+---
+- enabled: true
+  voting_public_key: "0xa5566f9ec3c6e1fdf362634ebec9ef7aceb0e460e5079714808388e5d48f4ae1e12897fed1bea951c17fa389d511e477"
+  type: web3signer
+  url: "https://my-remote-signer.com:1234"
+  root_certificate_path: /home/paul/my-certificates/my-remote-signer.pem
+```
+
+When using this file, the Lighthouse VC will perform duties for the `0xa5566..` validator and defer
+to the `https://my-remote-signer.com:1234` server to obtain any signatures. It will load a
+"self-signed" SSL certificate from `/home/paul/my-certificates/my-remote-signer.pem` (on the
+filesystem of the VC) to encrypt the communications between the VC and Web3Signer.
+
+> The `request_timeout_ms` key can also be specified. Use this key to override the default timeout
+> with a new timeout in milliseconds. This is the timeout before requests to Web3Signer are
+> considered to be failures. Setting a value that it too-long may create contention and late duties
+> in the VC. Setting it too short will result in failed signatures and therefore missed duties.
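
The `request_timeout_ms` override described in the book page above amounts to "use the configured value if present, otherwise fall back to a default". A minimal sketch of that resolution step is shown here; the function name `effective_timeout` and the fallback constant are assumptions for illustration, and the actual default used by Lighthouse is not stated in this diff excerpt.

```rust
use std::time::Duration;

/// Hypothetical fallback value; the real default is not given in this document.
const ASSUMED_DEFAULT_TIMEOUT: Duration = Duration::from_secs(12);

/// Resolves the timeout for requests to the remote signer.
/// `request_timeout_ms` mirrors the optional key of the same name in the definitions file.
fn effective_timeout(request_timeout_ms: Option<u64>) -> Duration {
    request_timeout_ms
        .map(Duration::from_millis)
        .unwrap_or(ASSUMED_DEFAULT_TIMEOUT)
}
```

For example, `effective_timeout(Some(500))` yields a 500 ms timeout, while `effective_timeout(None)` yields the assumed fallback.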

@@ -61,6 +61,21 @@ pub enum SigningDefinition {
         #[serde(skip_serializing_if = "Option::is_none")]
         voting_keystore_password: Option<ZeroizeString>,
     },
+    /// A validator that defers to a Web3Signer HTTP server for signing.
+    ///
+    /// https://github.com/ConsenSys/web3signer
+    #[serde(rename = "web3signer")]
+    Web3Signer {
+        url: String,
+        /// Path to a .pem file.
+        #[serde(skip_serializing_if = "Option::is_none")]
+        root_certificate_path: Option<PathBuf>,
+        /// Specifies a request timeout.
+        ///
+        /// The timeout is applied from when the request starts connecting until the response body has finished.
+        #[serde(skip_serializing_if = "Option::is_none")]
+        request_timeout_ms: Option<u64>,
+    },
 }

 /// A validator that may be initialized by this validator client.
@@ -116,6 +131,12 @@ impl ValidatorDefinition {
 #[derive(Default, Serialize, Deserialize)]
 pub struct ValidatorDefinitions(Vec<ValidatorDefinition>);

+impl From<Vec<ValidatorDefinition>> for ValidatorDefinitions {
+    fn from(vec: Vec<ValidatorDefinition>) -> Self {
+        Self(vec)
+    }
+}
+
 impl ValidatorDefinitions {
     /// Open an existing file or create a new, empty one if it does not exist.
     pub fn open_or_create<P: AsRef<Path>>(validators_dir: P) -> Result<Self, Error> {
@@ -167,11 +188,13 @@ impl ValidatorDefinitions {
         let known_paths: HashSet<&PathBuf> = self
             .0
             .iter()
-            .map(|def| match &def.signing_definition {
+            .filter_map(|def| match &def.signing_definition {
                 SigningDefinition::LocalKeystore {
                     voting_keystore_path,
                     ..
-                } => voting_keystore_path,
+                } => Some(voting_keystore_path),
+                // A Web3Signer validator does not use a local keystore file.
+                SigningDefinition::Web3Signer { .. } => None,
             })
             .collect();
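
The switch from `map` to `filter_map` in the hunk above is needed because a remote-signer validator has no keystore path to contribute. The standalone sketch below shows the same pattern on a cut-down enum; this `SigningDefinition` is a simplified stand-in for the one in the diff, and `known_keystore_paths` is a hypothetical helper name.

```rust
use std::collections::HashSet;
use std::path::PathBuf;

/// Simplified, hypothetical stand-in for the `SigningDefinition` enum in the diff.
enum SigningDefinition {
    LocalKeystore { voting_keystore_path: PathBuf },
    Web3Signer { url: String },
}

/// Collects the keystore paths of local validators, skipping remote-signer validators.
fn known_keystore_paths(defs: &[SigningDefinition]) -> HashSet<&PathBuf> {
    defs.iter()
        .filter_map(|def| match def {
            SigningDefinition::LocalKeystore {
                voting_keystore_path,
            } => Some(voting_keystore_path),
            // A remote-signer validator has no local keystore file.
            SigningDefinition::Web3Signer { .. } => None,
        })
        .collect()
}
```

With plain `map`, every match arm would have to produce a path, which is not possible for the `Web3Signer` variant.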

@@ -313,6 +313,22 @@ impl ValidatorClientHttpClient {
         self.post(path, &request).await
     }

+    /// `POST lighthouse/validators/web3signer`
+    pub async fn post_lighthouse_validators_web3signer(
+        &self,
+        request: &[Web3SignerValidatorRequest],
+    ) -> Result<GenericResponse<ValidatorData>, Error> {
+        let mut path = self.server.full.clone();
+
+        path.path_segments_mut()
+            .map_err(|()| Error::InvalidUrl(self.server.clone()))?
+            .push("lighthouse")
+            .push("validators")
+            .push("web3signer");
+
+        self.post(path, &request).await
+    }
+
     /// `PATCH lighthouse/validators/{validator_pubkey}`
     pub async fn patch_lighthouse_validators(
         &self,

@@ -2,6 +2,7 @@ use account_utils::ZeroizeString;
 use eth2_keystore::Keystore;
 use graffiti::GraffitiString;
 use serde::{Deserialize, Serialize};
+use std::path::PathBuf;

 pub use crate::lighthouse::Health;
 pub use crate::types::{GenericResponse, VersionData};
@@ -64,3 +65,20 @@ pub struct KeystoreValidatorsPostRequest {
     pub keystore: Keystore,
     pub graffiti: Option<GraffitiString>,
 }
+
+#[derive(Debug, Clone, PartialEq, Serialize, Deserialize)]
+pub struct Web3SignerValidatorRequest {
+    pub enable: bool,
+    pub description: String,
+    #[serde(default)]
+    #[serde(skip_serializing_if = "Option::is_none")]
+    pub graffiti: Option<GraffitiString>,
+    pub voting_public_key: PublicKey,
+    pub url: String,
+    #[serde(default)]
+    #[serde(skip_serializing_if = "Option::is_none")]
+    pub root_certificate_path: Option<PathBuf>,
+    #[serde(default)]
+    #[serde(skip_serializing_if = "Option::is_none")]
+    pub request_timeout_ms: Option<u64>,
+}

@@ -283,6 +283,18 @@ pub fn set_gauge_vec(int_gauge_vec: &Result<IntGaugeVec>, name: &[&str], value:
     }
 }

+pub fn inc_gauge_vec(int_gauge_vec: &Result<IntGaugeVec>, name: &[&str]) {
+    if let Some(gauge) = get_int_gauge(int_gauge_vec, name) {
+        gauge.inc();
+    }
+}
+
+pub fn dec_gauge_vec(int_gauge_vec: &Result<IntGaugeVec>, name: &[&str]) {
+    if let Some(gauge) = get_int_gauge(int_gauge_vec, name) {
+        gauge.dec();
+    }
+}
+
 pub fn set_gauge(gauge: &Result<IntGauge>, value: i64) {
     if let Ok(gauge) = gauge {
         gauge.set(value);

@@ -237,39 +237,33 @@ impl TaskExecutor {
     {
         let log = self.log.clone();

-        if let Some(metric) = metrics::get_histogram(&metrics::BLOCKING_TASKS_HISTOGRAM, &[name]) {
-            if let Some(int_gauge) = metrics::get_int_gauge(&metrics::BLOCKING_TASKS_COUNT, &[name])
-            {
-                let int_gauge_1 = int_gauge;
-                let timer = metric.start_timer();
-                let join_handle = if let Some(runtime) = self.runtime.upgrade() {
-                    runtime.spawn_blocking(task)
-                } else {
-                    debug!(self.log, "Couldn't spawn task. Runtime shutting down");
-                    return None;
-                };
+        let timer = metrics::start_timer_vec(&metrics::BLOCKING_TASKS_HISTOGRAM, &[name]);
+        metrics::inc_gauge_vec(&metrics::BLOCKING_TASKS_COUNT, &[name]);

-                Some(async move {
-                    let result = match join_handle.await {
-                        Ok(result) => {
-                            trace!(log, "Blocking task completed"; "task" => name);
-                            Ok(result)
-                        }
-                        Err(e) => {
-                            debug!(log, "Blocking task ended unexpectedly"; "error" => %e);
-                            Err(e)
-                        }
-                    };
-                    timer.observe_duration();
-                    int_gauge_1.dec();
-                    result
-                })
-            } else {
-                None
-            }
+        let join_handle = if let Some(runtime) = self.runtime.upgrade() {
+            runtime.spawn_blocking(task)
         } else {
-            None
-        }
+            debug!(self.log, "Couldn't spawn task. Runtime shutting down");
+            return None;
+        };
+
+        let future = async move {
+            let result = match join_handle.await {
+                Ok(result) => {
+                    trace!(log, "Blocking task completed"; "task" => name);
+                    Ok(result)
+                }
+                Err(e) => {
+                    debug!(log, "Blocking task ended unexpectedly"; "error" => %e);
+                    Err(e)
+                }
+            };
+            drop(timer);
+            metrics::dec_gauge_vec(&metrics::BLOCKING_TASKS_COUNT, &[name]);
+            result
+        };
+
+        Some(future)
     }

     pub fn runtime(&self) -> Weak<Runtime> {
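
The `TaskExecutor` refactor above changes the behaviour from "no metrics, no task" to "run the task and record metrics only if they are available". The following is a small synchronous sketch of that idea using only the standard library; `Gauge`, `inc_gauge`, `dec_gauge` and `run_with_optional_metrics` are hypothetical names for this example and are not the `lighthouse_metrics` API.

```rust
use std::sync::atomic::{AtomicI64, Ordering};

/// Hypothetical stand-in for a metrics gauge.
struct Gauge(AtomicI64);

/// Increments the gauge if it exists; does nothing when the metric failed to register.
fn inc_gauge(gauge: &Result<Gauge, String>) {
    if let Ok(g) = gauge {
        g.0.fetch_add(1, Ordering::SeqCst);
    }
}

/// Decrements the gauge if it exists; does nothing when the metric failed to register.
fn dec_gauge(gauge: &Result<Gauge, String>) {
    if let Ok(g) = gauge {
        g.0.fetch_sub(1, Ordering::SeqCst);
    }
}

/// Runs `task` whether or not the gauge is available, tracking it while the task is in flight.
fn run_with_optional_metrics<T>(gauge: &Result<Gauge, String>, task: impl FnOnce() -> T) -> T {
    inc_gauge(gauge);
    let result = task();
    dec_gauge(gauge);
    result
}
```

Because the helpers accept a `Result` and silently skip the `Err` case, a metric that could not be registered (for instance because it was defined twice) no longer prevents the task from running.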

@@ -9,7 +9,7 @@ use crate::{test_utils::TestRandom, Hash256, Slot};

 use super::{
     AggregateSignature, AttestationData, BitList, ChainSpec, Domain, EthSpec, Fork, SecretKey,
-    SignedRoot,
+    Signature, SignedRoot,
 };

 #[derive(Debug, PartialEq)]
@@ -60,6 +60,25 @@ impl<T: EthSpec> Attestation<T> {
         fork: &Fork,
         genesis_validators_root: Hash256,
         spec: &ChainSpec,
+    ) -> Result<(), Error> {
+        let domain = spec.get_domain(
+            self.data.target.epoch,
+            Domain::BeaconAttester,
+            fork,
+            genesis_validators_root,
+        );
+        let message = self.data.signing_root(domain);
+
+        self.add_signature(&secret_key.sign(message), committee_position)
+    }
+
+    /// Adds `signature` to `self` and sets the `committee_position`'th bit of `aggregation_bits` to `true`.
+    ///
+    /// Returns an `AlreadySigned` error if the `committee_position`'th bit is already `true`.
+    pub fn add_signature(
+        &mut self,
+        signature: &Signature,
+        committee_position: usize,
     ) -> Result<(), Error> {
         if self
             .aggregation_bits
@@ -72,15 +91,7 @@ impl<T: EthSpec> Attestation<T> {
                 .set(committee_position, true)
                 .map_err(Error::SszTypesError)?;

-            let domain = spec.get_domain(
-                self.data.target.epoch,
-                Domain::BeaconAttester,
-                fork,
-                genesis_validators_root,
-            );
-            let message = self.data.signing_root(domain);
-
-            self.signature.add_assign(&secret_key.sign(message));
+            self.signature.add_assign(signature);

             Ok(())
         }
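
The attestation change above separates producing a signature from adding it to the aggregate, so that a signature obtained elsewhere (for example from a remote signer) can be added without a local secret key. The toy model below sketches that split. `ToyAttestation`, its `u64` "signatures", and the use of XOR in place of BLS signing and aggregation are all assumptions made purely for illustration.

```rust
#[derive(Debug, PartialEq)]
enum Error {
    AlreadySigned(usize),
    OutOfBounds(usize),
}

/// Toy aggregate: XOR stands in for real signature aggregation (illustration only).
struct ToyAttestation {
    aggregation_bits: Vec<bool>,
    signature: u64,
}

impl ToyAttestation {
    /// Local signing path: produce a (toy) signature, then delegate to `add_signature`.
    fn sign(&mut self, secret_key: u64, message: u64, committee_position: usize) -> Result<(), Error> {
        let signature = secret_key ^ message;
        self.add_signature(signature, committee_position)
    }

    /// Adds an already-produced signature, regardless of where it was created.
    fn add_signature(&mut self, signature: u64, committee_position: usize) -> Result<(), Error> {
        let bit = self
            .aggregation_bits
            .get_mut(committee_position)
            .ok_or(Error::OutOfBounds(committee_position))?;
        if *bit {
            return Err(Error::AlreadySigned(committee_position));
        }
        *bit = true;
        self.signature ^= signature;
        Ok(())
    }
}
```

The local path (`sign`) and an external path (calling `add_signature` directly) share the same bookkeeping, which is the property the refactor in the diff relies on.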

@@ -12,6 +12,7 @@ use tree_hash_derive::TreeHash;
 )]
 pub struct SyncAggregatorSelectionData {
     pub slot: Slot,
+    #[serde(with = "eth2_serde_utils::quoted_u64")]
     pub subcommittee_index: u64,
 }

35

testing/web3signer_tests/Cargo.toml

Normal file


@@ -0,0 +1,35 @@
+[package]
+name = "web3signer_tests"
+version = "0.1.0"
+edition = "2018"
+
+build = "build.rs"
+
+# See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html
+
+[dependencies]
+
+[dev-dependencies]
+eth2_keystore = { path = "../../crypto/eth2_keystore" }
+types = { path = "../../consensus/types" }
+tempfile = "3.1.0"
+tokio = { version = "1.10.0", features = ["rt-multi-thread", "macros"] }
+reqwest = { version = "0.11.0", features = ["json","stream"] }
+url = "2.2.2"
+validator_client = { path = "../../validator_client" }
+slot_clock = { path = "../../common/slot_clock" }
+futures = "0.3.7"
+exit-future = "0.2.0"
+task_executor = { path = "../../common/task_executor" }
+environment = { path = "../../lighthouse/environment" }
+account_utils = { path = "../../common/account_utils" }
+serde = "1.0.116"
+serde_derive = "1.0.116"
+serde_yaml = "0.8.13"
+eth2_network_config = { path = "../../common/eth2_network_config" }
+
+[build-dependencies]
+tokio = { version = "1.10.0", features = ["rt-multi-thread", "macros"] }
+reqwest = { version = "0.11.0", features = ["json","stream"] }
+serde_json = "1.0.58"
+zip = "0.5.13"

100

testing/web3signer_tests/build.rs

Normal file


@@ -0,0 +1,100 @@
+//! This build script downloads the latest Web3Signer release and places it in the `OUT_DIR` so it
+//! can be used for integration testing.
+
+use reqwest::Client;
+use serde_json::Value;
+use std::env;
+use std::fs;
+use std::path::PathBuf;
+use zip::ZipArchive;
+
+/// Use `None` to download the latest Github release.
+/// Use `Some("21.8.1")` to download a specific version.
+const FIXED_VERSION_STRING: Option<&str> = None;
+
+#[tokio::main]
+async fn main() {
+    let out_dir = env::var("OUT_DIR").unwrap();
+    download_binary(out_dir.into()).await;
+}
+
+pub async fn download_binary(dest_dir: PathBuf) {
+    let version_file = dest_dir.join("version");
+
+    let client = Client::builder()
+        // Github gives a 403 without a user agent.
+        .user_agent("web3signer_tests")
+        .build()
+        .unwrap();
+
+    let version = if let Some(version) = FIXED_VERSION_STRING {
+        version.to_string()
+    } else {
+        // Get the latest release of the web3 signer repo.
+        let latest_response: Value = client
+            .get("https://api.github.com/repos/ConsenSys/web3signer/releases/latest")
+            .send()
+            .await
+            .unwrap()
+            .error_for_status()
+            .unwrap()
+            .json()
+            .await
+            .unwrap();
+        latest_response
+            .get("tag_name")
+            .unwrap()
+            .as_str()
+            .unwrap()
+            .to_string()
+    };
+
+    if version_file.exists() && fs::read(&version_file).unwrap() == version.as_bytes() {
+        // The latest version is already downloaded, do nothing.
+        return;
+    } else {
+        // Ignore the result since we don't care if the version file already exists.
+        let _ = fs::remove_file(&version_file);
+    }
+
+    // Download the latest release zip.
+    let zip_url = format!("https://artifacts.consensys.net/public/web3signer/raw/names/web3signer.zip/versions/{}/web3signer-{}.zip", version, version);
+    let zip_response = client
+        .get(zip_url)
+        .send()
+        .await
+        .unwrap()
+        .error_for_status()
+        .unwrap()
+        .bytes()
+        .await
+        .unwrap();
+
+    // Write the zip to a file.
+    let zip_path = dest_dir.join(format!("{}.zip", version));
+    fs::write(&zip_path, zip_response).unwrap();
+    // Unzip the zip.
+    let mut zip_file = fs::File::open(&zip_path).unwrap();
+    ZipArchive::new(&mut zip_file)
+        .unwrap()
+        .extract(&dest_dir)
+        .unwrap();
+
+    // Rename the web3signer directory so it doesn't include the version string. This ensures the
+    // path to the binary is predictable.
+    let web3signer_dir = dest_dir.join("web3signer");
+    if web3signer_dir.exists() {
+        fs::remove_dir_all(&web3signer_dir).unwrap();
+    }
+    fs::rename(
+        dest_dir.join(format!("web3signer-{}", version)),
+        web3signer_dir,
+    )
+    .unwrap();
+
+    // Delete zip and unzipped dir.
+    fs::remove_file(&zip_path).unwrap();
+
+    // Update the version file to avoid duplicate downloads.
+    fs::write(&version_file, version).unwrap();
+}
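
The build script above avoids repeated downloads by recording the fetched version in a `version` file. That caching step can be isolated as in the sketch below, which uses only the standard library. `is_cached` and `ensure_version` are hypothetical helper names, and the `download` closure stands in for the HTTP and unzip work done by the real script.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Returns `true` when `version_file` exists and already records `version`.
fn is_cached(version_file: &Path, version: &str) -> bool {
    fs::read(version_file)
        .map(|bytes| bytes == version.as_bytes())
        .unwrap_or(false)
}

/// Runs `download` only when the recorded version differs, then records the new version.
/// Returns whether a download happened.
fn ensure_version(
    version_file: &Path,
    version: &str,
    download: impl FnOnce() -> io::Result<()>,
) -> io::Result<bool> {
    if is_cached(version_file, version) {
        return Ok(false);
    }
    // Remove any stale marker first so that a failed download is not mistaken for a success.
    let _ = fs::remove_file(version_file);
    download()?;
    // Only write the marker once the download has completed.
    fs::write(version_file, version)?;
    Ok(true)
}
```

Writing the marker last means an interrupted run leaves no `version` file behind, so the next build retries the download.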

589

testing/web3signer_tests/src/lib.rs

Normal file


@@ -0,0 +1,589 @@
+//! This crate provides a series of integration tests between the Lighthouse `ValidatorStore` and
+//! Web3Signer by Consensys.
+//!
+//! These tests aim to ensure that:
+//!
+//! - Lighthouse can issue valid requests to Web3Signer.
+//! - The signatures generated by Web3Signer are identical to those which Lighthouse generates.
+//!
+//! There is a build script in this crate which obtains the latest version of Web3Signer and makes
+//! it available via the `OUT_DIR`.
+
+#[cfg(all(test, unix, not(debug_assertions)))]
+mod tests {
+    use account_utils::validator_definitions::{
+        SigningDefinition, ValidatorDefinition, ValidatorDefinitions,
+    };
+    use eth2_keystore::KeystoreBuilder;
+    use eth2_network_config::Eth2NetworkConfig;
+    use reqwest::Client;
+    use serde::Serialize;
+    use slot_clock::{SlotClock, TestingSlotClock};
+    use std::env;
+    use std::fmt::Debug;
+    use std::fs::{self, File};
+    use std::future::Future;
+    use std::path::PathBuf;
+    use std::process::{Child, Command, Stdio};
+    use std::sync::Arc;
+    use std::time::{Duration, Instant};
+    use task_executor::TaskExecutor;
+    use tempfile::TempDir;
+    use tokio::time::sleep;
+    use types::*;
+    use url::Url;
+    use validator_client::{
+        initialized_validators::{load_pem_certificate, InitializedValidators},
+        validator_store::ValidatorStore,
+        SlashingDatabase, SLASHING_PROTECTION_FILENAME,
+    };
+
+    /// If the we are unable to reach the Web3Signer HTTP API within this time out then we will
+    /// assume it failed to start.
+    const UPCHECK_TIMEOUT: Duration = Duration::from_secs(20);
+
+    /// Set to `true` to send the Web3Signer logs to the console during tests. Logs are useful when
+    /// debugging.
+    const SUPPRESS_WEB3SIGNER_LOGS: bool = true;
+
+    type E = MainnetEthSpec;
+
+    /// This marker trait is implemented for objects that we wish to compare to ensure Web3Signer
+    /// and Lighthouse agree on signatures.
+    ///
+    /// The purpose of this trait is to prevent accidentally comparing useless values like `()`.
+    trait SignedObject: PartialEq + Debug {}
+
+    impl SignedObject for Signature {}
+    impl SignedObject for Attestation<E> {}
+    impl SignedObject for SignedBeaconBlock<E> {}
+    impl SignedObject for SignedAggregateAndProof<E> {}
+    impl SignedObject for SelectionProof {}
+    impl SignedObject for SyncSelectionProof {}
+    impl SignedObject for SyncCommitteeMessage {}
+    impl SignedObject for SignedContributionAndProof<E> {}
+
+    /// A file format used by Web3Signer to discover and unlock keystores.
+    #[derive(Serialize)]
+    struct Web3SignerKeyConfig {
+        #[serde(rename = "type")]
+        config_type: String,
+        #[serde(rename = "keyType")]
+        key_type: String,
+        #[serde(rename = "keystoreFile")]
+        keystore_file: String,
+        #[serde(rename = "keystorePasswordFile")]
+        keystore_password_file: String,
+    }
+
+    const KEYSTORE_PASSWORD: &str = "hi mum";
+    const WEB3SIGNER_LISTEN_ADDRESS: &str = "127.0.0.1";
+
+    /// A deterministic, arbitrary keypair.
+    fn testing_keypair() -> Keypair {
+        // Just an arbitrary secret key.
+        let sk = SecretKey::deserialize(&[
+            85, 40, 245, 17, 84, 193, 234, 155, 24, 234, 181, 58, 171, 193, 209, 164, 120, 147, 10,
+            174, 189, 228, 119, 48, 181, 19, 117, 223, 2, 240, 7, 108,
+        ])
+        .unwrap();
+        let pk = sk.public_key();
+        Keypair::from_components(pk, sk)
+    }
+
+    /// The location of the Web3Signer binary generated by the build script.
+    fn web3signer_binary() -> PathBuf {
+        PathBuf::from(env::var("OUT_DIR").unwrap())
+            .join("web3signer")
+            .join("bin")
+            .join("web3signer")
+    }
+
+    /// The location of a directory where we keep some files for testing TLS.
+    fn tls_dir() -> PathBuf {
+        PathBuf::from(env::var("CARGO_MANIFEST_DIR").unwrap()).join("tls")
+    }
+
+    fn root_certificate_path() -> PathBuf {
+        tls_dir().join("cert.pem")
+    }
+
+    /// A testing rig which holds a live Web3Signer process.
+    struct Web3SignerRig {
+        keypair: Keypair,
+        _keystore_dir: TempDir,
+        keystore_path: PathBuf,
+        web3signer_child: Child,
+        http_client: Client,
+        url: Url,
+    }
+
+    impl Drop for Web3SignerRig {
+        fn drop(&mut self) {
+            self.web3signer_child.kill().unwrap();
+        }
+    }
+

impl Web3SignerRig {

|

||||

pub async fn new(network: &str, listen_address: &str, listen_port: u16) -> Self {

|

||||

let keystore_dir = TempDir::new().unwrap();

|

||||

let keypair = testing_keypair();

|

||||

let keystore =

|

||||

KeystoreBuilder::new(&keypair, KEYSTORE_PASSWORD.as_bytes(), "".to_string())

|

||||

.unwrap()

|

||||

.build()

|

||||

.unwrap();

|

||||

let keystore_filename = "keystore.json";

|

||||

let keystore_path = keystore_dir.path().join(keystore_filename);

|

||||

let keystore_file = File::create(&keystore_path).unwrap();

|

||||

keystore.to_json_writer(&keystore_file).unwrap();

|

||||

|

||||

let keystore_password_filename = "password.txt";

|

||||

let keystore_password_path = keystore_dir.path().join(keystore_password_filename);

|

||||

fs::write(&keystore_password_path, KEYSTORE_PASSWORD.as_bytes()).unwrap();

|

||||

|

||||

let key_config = Web3SignerKeyConfig {

|

||||

config_type: "file-keystore".to_string(),

|

||||

key_type: "BLS".to_string(),

|

||||

keystore_file: keystore_filename.to_string(),

|

||||

keystore_password_file: keystore_password_filename.to_string(),

|

||||

};

|

||||

let key_config_file =

|

||||

File::create(&keystore_dir.path().join("key-config.yaml")).unwrap();

|

||||

serde_yaml::to_writer(key_config_file, &key_config).unwrap();

|

||||

|

||||

let tls_keystore_file = tls_dir().join("key.p12");

|

||||

let tls_keystore_password_file = tls_dir().join("password.txt");

|

||||

|

||||

let stdio = || {

|

||||

if SUPPRESS_WEB3SIGNER_LOGS {

|

||||

Stdio::null()

|

||||

} else {

|

||||

Stdio::inherit()

|

||||

}

|

||||

};

|

||||

|

||||

let web3signer_child = Command::new(web3signer_binary())

|

||||

.arg(format!(

|

||||

"--key-store-path={}",

|

||||

keystore_dir.path().to_str().unwrap()

|

||||

))

|

||||

.arg(format!("--http-listen-host={}", listen_address))

|

||||

.arg(format!("--http-listen-port={}", listen_port))

|

||||

.arg("--tls-allow-any-client=true")

|

||||

.arg(format!(

|

||||

"--tls-keystore-file={}",

|

||||

tls_keystore_file.to_str().unwrap()

|

||||

))

|

||||

.arg(format!(

|

||||

"--tls-keystore-password-file={}",

|

||||

tls_keystore_password_file.to_str().unwrap()

|

||||

))

|

||||

.arg("eth2")

|

||||

.arg(format!("--network={}", network))

|

||||

.arg("--slashing-protection-enabled=false")

|

||||

.stdout(stdio())

|

||||

.stderr(stdio())

|

||||

.spawn()

|

||||

.unwrap();

|

||||

|

||||

let url = Url::parse(&format!("https://{}:{}", listen_address, listen_port)).unwrap();

|

||||

|

||||

let certificate = load_pem_certificate(root_certificate_path()).unwrap();

|

||||

let http_client = Client::builder()

|

||||

.add_root_certificate(certificate)

|

||||

.build()

|

||||

.unwrap();

|

||||

|

||||

let s = Self {

|

||||

keypair,

|

||||

_keystore_dir: keystore_dir,

|

||||

keystore_path,

|

||||

web3signer_child,

|

||||

http_client,

|

||||

url,

|

||||

};

|

||||

|

||||

s.wait_until_up(UPCHECK_TIMEOUT).await;

|

||||

|

||||

s

|

||||

}

|

||||

|

||||

pub async fn wait_until_up(&self, timeout: Duration) {
    let start = Instant::now();
    loop {
        if self.upcheck().await.is_ok() {
            return;
        } else if Instant::now().duration_since(start) > timeout {
            panic!("upcheck failed with timeout {:?}", timeout)
        } else {
            sleep(Duration::from_secs(1)).await;
        }
    }
}

pub async fn upcheck(&self) -> Result<(), ()> {
    let url = self.url.join("upcheck").unwrap();
    self.http_client
        .get(url)
        .send()
        .await
        .map_err(|_| ())?
        .error_for_status()
        .map(|_| ())
        .map_err(|_| ())
}

|

||||

}
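The `wait_until_up` loop above follows a common poll-until-ready pattern: try a health check, give up once a deadline has passed, otherwise sleep and retry. The following is a minimal synchronous sketch of that pattern using only the standard library. The function name `wait_until_ok`, the `interval` parameter and the attempt counter are illustrative assumptions and are not part of this PR, which uses `tokio::time::sleep` and panics on timeout instead of returning an error.

```rust
use std::thread::sleep;
use std::time::{Duration, Instant};

/// Hypothetical helper: polls `check` until it succeeds or `timeout` elapses.
///
/// Returns the number of attempts made on success, or `Err(())` on timeout.
fn wait_until_ok<F>(mut check: F, timeout: Duration, interval: Duration) -> Result<u32, ()>
where
    F: FnMut() -> Result<(), ()>,
{
    let start = Instant::now();
    let mut attempts = 0;
    loop {
        attempts += 1;
        if check().is_ok() {
            return Ok(attempts);
        }
        // Give up once the deadline has passed; the check always runs at least once.
        if start.elapsed() > timeout {
            return Err(());
        }
        sleep(interval);
    }
}
```

Returning a `Result` rather than panicking leaves the decision to the caller; in a test rig, unwrapping the result would give roughly the same behaviour as the `panic!` in `wait_until_up`.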

|

||||

|

||||

/// A testing rig which holds a `ValidatorStore`.

|

||||

struct ValidatorStoreRig {

|

||||

validator_store: Arc<ValidatorStore<TestingSlotClock, E>>,

|

||||

_validator_dir: TempDir,

|

||||

runtime: Arc<tokio::runtime::Runtime>,

|

||||

_runtime_shutdown: exit_future::Signal,

|

||||

}

|

||||

|

||||

impl ValidatorStoreRig {

|

||||

pub async fn new(validator_definitions: Vec<ValidatorDefinition>, spec: ChainSpec) -> Self {

|

||||

let log = environment::null_logger().unwrap();

|

||||

let validator_dir = TempDir::new().unwrap();

|

||||

|

||||

let validator_definitions = ValidatorDefinitions::from(validator_definitions);

|

||||

let initialized_validators = InitializedValidators::from_definitions(

|

||||

validator_definitions,

|

||||

validator_dir.path().into(),

|

||||

log.clone(),

|

||||

)

|

||||

.await

|

||||

.unwrap();

|

||||

|

||||

let voting_pubkeys: Vec<_> = initialized_validators.iter_voting_pubkeys().collect();

|

||||

|

||||

let runtime = Arc::new(

|

||||

tokio::runtime::Builder::new_multi_thread()

|

||||

.enable_all()

|

||||

.build()

|

||||

.unwrap(),

|

||||

);

|

||||

let (runtime_shutdown, exit) = exit_future::signal();

|

||||

let (shutdown_tx, _) = futures::channel::mpsc::channel(1);

|

||||

let executor =

|

||||

TaskExecutor::new(Arc::downgrade(&runtime), exit, log.clone(), shutdown_tx);

|

||||

|

||||

let slashing_db_path = validator_dir.path().join(SLASHING_PROTECTION_FILENAME);

|

||||

let slashing_protection = SlashingDatabase::open_or_create(&slashing_db_path).unwrap();

|

||||

slashing_protection

|

||||

.register_validators(voting_pubkeys.iter().copied())

|

||||

.unwrap();

|

||||

|

||||

let slot_clock =

|

||||

TestingSlotClock::new(Slot::new(0), Duration::from_secs(0), Duration::from_secs(1));

|

||||

|

||||

let validator_store = ValidatorStore::<_, E>::new(

|

||||

initialized_validators,

|

||||

slashing_protection,

|

||||

Hash256::repeat_byte(42),

|

||||

spec,

|

||||

None,

|

||||

slot_clock,

|

||||

executor,

|

||||

log.clone(),

|

||||

);

|

||||

|

||||

Self {

|

||||

validator_store: Arc::new(validator_store),

|

||||

_validator_dir: validator_dir,

|

||||

runtime,

|

||||

_runtime_shutdown: runtime_shutdown,

|

||||

}

|

||||

}

|

||||

|

||||

pub fn shutdown(self) {

|

||||

Arc::try_unwrap(self.runtime).unwrap().shutdown_background()

|

||||

}

|

||||

}

|

||||

|

||||

/// A testing rig which holds multiple `ValidatorStore` rigs and one `Web3Signer` rig.

|

||||

///

|

||||

/// The intent of this rig is to allow testing a `ValidatorStore` using `Web3Signer` against

|

||||

/// another `ValidatorStore` using a local keystore and ensure that both `ValidatorStore`s

|

||||

/// behave identically.

|

||||

struct TestingRig {

|

||||

_signer_rig: Web3SignerRig,

|

||||

validator_rigs: Vec<ValidatorStoreRig>,

|

||||

validator_pubkey: PublicKeyBytes,

|

||||

}

|

||||

|

||||

impl Drop for TestingRig {

|

||||

fn drop(&mut self) {

|

||||

for rig in std::mem::take(&mut self.validator_rigs) {

|

||||

rig.shutdown();

|

||||

}

|

||||

}

|

||||

}
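The `Drop` implementation above has to call `shutdown(self)`, which consumes each rig, while `drop` only receives `&mut self`. `std::mem::take` resolves this by swapping an empty `Vec` into the field and handing back the original by value. A minimal sketch of the same technique follows; the `Worker` and `Pool` types and the shutdown counter are hypothetical stand-ins, not types from this PR.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;

/// Hypothetical resource whose shutdown method consumes it.
struct Worker {
    shutdowns: Arc<AtomicUsize>,
}

impl Worker {
    fn shutdown(self) {
        // Record that this worker was shut down.
        self.shutdowns.fetch_add(1, Ordering::SeqCst);
    }
}

/// Hypothetical owner of several workers.
struct Pool {
    workers: Vec<Worker>,
}

impl Drop for Pool {
    fn drop(&mut self) {
        // `drop` only has `&mut self`, so move the workers out by leaving an
        // empty `Vec` in their place, then consume each one.
        for worker in std::mem::take(&mut self.workers) {
            worker.shutdown();
        }
    }
}
```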

|

||||

|

||||

impl TestingRig {

|

||||

pub async fn new(network: &str, spec: ChainSpec, listen_port: u16) -> Self {

|

||||

let signer_rig =

|

||||

Web3SignerRig::new(network, WEB3SIGNER_LISTEN_ADDRESS, listen_port).await;

|

||||

let validator_pubkey = signer_rig.keypair.pk.clone();

|

||||

|

||||

let local_signer_validator_store = {

|

||||

let validator_definition = ValidatorDefinition {

|

||||

enabled: true,

|

||||

voting_public_key: validator_pubkey.clone(),

|

||||

graffiti: None,

|

||||

description: String::default(),

|

||||

signing_definition: SigningDefinition::LocalKeystore {

|

||||

voting_keystore_path: signer_rig.keystore_path.clone(),

|

||||

voting_keystore_password_path: None,

|

||||

voting_keystore_password: Some(KEYSTORE_PASSWORD.to_string().into()),

|

||||

},

|

||||

};

|

||||

ValidatorStoreRig::new(vec![validator_definition], spec.clone()).await

|

||||

};

|

||||

|

||||

let remote_signer_validator_store = {

|

||||

let validator_definition = ValidatorDefinition {

|

||||

enabled: true,

|

||||

voting_public_key: validator_pubkey.clone(),

|

||||

graffiti: None,

|

||||

description: String::default(),

|

||||

signing_definition: SigningDefinition::Web3Signer {

|

||||

url: signer_rig.url.to_string(),

|

||||

root_certificate_path: Some(root_certificate_path()),

|

||||

request_timeout_ms: None,

|

||||

},

|

||||

};

|

||||

ValidatorStoreRig::new(vec![validator_definition], spec).await

|

||||

};

|

||||

|

||||

Self {

|

||||

_signer_rig: signer_rig,

|

||||

validator_rigs: vec![local_signer_validator_store, remote_signer_validator_store],

|

||||

validator_pubkey: PublicKeyBytes::from(&validator_pubkey),

|

||||

}

|

||||

}

|

||||

|

||||

/// Run the `generate_sig` function across all validator stores on `self` and assert that

|

||||

/// they all return the same value.

|

||||

pub async fn assert_signatures_match<F, R, S>(

|

||||

self,

|

||||

case_name: &str,

|

||||

generate_sig: F,

|

||||

) -> Self

|

||||

where

|

||||

F: Fn(PublicKeyBytes, Arc<ValidatorStore<TestingSlotClock, E>>) -> R,

|

||||

R: Future<Output = S>,

|

||||

// We use the `SignedObject` trait to white-list objects for comparison. This avoids

|

||||

// accidentally comparing something meaningless like a `()`.

|

||||

S: SignedObject,

|

||||

{

|

||||

let mut prev_signature = None;

|

||||

for (i, validator_rig) in self.validator_rigs.iter().enumerate() {

|

||||

let signature =

|

||||

generate_sig(self.validator_pubkey, validator_rig.validator_store.clone())

|

||||

.await;

|

||||

|

||||

if let Some(prev_signature) = &prev_signature {

|

||||

assert_eq!(

|

||||

prev_signature, &signature,

|

||||

"signature mismatch at index {} for case {}",

|

||||

i, case_name

|

||||

);

|

||||

}

|

||||

|

||||

prev_signature = Some(signature)

|

||||

}

|

||||

assert!(prev_signature.is_some(), "sanity check");

|

||||

self

|

||||

}

|

||||

}
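`assert_signatures_match` is a form of differential testing: the same operation runs against each backend (local keystore and Web3Signer) and each result is compared with the previous one. The sketch below shows the core loop in synchronous form with generic outputs. The name `assert_outputs_match` and its signature are assumptions made for illustration; the real function is `async`, takes the validator public key, and restricts outputs to the `SignedObject` trait.

```rust
use std::fmt::Debug;

/// Hypothetical helper: runs `generate` against every backend and panics if
/// two consecutive outputs differ. Returns the shared output.
fn assert_outputs_match<B, T, F>(case_name: &str, backends: &[B], generate: F) -> T
where
    T: PartialEq + Debug,
    F: Fn(&B) -> T,
{
    let mut prev: Option<T> = None;
    for (i, backend) in backends.iter().enumerate() {
        let output = generate(backend);
        if let Some(prev) = &prev {
            assert_eq!(
                prev, &output,
                "output mismatch at index {} for case {}",
                i, case_name
            );
        }
        prev = Some(output);
    }
    // Mirrors the "sanity check" in the original: an empty backend list would
    // otherwise pass without comparing anything.
    prev.expect("at least one backend is required")
}
```

Comparing each output only with its predecessor is enough to establish that all outputs are equal, since equality is transitive.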

|

||||

|

||||

/// Get a generic, arbitrary attestation for signing.

|

||||

fn get_attestation() -> Attestation<E> {

|

||||

Attestation {

|

||||

aggregation_bits: BitList::with_capacity(1).unwrap(),

|

||||

data: AttestationData {

|

||||

slot: <_>::default(),

|

||||

index: <_>::default(),

|

||||

beacon_block_root: <_>::default(),

|

||||

source: Checkpoint {

|

||||

epoch: <_>::default(),

|

||||

root: <_>::default(),

|

||||

},

|

||||

target: Checkpoint {

|

||||

epoch: <_>::default(),

|

||||

root: <_>::default(),

|

||||

},

|

||||

},

|

||||

signature: AggregateSignature::empty(),

|

||||

}

|

||||

}

|

||||

|

||||

/// Test all the "base" (phase 0) types.

|

||||

async fn test_base_types(network: &str, listen_port: u16) {

|

||||

let network_config = Eth2NetworkConfig::constant(network).unwrap().unwrap();

|

||||

let spec = &network_config.chain_spec::<E>().unwrap();

|

||||

|

||||

TestingRig::new(network, spec.clone(), listen_port)

|

||||

.await

|

||||

.assert_signatures_match("randao_reveal", |pubkey, validator_store| async move {

|

||||

validator_store

|

||||

.randao_reveal(pubkey, Epoch::new(0))

|

||||

.await

|

||||

.unwrap()

|

||||

})

|

||||

.await

|

||||

.assert_signatures_match("beacon_block_base", |pubkey, validator_store| async move {

|

||||

let block = BeaconBlock::Base(BeaconBlockBase::empty(spec));

|

||||

let block_slot = block.slot();

|

||||

validator_store

|

||||

.sign_block(pubkey, block, block_slot)

|

||||

.await

|

||||

.unwrap()

|

||||

})

|

||||

.await

|

||||

.assert_signatures_match("attestation", |pubkey, validator_store| async move {

|

||||

let mut attestation = get_attestation();

|

||||

validator_store

|

||||

.sign_attestation(pubkey, 0, &mut attestation, Epoch::new(0))

|

||||

.await

|

||||

.unwrap();

|

||||

attestation

|

||||

})

|

||||

.await

|

||||

.assert_signatures_match("signed_aggregate", |pubkey, validator_store| async move {

|

||||

let attestation = get_attestation();

|

||||

validator_store

|

||||

.produce_signed_aggregate_and_proof(

|

||||

pubkey,

|

||||

0,

|

||||

attestation,

|

||||

SelectionProof::from(Signature::empty()),

|

||||

)

|

||||

.await

|

||||

.unwrap()

|

||||

})

|

||||

.await

|

||||

.assert_signatures_match("selection_proof", |pubkey, validator_store| async move {

|

||||

validator_store

|

||||

.produce_selection_proof(pubkey, Slot::new(0))

|

||||

.await

|

||||

.unwrap()

|

||||

})

|

||||

.await;

|

||||

}

|

||||

|

||||

/// Test all the Altair types.

|

||||

async fn test_altair_types(network: &str, listen_port: u16) {

|

||||

let network_config = Eth2NetworkConfig::constant(network).unwrap().unwrap();

|

||||

let spec = &network_config.chain_spec::<E>().unwrap();

|

||||

let altair_fork_slot = spec

|

||||

.altair_fork_epoch

|

||||

.unwrap()

|

||||

.start_slot(E::slots_per_epoch());

|

||||

|

||||

TestingRig::new(network, spec.clone(), listen_port)

|

||||

.await

|

||||

.assert_signatures_match(

|

||||

"beacon_block_altair",

|

||||

|pubkey, validator_store| async move {

|

||||

let mut altair_block = BeaconBlockAltair::empty(spec);

|

||||

altair_block.slot = altair_fork_slot;

|

||||

validator_store

|

||||

.sign_block(pubkey, BeaconBlock::Altair(altair_block), altair_fork_slot)

|

||||

.await

|

||||

.unwrap()

|

||||

},

|

||||

)

|

||||

.await

|

||||

.assert_signatures_match(

|

||||

"sync_selection_proof",

|

||||

|pubkey, validator_store| async move {

|

||||

validator_store

|

||||

.produce_sync_selection_proof(

|

||||

&pubkey,

|

||||

altair_fork_slot,

|

||||

SyncSubnetId::from(0),

|

||||

)

|

||||

.await

|

||||

.unwrap()

|

||||

},

|

||||

)

|

||||

.await

|

||||

.assert_signatures_match(

|

||||

"sync_committee_signature",

|

||||

|pubkey, validator_store| async move {

|

||||

validator_store

|

||||

.produce_sync_committee_signature(

|

||||

altair_fork_slot,

|

||||

Hash256::zero(),

|

||||

0,

|

||||

&pubkey,

|

||||

)

|

||||

.await

|

||||

.unwrap()

|

||||

},

|

||||

)

|

||||

.await

|

||||

.assert_signatures_match(

|

||||

"signed_contribution_and_proof",

|

||||

|pubkey, validator_store| async move {

|

||||

let contribution = SyncCommitteeContribution {

|

||||

slot: altair_fork_slot,

|

||||

beacon_block_root: <_>::default(),

|

||||

subcommittee_index: <_>::default(),

|

||||

aggregation_bits: <_>::default(),

|

||||

signature: AggregateSignature::empty(),

|

||||

};

|

||||

validator_store

|

||||

.produce_signed_contribution_and_proof(

|

||||

0,

|

||||

pubkey,

|

||||

contribution,

|

||||

SyncSelectionProof::from(Signature::empty()),

|

||||

)

|

||||

.await

|

||||

.unwrap()

|

||||

},

|

||||

)

|

||||

.await;

|

||||

}

|

||||

|

||||

#[tokio::test]
async fn mainnet_base_types() {
    test_base_types("mainnet", 4242).await
}

/* The Altair fork for mainnet has not been announced, so this test will always fail.
 *
 * If this test starts failing, it's likely that the fork has been decided and we should remove
 * the `#[should_panic]`
 */
#[tokio::test]
#[should_panic]
async fn mainnet_altair_types() {
    test_altair_types("mainnet", 4243).await
}

#[tokio::test]
async fn pyrmont_base_types() {
    test_base_types("pyrmont", 4244).await
}

#[tokio::test]
async fn pyrmont_altair_types() {
    test_altair_types("pyrmont", 4245).await
}

#[tokio::test]
async fn prater_base_types() {
    test_base_types("prater", 4246).await
}

#[tokio::test]
async fn prater_altair_types() {
    test_altair_types("prater", 4247).await
}

|

||||

}

|

||||

6

testing/web3signer_tests/tls/README.md

Normal file


@ -0,0 +1,6 @@

## TLS Testing Files

The files in this directory are used for testing TLS with web3signer. We store them in this
repository since they are not sensitive and it's simpler than regenerating them for each test.

The files were generated using the `./generate.sh` script.

32

testing/web3signer_tests/tls/cert.pem

Normal file


@ -0,0 +1,32 @@

|

||||

-----BEGIN CERTIFICATE-----

|

||||

MIIFmTCCA4GgAwIBAgIUd6yn4o1bKr2YpzTxcBmoiM4PorkwDQYJKoZIhvcNAQEL

|

||||

BQAwajELMAkGA1UEBhMCVVMxCzAJBgNVBAgMAlZBMREwDwYDVQQHDAhTb21lQ2l0

|

||||

eTESMBAGA1UECgwJTXlDb21wYW55MRMwEQYDVQQLDApNeURpdmlzaW9uMRIwEAYD

|

||||

VQQDDAkxMjcuMC4wLjEwIBcNMjEwOTA2MDgxMDU2WhgPMjEyMTA4MTMwODEwNTZa

|

||||

MGoxCzAJBgNVBAYTAlVTMQswCQYDVQQIDAJWQTERMA8GA1UEBwwIU29tZUNpdHkx

|

||||

EjAQBgNVBAoMCU15Q29tcGFueTETMBEGA1UECwwKTXlEaXZpc2lvbjESMBAGA1UE

|

||||

AwwJMTI3LjAuMC4xMIICIjANBgkqhkiG9w0BAQEFAAOCAg8AMIICCgKCAgEAx/a1

|

||||

SRqehj/D18166GcJh/zOyDtZCbeoLWcVfS1aBq+J1FFy4LYKWgwNhOYsrxHLhsIr

|

||||

/LpHpRm/FFqLPxGNoEPMcJi1dLcELPcJAG1l+B0Ur52V/nxOmzn71Mi0WQv0oOFx

|

||||

hOtUOToY3heVW0JXgrILhdD834mWdsxBWPhq1LeLZcMth4woMgD9AH4KzxUNtFvo

|

||||

8i8IneEYvoDIQ8dGZ5lHnFV5kaC8Is0hevMljTw83E9BD0B/bpp+o2rByccVulsy

|

||||

/WK763tFteDxK5eZZ3/5rRId+uoN5+D4oRnG6zuki0t7+eTZo1cUPi28IIDTNjPR

|

||||

Xvw35dt+SdTDjtI/FUf8VWhLIHZZXaevFliuBbcuOMpWCdjAdwb7Uf9WpMnxzZtK

|

||||

fatAC9dk3VPsehFcf6w/H+ah3tu/szAaDJ5zZb0m05cAxDZekZ9SccBIPglccM3f

|

||||

vzNjrDIoi4z7uCiTJc2FW0qb2MzusQsGjtLW53n7IGoSIFDvOhiZa9D+vOE2wG6o

|

||||

VNf2K9/QvwNDCzRvW81mcUCRr/BhcAmX5drwYPwUEcdBXQeFPt6nZ33fmIgl2Cbv

|

||||

io9kUJzjlQWOZ6BX5FmC69dWAedcfHGY693tG6LQKk9a5B+NiuIB4m1bHcvjYhsh

|

||||

GqVrw980YIN52RmIoskGRdt34/gKHWcqjIEK0+kCAwEAAaM1MDMwCwYDVR0PBAQD

|

||||

AgQwMBMGA1UdJQQMMAoGCCsGAQUFBwMBMA8GA1UdEQQIMAaHBH8AAAEwDQYJKoZI

|

||||

hvcNAQELBQADggIBAILVu5ppYnumyxvchgSLAi/ahBZV/wmtI3X8vxOHuQwYF8rZ

|

||||

7b2gd+PClJBuhxeOEJZTtCSDMMUdlBXsxnoftp0TcDhFXeAlSp0JQe38qGAlX94l

|

||||

4ZH39g+Ut5kVpImb/nI/iQhdOSDzQHaivTMjhNlBW+0EqvVJ1YsjjovtcxXh8gbv

|

||||

4lKpGkuT6xVRrSGsZh0LQiVtngKNqte8vBvFWBQfj9JFyoYmpSvYl/LaYjYkmCya

|

||||

V2FbfrhDXDI0IereknqMKDs8rF4Ik6i22b+uG91yyJsRFh63x7agEngpoxYKYV6V

|

||||

5YXIzH5kLX8hklHnLgVhES2ZjhheDgC8pCRUCPqR4+KVnQcFRHP9MJCqcEIFAppD

|

||||

oHITdiFDs/qE0EDV9WW1iOWgBmdgxUZ8dh1CfW+7B72+Uy0/eXWdnlrRDe5cN/hs

|

||||

xXpnLCMfzSDEMA4WmImabpU/fRXL7pazZENJj7iyIAr/pEL34+QjqVfWaXkWrHoN

|

||||

KsrkxTdoZNVdarBDSw9JtMUECmnWYOjMaOm1O8waib9H1SlPSSPrK5pGT/6h1g0d

|

||||

LM982X36Ej8XyW33E5l6qWiLVRye7SaAvZbVLsyd+cfemi6BPsK+y09eCs4a+Qp7

|

||||

9YWZOPT6s/ahJYdTGF961JZ62ypIioimW6wx8hAMCkKKfhn1WI0+0RlOrjbw

|

||||

-----END CERTIFICATE-----

|

||||

19

testing/web3signer_tests/tls/config

Normal file


@ -0,0 +1,19 @@

[req]
default_bits = 4096
default_md = sha256
distinguished_name = req_distinguished_name
x509_extensions = v3_req
prompt = no
[req_distinguished_name]
C = US
ST = VA
L = SomeCity
O = MyCompany
OU = MyDivision
CN = 127.0.0.1
[v3_req]
keyUsage = keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names
[alt_names]
IP.1 = 127.0.0.1

4

testing/web3signer_tests/tls/generate.sh

Executable file


@ -0,0 +1,4 @@

#!/bin/bash
openssl req -x509 -sha256 -nodes -days 36500 -newkey rsa:4096 -keyout key.key -out cert.pem -config config &&
openssl pkcs12 -export -out key.p12 -inkey key.key -in cert.pem -password pass:$(cat password.txt)

52

testing/web3signer_tests/tls/key.key

Normal file


@ -0,0 +1,52 @@

|

||||

-----BEGIN PRIVATE KEY-----

|

||||

MIIJRAIBADANBgkqhkiG9w0BAQEFAASCCS4wggkqAgEAAoICAQDH9rVJGp6GP8PX

|

||||

zXroZwmH/M7IO1kJt6gtZxV9LVoGr4nUUXLgtgpaDA2E5iyvEcuGwiv8ukelGb8U

|

||||

Wos/EY2gQ8xwmLV0twQs9wkAbWX4HRSvnZX+fE6bOfvUyLRZC/Sg4XGE61Q5Ohje

|

||||

F5VbQleCsguF0PzfiZZ2zEFY+GrUt4tlwy2HjCgyAP0AfgrPFQ20W+jyLwid4Ri+

|

||||

gMhDx0ZnmUecVXmRoLwizSF68yWNPDzcT0EPQH9umn6jasHJxxW6WzL9Yrvre0W1

|

||||

4PErl5lnf/mtEh366g3n4PihGcbrO6SLS3v55NmjVxQ+LbwggNM2M9Fe/Dfl235J

|

||||

1MOO0j8VR/xVaEsgdlldp68WWK4Fty44ylYJ2MB3BvtR/1akyfHNm0p9q0AL12Td

|

||||

U+x6EVx/rD8f5qHe27+zMBoMnnNlvSbTlwDENl6Rn1JxwEg+CVxwzd+/M2OsMiiL

|

||||

jPu4KJMlzYVbSpvYzO6xCwaO0tbnefsgahIgUO86GJlr0P684TbAbqhU1/Yr39C/

|

||||

A0MLNG9bzWZxQJGv8GFwCZfl2vBg/BQRx0FdB4U+3qdnfd+YiCXYJu+Kj2RQnOOV

|

||||

BY5noFfkWYLr11YB51x8cZjr3e0botAqT1rkH42K4gHibVsdy+NiGyEapWvD3zRg

|

||||

g3nZGYiiyQZF23fj+AodZyqMgQrT6QIDAQABAoICAGMICuZGmaXxJIPXDvzUMsM3

|

||||

cA14XvNSEqdRuzHAaSqQexk8sUEaxuurtnJQMGcP0BVQSsqiUuMwahKheP7mKZbq

|

||||

nPBSoONJ1HaUbc/ZXjvP4zPKPsPHOoLj55WNRMwpAKFApaDnj1G8NR6g3WZR59ch

|

||||

aFWAmAv5LxxsshxnAzmQIShnzj+oKSwCk0pQIfhG+/+L2UVAB+tw1HlcfFIc+gBK

|

||||

yE1jg46c5S/zGZaznrBg2d9eHOF51uKm/vrd31WYFGmzyv/0iw7ngTG/UpF9Rgsd

|

||||

NUECjPh8PCDPqTLX+kz7v9UAsEiljye2856LtfT++BuK9DEvhlt/Jf9YsPUlqPl3

|

||||

3wUG8yiqBQrlGTUY1KUdHsulmbTiq4Q9ch5QLcvazk+9c7hlB6WP+/ofqgIPSlDt

|

||||

fOHkROmO7GURz78lVM8+E/pRgy6qDq+yM1uVMeWWme4hKfOAL2lnJDTO4PKNQA4b

|

||||

03YXsdVSz4mm9ppnyHIPXei6/qHpU/cRRf261HNEI16eC0ZnoIAxhORJtxo6kMns

|

||||

am4yuhHm9qLjbOI1uJPAgpR/o0O5NaBgkdEzJ102pmv2grf2U743n9bqu+y/vJF9

|

||||

HRmMDdJgZSmcYxQuLe0INzLDnTzOdmjbqjB6lDsSwtrEo/KLtXIStrFMKSHIE/QV

|

||||

96u8nWPomN83HqkVvQmBAoIBAQDrs8eKAQ3meWtmsSqlzCNVAsJA1xV4DtNaWBTz

|

||||

MJXwRWywem/sHCoPsJ7c5UTUjQDOfNEUu8iW/m60dt0U+81/O9TLBP1Td6jxLg8X

|

||||

92atLs8wHQDUqrgouce0lyS7to+R3K+N8YtWL2y9w9jbf/XT9iTL5TXGc8RFrmMg

|

||||

nDQ1EShojU0U0I1lKpDJTx2R1FANfyd3iHSsENRwYj5MF8iQSag79Ek06BKLWHHt

|

||||

OJj2oiO3VIAKQYVA9aKxfiiOWXWumPHq7r6UoNJK3UNzfBvguhEzl8k6VjZBCR9q

|

||||

WwvSTba4mOgHMIXdV/9Wr3y8Cus2lX5YGOK4OUx/ZaCdaBtZAoIBAQDZLwwZDHen

|

||||

Iw1412m/D/6HBS38bX78t+0hL7LNqgVpiZdNbLq57SGRbUnZZ/jlmtyLw3be6BV3

|

||||

IcLyflYW+4Wi8AAqVADlXjMC+GIuDNCCicwWxJeIFaAGM7Jt6Fa08H/loIAMM7NC

|

||||

y1CmQnCR9OnHRdcBaU1y4ForP4f8B/hwh3hSQEFPKgF/MQwDnR7UzPgRrUOTovN/

|

||||

4D7j1Wx6FpYX9hGZL0i2K1ygRZE03t6VV7xhCkne96VvDEj1Zo/S4HFaEmDD+EjR

|

||||

pvXVhPRed7GZ6AMs2JxOPhRiu3G+AQL1HPMDlA8QiPtTh0Zf99j/5NXKBEyH/fp1

|

||||

V04L1s7wf7sRAoIBAQCb3/ftJ0dXDSNe9Xl7ziXrmXh3wwYasMtLawbn0VDHZlI7

|

||||

36zW28VhPO/CrAi5/En1RIxNBubgHIF/7T/GGcRMCXhvjuwtX+wlG821jtKjY1p3

|

||||

uiaLfh9uJ3aP0ojjbxdBYk3jNENuisyCLtviRZyAQb8R7JKEnJjHcE10CnloQuGT

|

||||

SycXxdhMeDrqNt0aTOtoEZg7L83g4PxtGjuSvQPRkDSm+aXUTEm/R42IUS6vpIi0

|

||||

PDi1D6GdVRT0BrexdC4kelc6hAsbZcPM6MkrvX7+Pm8TzKSyZMNafTr+bhnCScy2

|

||||

BcEkyA0vVXuyizmVbi8hmPnGLyb4qEQT2FTA5FF5AoIBAQCEj0vCCjMKB8IUTN7V

|

||||

aGzBeq7b0PVeSODqjZOEJk9RYFLCRigejZccjWky0lw/wGr2v6JRYbSgVzIHEod3

|

||||

VaP2lKh1LXqyhPF70aETXGz0EClKiEm5HQHkZy90GAi8PcLCpFkjmXbDwRcDs6/D

|

||||

1onOQFmAGgbUpA1FMmzMrwy7mmQdR+zU5d2uBYDAv+jumACdwXRqq14WYgfgxgaE

|

||||

6j5Id7+8EPk/f230wSFk9NdErh1j2YTHG76U7hml9yi33JgzEt6PHn9Lv61y2sjQ

|

||||

1BvJxawSdk/JDekhbil5gGKOu1G0kG01eXZ1QC77Kmr/nWvD9yXDJ4j0kAop/b2n

|

||||

Wz8RAoIBAQDn1ZZGOJuVRUoql2A65zwtu34IrYD+2zQQCBf2hGHtwXT6ovqRFqPV

|

||||

vcQ7KJP+zVT4GimFlZy7lUx8H4j7+/Bxn+PpUHHoDYjVURr12wk2w8pxwcKnbiIw

|

||||

qaMkF5KG2IUVb7F8STEuKv4KKeuRlB4K2HC2J8GZOLXO21iOqNMhMRO11wp9jkKI

|

||||

n83wtLH34lLRz4VzIW3rfvPeVoP1zoDkLvD8k/Oyjrf4Bishg9vCHyhQkB1JDtMU

|

||||

1bfH8mxwKozakpJa23a8lE5NLoc9NOZrKM4+cefY1MZ3FjlaZfkS5jlhY4Qhx+fl

|

||||

+9j5xRPaH+mkJHaJIqzQad+b1A2eIa+L

|

||||

-----END PRIVATE KEY-----

|

||||

BIN

testing/web3signer_tests/tls/key.p12

Normal file


Binary file not shown.

1

testing/web3signer_tests/tls/password.txt

Normal file


@ -0,0 +1 @@

|

||||

meow

|

||||

@ -68,3 +68,5 @@ itertools = "0.10.0"

monitoring_api = { path = "../common/monitoring_api" }
sensitive_url = { path = "../common/sensitive_url" }
task_executor = { path = "../common/task_executor" }
reqwest = { version = "0.11.0", features = ["json","stream"] }
url = "2.2.2"

@ -5,6 +5,7 @@ use crate::{

|

||||

validator_store::ValidatorStore,

|

||||

};

|

||||

use environment::RuntimeContext;

|

||||

use futures::future::join_all;

|

||||

use slog::{crit, error, info, trace};

|

||||

use slot_clock::SlotClock;

|

||||

use std::collections::HashMap;

|

||||

@ -288,7 +289,7 @@ impl<T: SlotClock + 'static, E: EthSpec> AttestationService<T, E> {

|

||||

// Then download, sign and publish a `SignedAggregateAndProof` for each

|

||||

// validator that is elected to aggregate for this `slot` and

|

||||

// `committee_index`.

|

||||

self.produce_and_publish_aggregates(attestation_data, &validator_duties)

|

||||

self.produce_and_publish_aggregates(&attestation_data, &validator_duties)

|

||||

.await

|

||||

.map_err(move |e| {

|

||||

crit!(

|

||||

@ -350,10 +351,11 @@ impl<T: SlotClock + 'static, E: EthSpec> AttestationService<T, E> {

|

||||

.await

|

||||

.map_err(|e| e.to_string())?;

|

||||

|

||||

let mut attestations = Vec::with_capacity(validator_duties.len());

|

||||

|

||||

for duty_and_proof in validator_duties {

|

||||

// Create futures to produce signed `Attestation` objects.

|

||||

let attestation_data_ref = &attestation_data;

|

||||

let signing_futures = validator_duties.iter().map(|duty_and_proof| async move {

|

||||

let duty = &duty_and_proof.duty;

|

||||

let attestation_data = attestation_data_ref;

|

||||

|

||||

// Ensure that the attestation matches the duties.

|

||||

#[allow(clippy::suspicious_operation_groupings)]

|

||||

@ -368,7 +370,7 @@ impl<T: SlotClock + 'static, E: EthSpec> AttestationService<T, E> {

|

||||

"duty_index" => duty.committee_index,

|

||||

"attestation_index" => attestation_data.index,

|

||||

);

|

||||

continue;

|

||||

return None;

|

||||

}

|

||||

|

||||

let mut attestation = Attestation {

|

||||

@ -377,26 +379,38 @@ impl<T: SlotClock + 'static, E: EthSpec> AttestationService<T, E> {

|

||||

signature: AggregateSignature::infinity(),

|

||||

};

|

||||

|

||||

if let Err(e) = self.validator_store.sign_attestation(

|

||||

duty.pubkey,

|

||||

duty.validator_committee_index as usize,

|

||||

&mut attestation,

|

||||

current_epoch,

|

||||

) {

|

||||

crit!(

|

||||

log,

|

||||

"Failed to sign attestation";

|

||||

"error" => ?e,

|

||||

"committee_index" => committee_index,

|

||||

"slot" => slot.as_u64(),

|

||||

);

|

||||

continue;

|

||||

} else {

|

||||

attestations.push(attestation);

|

||||

match self

|

||||

.validator_store

|

||||

.sign_attestation(

|

||||

duty.pubkey,

|

||||

duty.validator_committee_index as usize,

|

||||

&mut attestation,

|

||||

current_epoch,

|

||||

)

|

||||

.await

|

||||

{

|

||||

Ok(()) => Some(attestation),

|

||||

Err(e) => {

|

||||

crit!(

|

||||

log,

|

||||

"Failed to sign attestation";

|

||||

"error" => ?e,

|

||||

"committee_index" => committee_index,

|

||||

"slot" => slot.as_u64(),

|

||||

);

|

||||

None

|

||||

}

|

||||

}

|

||||

}

|

||||

});

|

||||

|

||||

let attestations_slice = attestations.as_slice();

|

||||

// Execute all the futures in parallel, collecting any successful results.

|