## Issue Addressed

Closes#2286Closes#2538Closes#2342

## Proposed Changes

Part II of major slasher optimisations after #2767

These changes will be backwards-incompatible due to the move to MDBX (and the schema change) 😱

* [x] Shrink attester keys from 16 bytes to 7 bytes.

* [x] Shrink attester records from 64 bytes to 6 bytes.

* [x] Separate `DiskConfig` from regular `Config`.

* [x] Add configuration for the LRU cache size.

* [x] Add a "migration" that deletes any legacy LMDB database.

## Issue Addressed

Successor to #2431

## Proposed Changes

* Add a `BlockReplayer` struct to abstract over the intricacies of calling `per_slot_processing` and `per_block_processing` while avoiding unnecessary tree hashing.

* Add a variant of the forwards state root iterator that does not require an `end_state`.

* Use the `BlockReplayer` when reconstructing states in the database. Use the efficient forwards iterator for frozen states.

* Refactor the iterators to remove `Arc<HotColdDB>` (this seems to be neater than making _everything_ an `Arc<HotColdDB>` as I did in #2431).

Supplying the state roots allow us to avoid building a tree hash cache at all when reconstructing historic states, which saves around 1 second flat (regardless of `slots-per-restore-point`). This is a small percentage of worst-case state load times with 200K validators and SPRP=2048 (~15s vs ~16s) but a significant speed-up for more frequent restore points: state loads with SPRP=32 should be now consistently <500ms instead of 1.5s (a ~3x speedup).

## Additional Info

Required by https://github.com/sigp/lighthouse/pull/2628

## Issue Addressed

#2834

## Proposed Changes

Change log message severity from error to debug in attestation verification when attestation state is finalized.

## Issue Addressed

#2841

## Proposed Changes

Not counting dialing peers while deciding if we have reached the target peers in case of outbound peers.

## Additional Info

Checked this running in nodes and bandwidth looks normal, peer count looks normal too

## Proposed Changes

Remove the `is_first_block_in_epoch` logic from the balances cache update logic, as it was incorrect in the case of skipped slots. The updated code is simpler because regardless of whether the block is the first in the epoch we can check if an entry for the epoch boundary root already exists in the cache, and update the cache accordingly.

Additionally, to assist with flip-flopping justified epochs, move to cloning the balance cache rather than moving it. This should still be very fast in practice because the balances cache is a ~1.6MB `Vec`, and this operation is expected to only occur infrequently.

## Issue Addressed

Resolves: https://github.com/sigp/lighthouse/issues/2741

Includes: https://github.com/sigp/lighthouse/pull/2853 so that we can get ssz static tests passing here on v1.1.6. If we want to merge that first, we can make this diff slightly smaller

## Proposed Changes

- Changes the `justified_epoch` and `finalized_epoch` in the `ProtoArrayNode` each to an `Option<Checkpoint>`. The `Option` is necessary only for the migration, so not ideal. But does allow us to add a default logic to `None` on these fields during the database migration.

- Adds a database migration from a legacy fork choice struct to the new one, search for all necessary block roots in fork choice by iterating through blocks in the db.

- updates related to https://github.com/ethereum/consensus-specs/pull/2727

- We will have to update the persisted forkchoice to make sure the justified checkpoint stored is correct according to the updated fork choice logic. This boils down to setting the forkchoice store's justified checkpoint to the justified checkpoint of the block that advanced the finalized checkpoint to the current one.

- AFAICT there's no migration steps necessary for the update to allow applying attestations from prior blocks, but would appreciate confirmation on that

- I updated the consensus spec tests to v1.1.6 here, but they will fail until we also implement the proposer score boost updates. I confirmed that the previously failing scenario `new_finalized_slot_is_justified_checkpoint_ancestor` will now pass after the boost updates, but haven't confirmed _all_ tests will pass because I just quickly stubbed out the proposer boost test scenario formatting.

- This PR now also includes proposer boosting https://github.com/ethereum/consensus-specs/pull/2730

## Additional Info

I realized checking justified and finalized roots in fork choice makes it more likely that we trigger this bug: https://github.com/ethereum/consensus-specs/pull/2727

It's possible the combination of justified checkpoint and finalized checkpoint in the forkchoice store is different from in any block in fork choice. So when trying to startup our store's justified checkpoint seems invalid to the rest of fork choice (but it should be valid). When this happens we get an `InvalidBestNode` error and fail to start up. So I'm including that bugfix in this branch.

Todo:

- [x] Fix fork choice tests

- [x] Self review

- [x] Add fix for https://github.com/ethereum/consensus-specs/pull/2727

- [x] Rebase onto Kintusgi

- [x] Fix `num_active_validators` calculation as @michaelsproul pointed out

- [x] Clean up db migrations

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

N/A

## Proposed Changes

Changes required for the `merge-devnet-3`. Added some more non substantive renames on top of @realbigsean 's commit.

Note: this doesn't include the proposer boosting changes in kintsugi v3.

This devnet isn't running with the proposer boosting fork choice changes so if we are looking to merge https://github.com/sigp/lighthouse/pull/2822 into `unstable`, then I think we should just maintain this branch for the devnet temporarily.

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Proposed Changes

In the event of a late block, keep the block in the snapshot cache by cloning it. This helps us process new blocks quickly in the event the late block was re-org'd.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

We were calculating justified balances incorrectly on cache misses in `set_justified_checkpoint`

## Proposed Changes

Use the `get_effective_balances` method as opposed to `state.balances`, which returns exact balances

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

New rust lints

## Proposed Changes

- Boxing some enum variants

- removing some unused fields (is the validator lockfile unused? seemed so to me)

## Additional Info

- some error fields were marked as dead code but are logged out in areas

- left some dead fields in our ef test code because I assume they are useful for debugging?

Co-authored-by: realbigsean <seananderson33@gmail.com>

Fix max packet sizes

Fix max_payload_size function

Add merge block test

Fix max size calculation; fix up test

Clear comments

Add a payload_size_function

Use safe arith for payload calculation

Return an error if block too big in block production

Separate test to check if block is over limit

* Fix fork choice after rebase

* Remove paulhauner warp dep

* Fix fork choice test compile errors

* Assume fork choice payloads are valid

* Add comment

* Ignore new tests

* Fix error in test skipping

* Change base_fee_per_gas to Uint256

* Add custom (de)serialization to ExecutionPayload

* Fix errors

* Add a quoted_u256 module

* Remove unused function

* lint

* Add test

* Remove extra line

Co-authored-by: Paul Hauner <paul@paulhauner.com>

* Fix arbitrary check kintsugi

* Add merge chain spec fields, and a function to determine which constant to use based on the state variant

* increment spec test version

* Remove `Transaction` enum wrapper

* Remove Transaction new-type

* Remove gas validations

* Add `--terminal-block-hash-epoch-override` flag

* Increment spec tests version to 1.1.5

* Remove extraneous gossip verification https://github.com/ethereum/consensus-specs/pull/2687

* - Remove unused Error variants

- Require both "terminal-block-hash-epoch-override" and "terminal-block-hash-override" when either flag is used

* - Remove a couple more unused Error variants

Co-authored-by: Paul Hauner <paul@paulhauner.com>

* update initializing from eth1 for merge genesis

* read execution payload header from file lcli

* add `create-payload-header` command to `lcli`

* fix base fee parsing

* Apply suggestions from code review

* default `execution_payload_header` bool to false when deserializing `meta.yml` in EF tests

Co-authored-by: Paul Hauner <paul@paulhauner.com>

* Add payload verification status to fork choice

* Pass payload verification status to import_block

* Add valid back-propagation

* Add head safety status latch to API

* Remove ExecutionLayerStatus

* Add execution info to client notifier

* Update notifier logs

* Change use of "hash" to refer to beacon block

* Shutdown on invalid finalized block

* Tidy, add comments

* Fix failing FC tests

* Allow blocks with unsafe head

* Fix forkchoiceUpdate call on startup

* Ignore payload errors

* Only return payload handle on valid response

* Push some engine logs down to debug

* Push ee fork choice log to debug

* Push engine call failure to debug

* Push some more errors to debug

* Fix panic at startup

* Reject some HTTP endpoints when EL is not ready

* Restrict more endpoints

* Add watchdog task

* Change scheduling

* Update to new schedule

* Add "syncing" concept

* Remove RequireSynced

* Add is_merge_complete to head_info

* Cache latest_head in Engines

* Call consensus_forkchoiceUpdate on startup

* Thread eth1_block_hash into interop genesis state

* Add merge-fork-epoch flag

* Build LH with minimal spec by default

* Add verbose logs to execution_layer

* Add --http-allow-sync-stalled flag

* Update lcli new-testnet to create genesis state

* Fix http test

* Fix compile errors in tests

* Start adding merge tests

* Expose MockExecutionLayer

* Add mock_execution_layer to BeaconChainHarness

* Progress with merge test

* Return more detailed errors with gas limit issues

* Use a better gas limit in block gen

* Ensure TTD is met in block gen

* Fix basic_merge tests

* Start geth testing

* Fix conflicts after rebase

* Remove geth tests

* Improve merge test

* Address clippy lints

* Make pow block gen a pure function

* Add working new test, breaking existing test

* Fix test names

* Add should_panic

* Don't run merge tests in debug

* Detect a tokio runtime when starting MockServer

* Fix clippy lint, include merge tests

* - Update the fork choice `ProtoNode` to include `is_merge_complete`

- Add database migration for the persisted fork choice

* update tests

* Small cleanup

* lints

* store execution block hash in fork choice rather than bool

Added Execution Payload from Rayonism Fork

Updated new Containers to match Merge Spec

Updated BeaconBlockBody for Merge Spec

Completed updating BeaconState and BeaconBlockBody

Modified ExecutionPayload<T> to use Transaction<T>

Mostly Finished Changes for beacon-chain.md

Added some things for fork-choice.md

Update to match new fork-choice.md/fork.md changes

ran cargo fmt

Added Missing Pieces in eth2_libp2p for Merge

fix ef test

Various Changes to Conform Closer to Merge Spec

## Issue Addressed

Closes#1996

## Proposed Changes

Run a second `Logger` via `sloggers` which logs to a file in the background with:

- separate `debug-level` for background and terminal logging

- the ability to limit log size

- rotation through a customizable number of log files

- an option to compress old log files (`.gz` format)

Add the following new CLI flags:

- `--logfile-debug-level`: The debug level of the log files

- `--logfile-max-size`: The maximum size of each log file

- `--logfile-max-number`: The number of old log files to store

- `--logfile-compress`: Whether to compress old log files

By default background logging uses the `debug` log level and saves logfiles to:

- Beacon Node: `$HOME/.lighthouse/$network/beacon/logs/beacon.log`

- Validator Client: `$HOME/.lighthouse/$network/validators/logs/validator.log`

Or, when using the `--datadir` flag:

`$datadir/beacon/logs/beacon.log` and `$datadir/validators/logs/validator.log`

Once rotated, old logs are stored like so: `beacon.log.1`, `beacon.log.2` etc.

> Note: `beacon.log.1` is always newer than `beacon.log.2`.

## Additional Info

Currently the default value of `--logfile-max-size` is 200 (MB) and `--logfile-max-number` is 5.

This means that the maximum storage space that the logs will take up by default is 1.2GB.

(200MB x 5 from old log files + <200MB the current logfile being written to)

Happy to adjust these default values to whatever people think is appropriate.

It's also worth noting that when logging to a file, we lose our custom `slog` formatting. This means the logfile logs look like this:

```

Oct 27 16:02:50.305 INFO Lighthouse started, version: Lighthouse/v2.0.1-8edd9d4+, module: lighthouse:413

Oct 27 16:02:50.305 INFO Configured for network, name: prater, module: lighthouse:414

```

## Issue Addressed

Users are experiencing `Status'd peer not found` errors

## Proposed Changes

Although I cannot reproduce this error, this is only one connection state change that is not addressed in the peer manager (that I could see). The error occurs because the number of disconnected peers in the peerdb becomes out of sync with the actual number of disconnected peers. From what I can tell almost all possible connection state changes are handled, except for the case when a disconnected peer changes to be disconnecting. This can potentially happen at the peer connection limit, where a previously connected peer switches to disconnecting.

This PR decrements the disconnected counter when this event occurs and from what I can tell, covers all possible disconnection state changes in the peer manager.

We were batch removing chains when purging, and then updating the status of the collection for each of those. This makes the range status be out of sync with the real status. This represented no harm to the global sync status, but I've changed it to comply with a correct debug assertion that I got triggered while doing some testing.

Also added tests and improved code quality as per @paulhauner 's suggestions.

## Issue Addressed

N/A

## Proposed Changes

1. Don't disconnect peer from dht on connection limit errors

2. Bump up `PRIORITY_PEER_EXCESS` to allow for dialing upto 60 peers by default.

Co-authored-by: Diva M <divma@protonmail.com>

I had this change but it seems to have been lost in chaos of network upgrades.

The swarm dialing event seems to miss some cases where we dial via the behaviour. This causes an error to be logged as the peer manager doesn't know about some dialing events.

This shifts the logic to the behaviour to inform the peer manager.

## Issue Addressed

Part of a bigger effort to make the network globals read only. This moves all writes to the `PeerDB` to the `eth2_libp2p` crate. Limiting writes to the peer manager is a slightly more complicated issue for a next PR, to keep things reviewable.

## Proposed Changes

- Make the peers field in the globals a private field.

- Allow mutable access to the peers field to `eth2_libp2p` for now.

- Add a new network message to update the sync state.

Co-authored-by: Age Manning <Age@AgeManning.com>

## Issue Addressed

Running a beacon node I triggered a sync debug panic. And so finally the time to create tests for sync arrived. Fortunately, te bug was not in the sync algorithm itself but a wrong assertion

## Proposed Changes

- Split Range's impl from the BeaconChain via a trait. This is needed for testing. The TestingRig/Harness is way bigger than needed and does not provide the modification functionalities that are needed to test sync. I find this simpler, tho some could disagree.

- Add a regression test for sync that fails before the changes.

- Fix the wrong assertion.

RPC Responses are for some reason not removing their timeout when they are completing.

As an example:

```

Nov 09 01:18:20.256 DEBG Received BlocksByRange Request step: 1, start_slot: 728465, count: 64, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:20.263 DEBG Received BlocksByRange Request step: 1, start_slot: 728593, count: 64, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:20.483 DEBG BlocksByRange Response sent returned: 63, requested: 64, current_slot: 2466389, start_slot: 728465, msg: Failed to return all requested blocks, peer: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:20.500 DEBG BlocksByRange Response sent returned: 64, requested: 64, current_slot: 2466389, start_slot: 728593, peer: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:21.068 DEBG Received BlocksByRange Request step: 1, start_slot: 728529, count: 64, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:21.272 DEBG BlocksByRange Response sent returned: 63, requested: 64, current_slot: 2466389, start_slot: 728529, msg: Failed to return all requested blocks, peer: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:23.434 DEBG Received BlocksByRange Request step: 1, start_slot: 728657, count: 64, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:23.665 DEBG BlocksByRange Response sent returned: 64, requested: 64, current_slot: 2466390, start_slot: 728657, peer: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:25.851 DEBG Received BlocksByRange Request step: 1, start_slot: 728337, count: 64, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:25.851 DEBG Received BlocksByRange Request step: 1, start_slot: 728401, count: 64, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:26.094 DEBG BlocksByRange Response sent returned: 62, requested: 64, current_slot: 2466390, start_slot: 728401, msg: Failed to return all requested blocks, peer: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:26.100 DEBG BlocksByRange Response sent returned: 63, requested: 64, current_slot: 2466390, start_slot: 728337, msg: Failed to return all requested blocks, peer: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw

Nov 09 01:18:31.070 DEBG RPC Error direction: Incoming, score: 0, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw, client: Prysm: version: a80b1c252a9b4773493b41999769bf3134ac373f, os_version: unknown, err: Stream Timeout, protocol: beacon_blocks_by_range, service: libp2p

Nov 09 01:18:31.070 WARN Timed out to a peer's request. Likely insufficient resources, reduce peer count, service: libp2p

Nov 09 01:18:31.085 DEBG RPC Error direction: Incoming, score: 0, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw, client: Prysm: version: a80b1c252a9b4773493b41999769bf3134ac373f, os_version: unknown, err: Stream Timeout, protocol: beacon_blocks_by_range, service: libp2p

Nov 09 01:18:31.085 WARN Timed out to a peer's request. Likely insufficient resources, reduce peer count, service: libp2p

Nov 09 01:18:31.459 DEBG RPC Error direction: Incoming, score: 0, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw, client: Prysm: version: a80b1c252a9b4773493b41999769bf3134ac373f, os_version: unknown, err: Stream Timeout, protocol: beacon_blocks_by_range, service: libp2p

Nov 09 01:18:31.459 WARN Timed out to a peer's request. Likely insufficient resources, reduce peer count, service: libp2p

Nov 09 01:18:34.129 DEBG RPC Error direction: Incoming, score: 0, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw, client: Prysm: version: a80b1c252a9b4773493b41999769bf3134ac373f, os_version: unknown, err: Stream Timeout, protocol: beacon_blocks_by_range, service: libp2p

Nov 09 01:18:34.130 WARN Timed out to a peer's request. Likely insufficient resources, reduce peer count, service: libp2p

Nov 09 01:18:35.686 DEBG Peer Manager disconnecting peer reason: Too many peers, peer_id: 16Uiu2HAmEmBURejquBUMgKAqxViNoPnSptTWLA2CfgSPnnKENBNw, service: libp2p

```

This PR is to investigate and correct the issue.

~~My current thoughts are that for some reason we are not closing the streams correctly, or fast enough, or the executor is not registering the closes and waking up.~~ - Pretty sure this is not the case, see message below for a more accurate reason.

~~I've currently added a timeout to stream closures in an attempt to force streams to close and the future to always complete.~~ I removed this

## Issue Addressed

Resolves#2545

## Proposed Changes

Adds the long-overdue EF tests for fork choice. Although we had pretty good coverage via other implementations that closely followed our approach, it is nonetheless important for us to implement these tests too.

During testing I found that we were using a hard-coded `SAFE_SLOTS_TO_UPDATE_JUSTIFIED` value rather than one from the `ChainSpec`. This caused a failure during a minimal preset test. This doesn't represent a risk to mainnet or testnets, since the hard-coded value matched the mainnet preset.

## Failing Cases

There is one failing case which is presently marked as `SkippedKnownFailure`:

```

case 4 ("new_finalized_slot_is_justified_checkpoint_ancestor") from /home/paul/development/lighthouse/testing/ef_tests/consensus-spec-tests/tests/minimal/phase0/fork_choice/on_block/pyspec_tests/new_finalized_slot_is_justified_checkpoint_ancestor failed with NotEqual:

head check failed: Got Head { slot: Slot(40), root: 0x9183dbaed4191a862bd307d476e687277fc08469fc38618699863333487703e7 } | Expected Head { slot: Slot(24), root: 0x105b49b51bf7103c182aa58860b039550a89c05a4675992e2af703bd02c84570 }

```

This failure is due to #2741. It's not a particularly high priority issue at the moment, so we fix it after merging this PR.

This simply moves some functions that were "swarm notifications" to a network behaviour implementation.

Notes

------

- We could disconnect from the peer manager but we would lose the rpc shutdown message

- We still notify from the swarm since this is the most reliable way to get some events. Ugly but best for now

- Events need to be pushed with "add event" to wake the waker

Co-authored-by: Divma <26765164+divagant-martian@users.noreply.github.com>

## Issue Addressed

Closes https://github.com/sigp/lighthouse/issues/2112

Closes https://github.com/sigp/lighthouse/issues/1861

## Proposed Changes

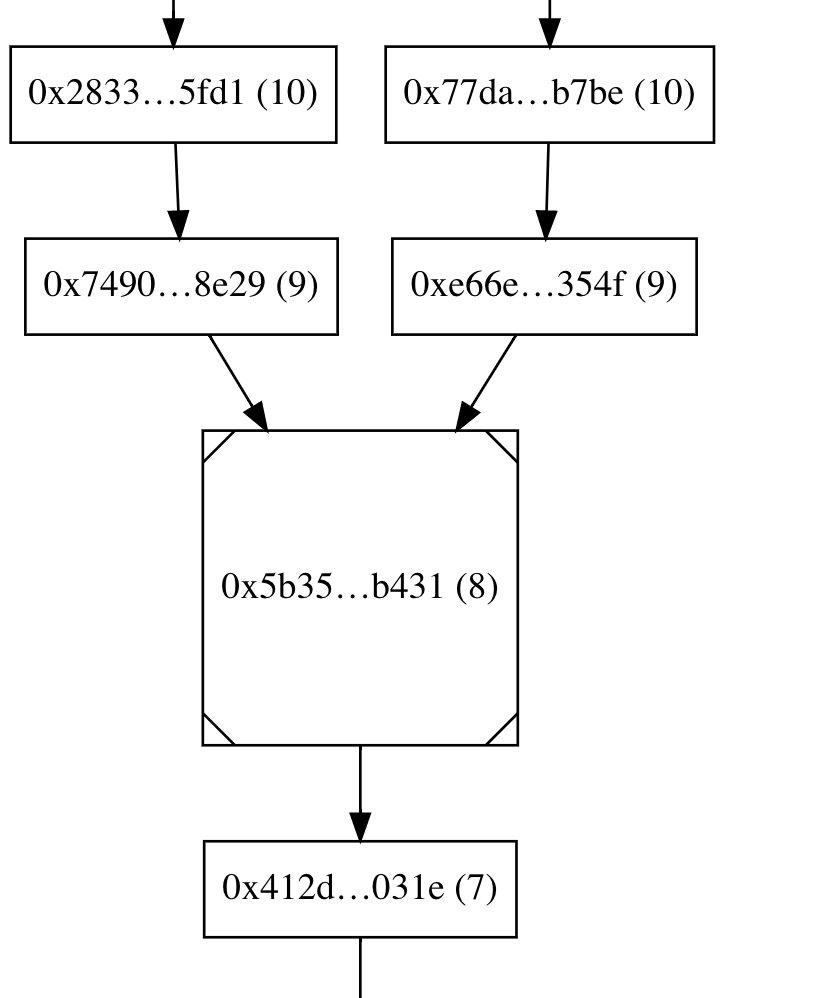

Collect attestations by validator index in the slasher, and use the magic of reference counting to automatically discard redundant attestations. This results in us storing only 1-2% of the attestations observed when subscribed to all subnets, which carries over to a 50-100x reduction in data stored 🎉

## Additional Info

There's some nuance to the configuration of the `slot-offset`. It has a profound effect on the effictiveness of de-duplication, see the docs added to the book for an explanation: 5442e695e5/book/src/slasher.md (slot-offset)

## Issue Addressed

I've done this change in a couple of WIPs already so I might as well submit it on its own. This changes no functionality but reduces coupling in a 0.0001%. It also helps new people who need to work in the peer manager to better understand what it actually needs from the outside

## Proposed Changes

Add a config to the peer manager

## Issue Addressed

The computation of metrics in the network service can be expensive. This disables the computation unless the cli flag `metrics` is set.

## Additional Info

Metrics in other parts of the network are still updated, since most are simple metrics and checking if metrics are enabled each time each metric is updated doesn't seem like a gain.

## Issue Addressed

@paulhauner noticed that when we send head events, we use the block root from `new_head` in `fork_choice_internal`, but calculate `dependent_root` and `previous_dependent_root` using the `canonical_head`. This is normally fine because `new_head` updates the `canonical_head` in `fork_choice_internal`, but it's possible we have a reorg updating `canonical_head` before our head events are sent. So this PR ensures `dependent_root` and `previous_dependent_root` are always derived from the state associated with `new_head`.

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

Resolves#2612

## Proposed Changes

Implements both the checks mentioned in the original issue.

1. Verifies the `randao_reveal` in the beacon node

2. Cross checks the proposer index after getting back the block from the beacon node.

## Additional info

The block production time increases by ~10x because of the signature verification on the beacon node (based on the `beacon_block_production_process_seconds` metric) when running on a local testnet.

## Proposed Changes

Add several metrics for the number of attestations in the op pool. These give us a way to observe the number of valid, non-trivial attestations during block packing rather than just the size of the entire op pool.

## Issue Addressed

Getting too many peers kicked due to slightly late sync committee messages as tested on.. under-performant hardware.

## Proposed Changes

Only penalize if the message is more than one slot late. Still ignore the message-

Co-authored-by: Divma <26765164+divagant-martian@users.noreply.github.com>

This is a pre-cursor to the next libp2p upgrade.

It is currently being used for staging a number of PR upgrades which are contingent on the latest libp2p.

## Issue Addressed

The [p2p-interface section of the `altair` spec](https://github.com/ethereum/consensus-specs/blob/dev/specs/altair/p2p-interface.md#transitioning-the-gossip) says you should subscribe to the topics for a fork "In advance of the fork" and unsubscribe from old topics `2 Epochs` after the new fork is activated. We've chosen to subscribe to new fork topics `2 slots` before the fork is initiated.

This function is supposed to return the required fork digests at any given time but as it was currently written, it doesn't return the fork digest for a previous fork if you've switched to the current fork less than 2 epoch's ago. Also this function required modification for every new fork we add.

## Proposed Changes

Make this function fork-agnostic and correctly handle the previous fork topic digests when you've only just switched to the new fork.

## Issue Addressed

Resolves#2611

## Proposed Changes

Adds a duplicate block root cache to the `BeaconProcessor`. Adds the block root to the cache before calling `process_gossip_block` and `process_rpc_block`. Since `process_rpc_block` is called only for single block lookups, we don't have to worry about batched block imports.

The block is imported from the source(gossip/rpc) that arrives first. The block that arrives second is not imported to avoid the db access issue.

There are 2 cases:

1. Block that arrives second is from rpc: In this case, we return an optimistic `BlockError::BlockIsAlreadyKnown` to sync.

2. Block that arrives second is from gossip: In this case, we only do gossip verification and forwarding but don't import the block into the the beacon chain.

## Additional info

Splits up `process_gossip_block` function to `process_gossip_unverified_block` and `process_gossip_verified_block`.

## Proposed Changes

* Add the `Eth-Consensus-Version` header to the HTTP API for the block and state endpoints. This is part of the v2.1.0 API that was recently released: https://github.com/ethereum/beacon-APIs/pull/170

* Add tests for the above. I refactored the `eth2` crate's helper functions to make this more straight-forward, and introduced some new mixin traits that I think greatly improve readability and flexibility.

* Add a new `map_with_fork!` macro which is useful for decoding a superstruct type without naming all its variants. It is now used for SSZ-decoding `BeaconBlock` and `BeaconState`, and for JSON-decoding `SignedBeaconBlock` in the API.

## Additional Info

The `map_with_fork!` changes will conflict with the Merge changes, but when resolving the conflict the changes from this branch should be preferred (it is no longer necessary to enumerate every fork). The merge fork _will_ need to be added to `map_fork_name_with`.

## Issue Addressed

Currently, if you launch the beacon node with the `--purge-db` flag and the `beacon` directory exists, but one (or both) of the `chain_db` or `freezer-db` directories are missing, it will error unnecessarily with:

```

Failed to remove chain_db: No such file or directory (os error 2)

```

This is an edge case which can occur in cases of manual intervention (a user deleted the directory) or if you had previously run with the `--purge-db` flag and Lighthouse errored before it could initialize the db directories.

## Proposed Changes

Check if the `chain_db`/`freezer_db` exists before attempting to remove them. This prevents unnecessary errors.

## Issue Addressed

Attempts to fix#2701 but I doubt this is the reason behind that.

## Proposed Changes

maintain a waker in the rpc handler and call it if an event is received

## Issue Addressed

In the backfill sync the state was maintained twice, once locally and also in the globals. This makes it so that it's maintained only once.

The only behavioral change is that when backfill sync in paused, the global backfill state is updated. I asked @AgeManning about this and he deemed it a bug, so this solves it.

## Issue Addressed

When compiling with Rust 1.56.0 the compiler generates 3 instances of this warning:

```

warning: trailing semicolon in macro used in expression position

--> common/eth2_network_config/src/lib.rs:181:24

|

181 | })?;

| ^

...

195 | let deposit_contract_deploy_block = load_from_file!(DEPLOY_BLOCK_FILE);

| ---------------------------------- in this macro invocation

|

= note: `#[warn(semicolon_in_expressions_from_macros)]` on by default

= warning: this was previously accepted by the compiler but is being phased out; it will become a hard error in a future release!

= note: for more information, see issue #79813 <https://github.com/rust-lang/rust/issues/79813>

= note: this warning originates in the macro `load_from_file` (in Nightly builds, run with -Z macro-backtrace for more info)

```

This warning is completely harmless, but will be visible to users compiling Lighthouse v2.0.1 (or earlier) with Rust 1.56.0 (to be released October 21st). It is **completely safe** to ignore this warning, it's just a superficial change to Rust's syntax.

## Proposed Changes

This PR removes the semi-colon as recommended, and fixes the new Clippy lints from 1.56.0

## Issue Addressed

Mitigates #1096

## Proposed Changes

Add a flag to the beacon node called `--disable-lock-timeouts` which allows opting out of lock timeouts.

The lock timeouts serve a dual purpose:

1. They prevent any single operation from hogging the lock for too long. When a timeout occurs it logs a nasty error which indicates that there's suboptimal lock use occurring, which we can then act on.

2. They allow deadlock detection. We're fairly sure there are no deadlocks left in Lighthouse anymore but the timeout locks offer a safeguard against that.

However, timeouts on locks are not without downsides:

They allow for the possibility of livelock, particularly on slower hardware. If lock timeouts keep failing spuriously the node can be prevented from making any progress, even if it would be able to make progress slowly without the timeout. One particularly concerning scenario which could occur would be if a DoS attack succeeded in slowing block signature verification times across the network, and all Lighthouse nodes got livelocked because they timed out repeatedly. This could also occur on just a subset of nodes (e.g. dual core VPSs or Raspberri Pis).

By making the behaviour runtime configurable this PR allows us to choose the behaviour we want depending on circumstance. I suspect that long term we could make the timeout-free approach the default (#2381 moves in this direction) and just enable the timeouts on our testnet nodes for debugging purposes. This PR conservatively leaves the default as-is so we can gain some more experience before switching the default.

## Description

The `eth2_libp2p` crate was originally named and designed to incorporate a simple libp2p integration into lighthouse. Since its origins the crates purpose has expanded dramatically. It now houses a lot more sophistication that is specific to lighthouse and no longer just a libp2p integration.

As of this writing it currently houses the following high-level lighthouse-specific logic:

- Lighthouse's implementation of the eth2 RPC protocol and specific encodings/decodings

- Integration and handling of ENRs with respect to libp2p and eth2

- Lighthouse's discovery logic, its integration with discv5 and logic about searching and handling peers.

- Lighthouse's peer manager - This is a large module handling various aspects of Lighthouse's network, such as peer scoring, handling pings and metadata, connection maintenance and recording, etc.

- Lighthouse's peer database - This is a collection of information stored for each individual peer which is specific to lighthouse. We store connection state, sync state, last seen ips and scores etc. The data stored for each peer is designed for various elements of the lighthouse code base such as syncing and the http api.

- Gossipsub scoring - This stores a collection of gossipsub 1.1 scoring mechanisms that are continuously analyssed and updated based on the ethereum 2 networks and how Lighthouse performs on these networks.

- Lighthouse specific types for managing gossipsub topics, sync status and ENR fields

- Lighthouse's network HTTP API metrics - A collection of metrics for lighthouse network monitoring

- Lighthouse's custom configuration of all networking protocols, RPC, gossipsub, discovery, identify and libp2p.

Therefore it makes sense to rename the crate to be more akin to its current purposes, simply that it manages the majority of Lighthouse's network stack. This PR renames this crate to `lighthouse_network`

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

NA

## Proposed Changes

- Update versions to `v2.0.1` in anticipation for a release early next week.

- Add `--ignore` to `cargo audit`. See #2727.

## Additional Info

NA

## Description

This PR updates the peer connection transition logic. It is acceptable for a peer to immediately transition from a disconnected state to a disconnecting state. This can occur when we are at our peer limit and a new peer's dial us.

## Issue Addressed

NA

## Proposed Changes

Adds some more testing for Altair to the op pool. Credits to @michaelsproul for some appropriated efforts here.

## Additional Info

NA

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Proposed Changes

Clone the proposer pubkeys during backfill signature verification to reduce the time that the pubkey cache lock is held for. Cloning such a small number of pubkeys has negligible impact on the total running time, but greatly reduces lock contention.

On a Ryzen 5950X, the setup step seems to take around 180us regardless of whether the key is cloned or not, while the verification takes 7ms. When Lighthouse is limited to 10% of one core using `sudo cpulimit --pid <pid> --limit 10` the total time jumps up to 800ms, but the setup step remains only 250us. This means that under heavy load this PR could cut the time the lock is held for from 800ms to 250us, which is a huge saving of 99.97%!

## Issue Addressed

#2695

## Proposed Changes

This updates discovery to the latest version which has patched a panic that occurred due to a race condition in the bucket logic.

## Issue Addressed

NA

## Proposed Changes

This PR is near-identical to https://github.com/sigp/lighthouse/pull/2652, however it is to be merged into `unstable` instead of `merge-f2f`. Please see that PR for reasoning.

I'm making this duplicate PR to merge to `unstable` in an effort to shrink the diff between `unstable` and `merge-f2f` by doing smaller, lead-up PRs.

## Additional Info

NA

Currently, the beacon node has no ability to serve the HTTP API over TLS.

Adding this functionality would be helpful for certain use cases, such as when you need a validator client to connect to a backup beacon node which is outside your local network, and the use of an SSH tunnel or reverse proxy would be inappropriate.

## Proposed Changes

- Add three new CLI flags to the beacon node

- `--http-enable-tls`: enables TLS

- `--http-tls-cert`: to specify the path to the certificate file

- `--http-tls-key`: to specify the path to the key file

- Update the HTTP API to optionally use `warp`'s [`TlsServer`](https://docs.rs/warp/0.3.1/warp/struct.TlsServer.html) depending on the presence of the `--http-enable-tls` flag

- Update tests and docs

- Use a custom branch for `warp` to ensure proper error handling

## Additional Info

Serving the API over TLS should currently be considered experimental. The reason for this is that it uses code from an [unmerged PR](https://github.com/seanmonstar/warp/pull/717). This commit provides the `try_bind_with_graceful_shutdown` method to `warp`, which is helpful for controlling error flow when the TLS configuration is invalid (cert/key files don't exist, incorrect permissions, etc).

I've implemented the same code in my [branch here](https://github.com/macladson/warp/tree/tls).

Once the code has been reviewed and merged upstream into `warp`, we can remove the dependency on my branch and the feature can be considered more stable.

Currently, the private key file must not be password-protected in order to be read into Lighthouse.

## Proposed Changes

This is a refactor of the PeerDB and PeerManager. A number of bugs have been surfacing around the connection state of peers and their interaction with the score state.

This refactor tightens the mutability properties of peers such that only specific modules are able to modify the state of peer information preventing inadvertant state changes that can lead to our local peer manager db being out of sync with libp2p.

Further, the logic around connection and scoring was quite convoluted and the distinction between the PeerManager and Peerdb was not well defined. Although these issues are not fully resolved, this PR is step to cleaning up this logic. The peerdb solely manages most mutability operations of peers leaving high-order logic to the peer manager.

A single `update_connection_state()` function has been added to the peer-db making it solely responsible for modifying the peer's connection state. The way the peer's scores can be modified have been reduced to three simple functions (`update_scores()`, `update_gossipsub_scores()` and `report_peer()`). This prevents any add-hoc modifications of scores and only natural processes of score modification is allowed which simplifies the reasoning of score and state changes.

## Issue Addressed

Fix `cargo audit` failures on `unstable`

Closes#2698

## Proposed Changes

The main culprit is `nix`, which is vulnerable for versions below v0.23.0. We can't get by with a straight-forward `cargo update` because `psutil` depends on an old version of `nix` (cf. https://github.com/rust-psutil/rust-psutil/pull/93). Hence I've temporarily forked `psutil` under the `sigp` org, where I've included the update to `nix` v0.23.0.

Additionally, I took the chance to update the `time` dependency to v0.3, which removed a bunch of stale deps including `stdweb` which is no longer maintained. Lighthouse only uses the `time` crate in the notifier to do some pretty printing, and so wasn't affected by any of the breaking changes in v0.3 ([changelog here](https://github.com/time-rs/time/blob/main/CHANGELOG.md#030-2021-07-30)).

## Issue Addressed

Resolves#2563

Replacement for #2653 as I'm not able to reopen that PR after force pushing.

## Proposed Changes

Fixes all broken api links. Cherry picked changes in #2590 and updated a few more links.

Co-authored-by: Mason Stallmo <masonstallmo@gmail.com>

## Issue Addressed

Resolves#2555

## Proposed Changes

Don't log errors on resubscribing to topics. Also don't log errors if we are setting already set attnet/syncnet bits.

## Issue Addressed

Fix#2585

## Proposed Changes

Provide a canonical version of test_logger that can be used

throughout lighthouse.

## Additional Info

This allows tests to conditionally emit logging data by adding

test_logger as the default logger. And then when executing

`cargo test --features logging/test_logger` log output

will be visible:

wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)

$ cargo test --features logging/test_logger

Finished test [unoptimized + debuginfo] target(s) in 0.02s

Running unittests (target/debug/deps/test_logger-e20115db6a5e3714)

running 1 test

Sep 10 12:53:45.212 INFO hi, module: test_logger:8

test tests::test_fn_with_logging ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests test-logger

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Or, in normal scenarios where logging isn't needed, executing

`cargo test` the log output will not be visible:

wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)

$ cargo test

Finished test [unoptimized + debuginfo] target(s) in 0.02s

Running unittests (target/debug/deps/test_logger-02e02f8d41e8cf8a)

running 1 test

test tests::test_fn_with_logging ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests test-logger

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

## Issue Addressed

This PR addresses an issue found by @YorickDowne during testing of v2.0.0-rc.0.

Due to a lack of atomic database writes on checkpoint sync start-up, it was possible for the database to get into an inconsistent state from which it couldn't recover without `--purge-db`. The core of the issue was that the store's anchor info was being stored _before_ the `PersistedBeaconChain`. If a crash occured so that anchor info was stored but _not_ the `PersistedBeaconChain`, then on restart Lighthouse would think the database was unitialized and attempt to compare-and-swap a `None` value, but would actually find the stale info from the previous run.

## Proposed Changes

The issue is fixed by writing the anchor info, the split point, and the `PersistedBeaconChain` atomically on start-up. Some type-hinting ugliness was required, which could possibly be cleaned up in future refactors.

## Issue Addressed

This PR addresses issue #2657

## Proposed Changes

Changes `/eth/v1/config/deposit_contract` endpoint to return the chain ID from the loaded chain spec instead of eth1::DEFAULT_NETWORK_ID which is the Goerli chain ID of 5.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Proposed Changes

Cut the first release candidate for v2.0.0, in preparation for testing and release this week

## Additional Info

Builds on #2632, which should either be merged first or in the same batch

## Issue Addressed

N/A

## Proposed Changes

When peers switching to a disconnecting state, decrement the disconnected peers counter. This also downgrades some crit logs to errors.

I've also added a re-sync point when peers get unbanned the disconnected peer count will match back to the number of disconnected peers if it has gone out of sync previously.

## Issue Addressed

Closes#2528

## Proposed Changes

- Add `BlockTimesCache` to provide block timing information to `BeaconChain`. This allows additional metrics to be calculated for blocks that are set as head too late.

- Thread the `seen_timestamp` of blocks received from RPC responses (except blocks from syncing) through to the sync manager, similar to what is done for blocks from gossip.

## Additional Info

This provides the following additional metrics:

- `BEACON_BLOCK_OBSERVED_SLOT_START_DELAY_TIME`

- The delay between the start of the slot and when the block was first observed.

- `BEACON_BLOCK_IMPORTED_OBSERVED_DELAY_TIME`

- The delay between when the block was first observed and when the block was imported.

- `BEACON_BLOCK_HEAD_IMPORTED_DELAY_TIME`

- The delay between when the block was imported and when the block was set as head.

The metric `BEACON_BLOCK_IMPORTED_SLOT_START_DELAY_TIME` was removed.

A log is produced when a block is set as head too late, e.g.:

```

Aug 27 03:46:39.006 DEBG Delayed head block set_as_head_delay: Some(21.731066ms), imported_delay: Some(119.929934ms), observed_delay: Some(3.864596988s), block_delay: 4.006257988s, slot: 1931331, proposer_index: 24294, block_root: 0x937602c89d3143afa89088a44bdf4b4d0d760dad082abacb229495c048648a9e, service: beacon

```

## Issue Addressed

Resolves#2552

## Proposed Changes

Offers some improvement in inclusion distance calculation in the validator monitor.

When registering an attestation from a block, instead of doing `block.slot() - attesstation.data.slot()` to get the inclusion distance, we now pass the parent block slot from the beacon chain and do `parent_slot.saturating_sub(attestation.data.slot())`. This allows us to give best effort inclusion distance in scenarios where the attestation was included right after a skip slot. Note that this does not give accurate results in scenarios where the attestation was included few blocks after the skip slot.

In this case, if the attestation slot was `b1` and was included in block `b2` with a skip slot in between, we would get the inclusion delay as 0 (by ignoring the skip slot) which is the best effort inclusion delay.

```

b1 <- missed <- b2

```

Here, if the attestation slot was `b1` and was included in block `b3` with a skip slot and valid block `b2` in between, then we would get the inclusion delay as 2 instead of 1 (by ignoring the skip slot).

```

b1 <- missed <- b2 <- b3

```

A solution for the scenario 2 would be to count number of slots between included slot and attestation slot ignoring the skip slots in the beacon chain and pass the value to the validator monitor. But I'm concerned that it could potentially lead to db accesses for older blocks in extreme cases.

This PR also uses the validator monitor data for logging per epoch inclusion distance. This is useful as we won't get inclusion data in post-altair summaries.

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Issue Addressed

NA

## Proposed Changes

Implements the "union" type from the SSZ spec for `ssz`, `ssz_derive`, `tree_hash` and `tree_hash_derive` so it may be derived for `enums`:

https://github.com/ethereum/consensus-specs/blob/v1.1.0-beta.3/ssz/simple-serialize.md#union

The union type is required for the merge, since the `Transaction` type is defined as a single-variant union `Union[OpaqueTransaction]`.

### Crate Updates

This PR will (hopefully) cause CI to publish new versions for the following crates:

- `eth2_ssz_derive`: `0.2.1` -> `0.3.0`

- `eth2_ssz`: `0.3.0` -> `0.4.0`

- `eth2_ssz_types`: `0.2.0` -> `0.2.1`

- `tree_hash`: `0.3.0` -> `0.4.0`

- `tree_hash_derive`: `0.3.0` -> `0.4.0`

These these crates depend on each other, I've had to add a workspace-level `[patch]` for these crates. A follow-up PR will need to remove this patch, ones the new versions are published.

### Union Behaviors

We already had SSZ `Encode` and `TreeHash` derive for enums, however it just did a "transparent" pass-through of the inner value. Since the "union" decoding from the spec is in conflict with the transparent method, I've required that all `enum` have exactly one of the following enum-level attributes:

#### SSZ

- `#[ssz(enum_behaviour = "union")]`

- matches the spec used for the merge

- `#[ssz(enum_behaviour = "transparent")]`

- maintains existing functionality

- not supported for `Decode` (never was)

#### TreeHash

- `#[tree_hash(enum_behaviour = "union")]`

- matches the spec used for the merge

- `#[tree_hash(enum_behaviour = "transparent")]`

- maintains existing functionality

This means that we can maintain the existing transparent behaviour, but all existing users will get a compile-time error until they explicitly opt-in to being transparent.

### Legacy Option Encoding

Before this PR, we already had a union-esque encoding for `Option<T>`. However, this was with the *old* SSZ spec where the union selector was 4 bytes. During merge specification, the spec was changed to use 1 byte for the selector.

Whilst the 4-byte `Option` encoding was never used in the spec, we used it in our database. Writing a migrate script for all occurrences of `Option` in the database would be painful, especially since it's used in the `CommitteeCache`. To avoid the migrate script, I added a serde-esque `#[ssz(with = "module")]` field-level attribute to `ssz_derive` so that we can opt into the 4-byte encoding on a field-by-field basis.

The `ssz::legacy::four_byte_impl!` macro allows a one-liner to define the module required for the `#[ssz(with = "module")]` for some `Option<T> where T: Encode + Decode`.

Notably, **I have removed `Encode` and `Decode` impls for `Option`**. I've done this to force a break on downstream users. Like I mentioned, `Option` isn't used in the spec so I don't think it'll be *that* annoying. I think it's nicer than quietly having two different union implementations or quietly breaking the existing `Option` impl.

### Crate Publish Ordering

I've modified the order in which CI publishes crates to ensure that we don't publish a crate without ensuring we already published a crate that it depends upon.

## TODO

- [ ] Queue a follow-up `[patch]`-removing PR.

## Issue Addressed

NA

## Proposed Changes

Adds the ability to verify batches of aggregated/unaggregated attestations from the network.

When the `BeaconProcessor` finds there are messages in the aggregated or unaggregated attestation queues, it will first check the length of the queue:

- `== 1` verify the attestation individually.

- `>= 2` take up to 64 of those attestations and verify them in a batch.

Notably, we only perform batch verification if the queue has a backlog. We don't apply any artificial delays to attestations to try and force them into batches.

### Batching Details

To assist with implementing batches we modify `beacon_chain::attestation_verification` to have two distinct categories for attestations:

- *Indexed* attestations: those which have passed initial validation and were valid enough for us to derive an `IndexedAttestation`.

- *Verified* attestations: those attestations which were indexed *and also* passed signature verification. These are well-formed, interesting messages which were signed by validators.

The batching functions accept `n` attestations and then return `n` attestation verification `Result`s, where those `Result`s can be any combination of `Ok` or `Err`. In other words, we attempt to verify as many attestations as possible and return specific per-attestation results so peer scores can be updated, if required.

When we batch verify attestations, we first try to map all those attestations to *indexed* attestations. If any of those attestations were able to be indexed, we then perform batch BLS verification on those indexed attestations. If the batch verification succeeds, we convert them into *verified* attestations, disabling individual signature checking. If the batch fails, we convert to verified attestations with individual signature checking enabled.

Ultimately, we optimistically try to do a batch verification of attestation signatures and fall-back to individual verification if it fails. This opens an attach vector for "poisoning" the attestations and causing us to waste a batch verification. I argue that peer scoring should do a good-enough job of defending against this and the typical-case gains massively outweigh the worst-case losses.

## Additional Info

Before this PR, attestation verification took the attestations by value (instead of by reference). It turns out that this was unnecessary and, in my opinion, resulted in some undesirable ergonomics (e.g., we had to pass the attestation back in the `Err` variant to avoid clones). In this PR I've modified attestation verification so that it now takes a reference.

I refactored the `beacon_chain/tests/attestation_verification.rs` tests so they use a builder-esque "tester" struct instead of a weird macro. It made it easier for me to test individual/batch with the same set of tests and I think it was a nice tidy-up. Notably, I did this last to try and make sure my new refactors to *actual* production code would pass under the existing test suite.

## Issue Addressed

Closes#1891Closes#1784

## Proposed Changes

Implement checkpoint sync for Lighthouse, enabling it to start from a weak subjectivity checkpoint.

## Additional Info

- [x] Return unavailable status for out-of-range blocks requested by peers (#2561)

- [x] Implement sync daemon for fetching historical blocks (#2561)

- [x] Verify chain hashes (either in `historical_blocks.rs` or the calling module)

- [x] Consistency check for initial block + state

- [x] Fetch the initial state and block from a beacon node HTTP endpoint

- [x] Don't crash fetching beacon states by slot from the API

- [x] Background service for state reconstruction, triggered by CLI flag or API call.

Considered out of scope for this PR:

- Drop the requirement to provide the `--checkpoint-block` (this would require some pretty heavy refactoring of block verification)

Co-authored-by: Diva M <divma@protonmail.com>

In previous network updates we have made our libp2p connections more lean by limiting the maximum number of connections a lighthouse node will accept before libp2p rejects new connections.

However, we still maintain the logic that at maximum connections, we try to dial extra peers if they are needed by a validator client to publish messages on a specific subnet. The dials typically result in failures due the libp2p connection limits.

This PR adds an extra factor, `PRIORITY_PEER_EXCESS` which sets aside a new allocation of peers we are able to dial in case we need these peers for the VC client. This allocation sits along side the excess peer (which allows extra incoming peers on top of our target peer limit).

The drawback here, is that libp2p now allows extra peers to connect to us (beyond the standard peer limit) which the peer manager should subsequently reject.

This PR in general improves the handling around peer banning.

Specifically there were issues when multiple peers under a single IP connected to us after we banned the IP for poor behaviour.

This PR should now handle these peers gracefully as well as make some improvements around how we previously disconnected and banned peers.

The logic now goes as follows:

- Once a peer gets banned, its gets registered with its known IP addresses

- Once enough banned peers exist under a single IP that IP is banned

- We retain connections with existing peers under this IP

- Any new connections under this IP are rejected

## Issue Addressed

N/A

## Proposed Changes

Add a fork_digest to `ForkContext` only if it is set in the config.

Reject gossip messages on post fork topics before the fork happens.

Edit: Instead of rejecting gossip messages on post fork topics, we now subscribe to post fork topics 2 slots before the fork.

Co-authored-by: Age Manning <Age@AgeManning.com>

# Description

A few changes have been made to discovery. In particular a custom re-write of an LRU cache which previously was read/write O(N) for all our sessions ~5k, to a more reasonable hashmap-style O(1).

Further there has been reported issues in the current discv5, so added error handling to help identify the issue has been added.

The identify network debug logs can get quite noisy and are unnecessary to print on every request/response.

This PR reduces debug noise by only printing messages for identify messages that offer some new information.

## Proposed Changes

Cache the total active balance for the current epoch in the `BeaconState`. Computing this value takes around 1ms, and this was negatively impacting block processing times on Prater, particularly when reconstructing states.

With a large number of attestations in each block, I saw the `process_attestations` function taking 150ms, which means that reconstructing hot states can take up to 4.65s (31 * 150ms), and reconstructing freezer states can take up to 307s (2047 * 150ms).

I opted to add the cache to the beacon state rather than computing the total active balance at the start of state processing and threading it through. Although this would be simpler in a way, it would waste time, particularly during block replay, as the total active balance doesn't change for the duration of an epoch. So we save ~32ms for hot states, and up to 8.1s for freezer states (using `--slots-per-restore-point 8192`).

## Issue Addressed

Related to: #2259

Made an attempt at all the necessary updates here to publish the crates to crates.io. I incremented the minor versions on all the crates that have been previously published. We still might run into some issues as we try to publish because I'm not able to test this out but I think it's a good starting point.

## Proposed Changes

- Add description and license to `ssz_types` and `serde_util`

- rename `serde_util` to `eth2_serde_util`

- increment minor versions

- remove path dependencies

- remove patch dependencies

## Additional Info

Crates published:

- [x] `tree_hash` -- need to publish `tree_hash_derive` and `eth2_hashing` first

- [x] `eth2_ssz_types` -- need to publish `eth2_serde_util` first

- [x] `tree_hash_derive`

- [x] `eth2_ssz`

- [x] `eth2_ssz_derive`

- [x] `eth2_serde_util`

- [x] `eth2_hashing`

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

N/A

## Proposed Changes

Add functionality in the validator monitor to provide sync committee related metrics for monitored validators.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

NA

## Proposed Changes

Missed head votes on attestations is a well-known issue. The primary cause is a block getting set as the head *after* the attestation deadline.

This PR aims to shorten the overall time between "block received" and "block set as head" by:

1. Persisting the head and fork choice *after* setting the canonical head

- Informal measurements show this takes ~200ms

1. Pruning the op pool *after* setting the canonical head.

1. No longer persisting the op pool to disk during `BeaconChain::fork_choice`

- Informal measurements show this can take up to 1.2s.

I also add some metrics to help measure the effect of these changes.

Persistence changes like this run the risk of breaking assumptions downstream. However, I have considered these risks and I think we're fine here. I will describe my reasoning for each change.

## Reasoning

### Change 1: Persisting the head and fork choice *after* setting the canonical head

For (1), although the function is called `persist_head_and_fork_choice`, it only persists:

- Fork choice

- Head tracker

- Genesis block root

Since `BeaconChain::fork_choice_internal` does not modify these values between the original time we were persisting it and the current time, I assert that the change I've made is non-substantial in terms of what ends up on-disk. There's the possibility that some *other* thread has modified fork choice in the extra time we've given it, but that's totally fine.

Since the only time we *read* those values from disk is during startup, I assert that this has no impact during runtime.

### Change 2: Pruning the op pool after setting the canonical head

Similar to the argument above, we don't modify the op pool during `BeaconChain::fork_choice_internal` so it shouldn't matter when we prune. This change should be non-substantial.

### Change 3: No longer persisting the op pool to disk during `BeaconChain::fork_choice`

This change *is* substantial. With the proposed changes, we'll only be persisting the op pool to disk when we shut down cleanly (i.e., the `BeaconChain` gets dropped). This means we'll save disk IO and time during usual operation, but a `kill -9` or similar "crash" will probably result in an out-of-date op pool when we reboot. An out-of-date op pool can only have an impact when producing blocks or aggregate attestations/sync committees.

I think it's pretty reasonable that a crash might result in an out-of-date op pool, since:

- Crashes are fairly rare. Practically the only time I see LH suffer a full crash is when the OOM killer shows up, and that's a very serious event.

- It's generally quite rare to produce a block/aggregate immediately after a reboot. Just a few slots of runtime is probably enough to have a decent-enough op pool again.

## Additional Info

Credits to @macladson for the timings referenced here.

## Issue Addressed

Resolves#2033

## Proposed Changes

Adds a flag to enable shutting down beacon node right after sync is completed.

## Additional Info

Will need modification after weak subjectivity sync is enabled to change definition of a fully synced node.

## Issue Addressed

Closes#2526

## Proposed Changes

If the head block fails to decode on start up, do two things:

1. Revert all blocks between the head and the most recent hard fork (to `fork_slot - 1`).

2. Reset fork choice so that it contains the new head, and all blocks back to the new head's finalized checkpoint.

## Additional Info

I tweaked some of the beacon chain test harness stuff in order to make it generic enough to test with a non-zero slot clock on start-up. In the process I consolidated all the various `new_` methods into a single generic one which will hopefully serve all future uses 🤞

## Issue Addressed

Resolves#2114

Swapped out the ctrlc crate for tokio signals to hook register handlers for SIGPIPE and SIGHUP along with SIGTERM and SIGINT.

## Proposed Changes

- Swap out the ctrlc crate for tokio signals for unix signal handing

- Register signals for SIGPIPE and SHIGUP that trigger the same shutdown procedure as SIGTERM and SIGINT

## Additional Info

I tested these changes against the examples in the original issue and noticed some interesting behavior on my machine. When running `lighthouse bn --network pyrmont |& tee -a pyrmont_bn.log` or `lighthouse bn --network pyrmont 2>&1 | tee -a pyrmont_bn.log` none of the above signals are sent to the lighthouse program in a way I was able to observe.

The only time it seems that the signal gets sent to the lighthouse program is if there is no redirection of stderr to stdout. I'm not as familiar with the details of how unix signals work in linux with a redirect like that so I'm not sure if this is a bug in the program or expected behavior.

Signals are correctly received without the redirection and if the above signals are sent directly to the program with something like `kill`.

## Issue Addressed

Resolves#2487

## Proposed Changes

Logs a message once in every invocation of `Eth1Service::update` method if the primary endpoint is unavailable for some reason.

e.g.

```log

Aug 03 00:09:53.517 WARN Error connecting to eth1 node endpoint action: trying fallbacks, endpoint: http://localhost:8545/, service: eth1_rpc

Aug 03 00:09:56.959 INFO Fetched data from fallback fallback_number: 1, service: eth1_rpc

```

The main aim of this PR is to have an accompanying message to the "action: trying fallbacks" error message that is returned when checking the endpoint for liveness. This is mainly to indicate to the user that the fallback was live and reachable.

## Additional info

This PR is not meant to be a catch all for all cases where the primary endpoint failed. For instance, this won't log anything if the primary node was working fine during endpoint liveness checking and failed during deposit/block fetching. This is done intentionally to reduce number of logs while initial deposit/block sync and to avoid more complicated logic.

## Issue Addressed

NA

## Proposed Changes

This PR expands the time that entries exist in the gossip-sub duplicate cache. Recent investigations found that this cache is one slot (12s) shorter than the period for which an attestation is permitted to propagate on the gossip network.

Before #2540, this was causing peers to be unnecessarily down-scored for sending old attestations. Although that issue has been fixed, the duplicate cache time is increased here to avoid such messages from getting any further up the networking stack then required.

## Additional Info

NA

## Issue Addressed

NA

## Proposed Changes

A Discord user presented logs which indicated a drop in their peer count caused by a variety of peers sending attestations where we'd already seen an attestation for that validator. It's presently unclear how this case came about, but during our investigation I noticed that we are down-voting peers for sending such attestations.

There are three scenarios where we may receive duplicate unagg. attestations from the same validator:

1. The validator is committing a slashable offense.

2. The gossipsub message-deduping functionality is not working as expected.

3. We received the message via the HTTP prior to seeing it via gossip.

Scenario (1) would be so costly for an attacker that I don't think we need to add DoS protection for it.

Scenario (2) seems feasible. Our "seen message" caches in gossipsub might fill up/expire and let through these duplicates. There are also cases involving message ID mismatches with the other peers. In both these cases, I don't think we should be doing 1 attestation == -1 point down-voting.

Scenario (3) is not necessarily a fault of the peer and we shouldn't down-score them for it.

## Additional Info

NA

Due to the altair fork, in principle we can now subscribe to up to 148 topics. This bypasses our original limit and we can end up rejecting subscriptions.

This PR increases the limit to account for the fork.

## Issue Addressed

N/A

## Proposed Changes

Adds a cli option to disable packet filter in `lighthouse bootnode`. This is useful in running local testnets as the bootnode bans requests from the same ip(localhost) if the packet filter is enabled.

## Issue Addressed

Resolves#2524

## Proposed Changes

- Return all known forks in the `/config/fork_schedule`, previously returned only the head of the chain's fork.

- Deleted the `StateId::head` method because it was only previously used in this endpoint.

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

N/A

## Proposed Changes

Refactor discovery queries such that only `QueryType::Subnet` queries are queued for grouping. `QueryType::FindPeers` is always made regardless of the number of active `Subnet` queries (max = 2). This prevents `FindPeers` queries from getting starved if `Subnet` queries start queuing up.

Also removes `GroupedQueryType` struct and use `QueryType` for all queuing and processing of discovery requests.

## Additional Info

Currently, no distinction is made between subnet discovery queries for attestation and sync committee subnets when grouping queries. Potentially we could prioritise attestation queries over sync committee queries in the future.

## Issue Addressed

NA

## Proposed Changes

- Version bump

- Increase queue sizes for aggregated attestations and re-queued attestations.

## Additional Info

NA

## Issue Addressed

Which issue # does this PR address?

## Proposed Changes

- Add a counter metric to log when a block is received late from gossip.

- Also push a `DEBG` log for the above condition.

- Use Debug (`?`) instead of Display (`%`) for a bunch of logs in the beacon processor, so we don't have to deal with concatenated block roots.

- Add new ERRO and CRIT to HTTP API to alert users when they're publishing late blocks.

## Additional Info

NA

## Proposed Changes

* Consolidate Tokio versions: everything now uses the latest v1.10.0, no more `tokio-compat`.

* Many semver-compatible changes via `cargo update`. Notably this upgrades from the yanked v0.8.0 version of crossbeam-deque which is present in v1.5.0-rc.0

* Many semver incompatible upgrades via `cargo upgrades` and `cargo upgrade --workspace pkg_name`. Notable ommissions:

- Prometheus, to be handled separately: https://github.com/sigp/lighthouse/issues/2485

- `rand`, `rand_xorshift`: the libsecp256k1 package requires 0.7.x, so we'll stick with that for now

- `ethereum-types` is pinned at 0.11.0 because that's what `web3` is using and it seems nice to have just a single version

## Additional Info

We still have two versions of `libp2p-core` due to `discv5` depending on the v0.29.0 release rather than `master`. AFAIK it should be OK to release in this state (cc @AgeManning )

## Issue Addressed

NA

## Proposed Changes

- Bump to `v1.5.0-rc.0`.

- Increase attestation reprocessing queue size (I saw this filling up on Prater).

- Reduce error log for full attn reprocessing queue to warn.

## TODO

- [x] Manual testing

- [x] Resolve https://github.com/sigp/lighthouse/pull/2493

- [x] Include https://github.com/sigp/lighthouse/pull/2501

## Issue Addressed

- Resolves#2457

- Resolves#2443

## Proposed Changes

Target the (presently unreleased) head of `libp2p/rust-libp2p:master` in order to obtain the fix from https://github.com/libp2p/rust-libp2p/pull/2175.

Additionally:

- `libsecp256k1` needed to be upgraded to satisfy the new version of `libp2p`.

- There were also a handful of minor changes to `eth2_libp2p` to suit some interface changes.

- Two `cargo audit --ignore` flags were remove due to libp2p upgrades.

## Additional Info

NA

## Issue Addressed

NA

## Proposed Changes

When testing our (not-yet-released) Doppelganger implementation, I noticed that we aren't detecting attestations included in blocks (only those on the gossip network).

This is because during [block processing](e8c0d1f19b/beacon_node/beacon_chain/src/beacon_chain.rs (L2168)) we only update the `observed_attestations` cache with each attestation, but not the `observed_attesters` cache. This is the correct behaviour when we consider the [p2p spec](https://github.com/ethereum/eth2.0-specs/blob/v1.0.1/specs/phase0/p2p-interface.md):

> [IGNORE] There has been no other valid attestation seen on an attestation subnet that has an identical attestation.data.target.epoch and participating validator index.

We're doing the right thing here and still allowing attestations on gossip that we've seen in a block. However, this doesn't work so nicely for Doppelganger.

To resolve this, I've taken the following steps:

- Add a `observed_block_attesters` cache.

- Rename `observed_attesters` to `observed_gossip_attesters`.

## TODO

- [x] Add a test to ensure a validator that's been seen in a block attestation (but not a gossip attestation) returns `true` for `BeaconChain::validator_seen_at_epoch`.

- [x] Add a test to ensure `observed_block_attesters` isn't polluted via gossip attestations and vice versa.

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Proposed Changes

* Implement the validator client and HTTP API changes necessary to support Altair

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

Resolves#2069

## Proposed Changes

- Adds a `--doppelganger-detection` flag

- Adds a `lighthouse/seen_validators` endpoint, which will make it so the lighthouse VC is not interopable with other client beacon nodes if the `--doppelganger-detection` flag is used, but hopefully this will become standardized. Relevant Eth2 API repo issue: https://github.com/ethereum/eth2.0-APIs/issues/64

- If the `--doppelganger-detection` flag is used, the VC will wait until the beacon node is synced, and then wait an additional 2 epochs. The reason for this is to make sure the beacon node is able to subscribe to the subnets our validators should be attesting on. I think an alternative would be to have the beacon node subscribe to all subnets for 2+ epochs on startup by default.

## Additional Info

I'd like to add tests and would appreciate feedback.

TODO: handle validators started via the API, potentially make this default behavior

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

N/A

## Proposed Changes

- Removing a bunch of unnecessary references

- Updated `Error::VariantError` to `Error::Variant`

- There were additional enum variant lints that I ignored, because I thought our variant names were fine

- removed `MonitoredValidator`'s `pubkey` field, because I couldn't find it used anywhere. It looks like we just use the string version of the pubkey (the `id` field) if there is no index

## Additional Info

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

- Resolves#2169

## Proposed Changes

Adds the `AttesterCache` to allow validators to produce attestations for older slots. Presently, some arbitrary restrictions can force validators to receive an error when attesting to a slot earlier than the present one. This can cause attestation misses when there is excessive load on the validator client or time sync issues between the VC and BN.

## Additional Info

NA

## Proposed Changes

Add the `sync_aggregate` from `BeaconBlock` to the bulk signature verifier for blocks. This necessitates a new signature set constructor for the sync aggregate, which is different from the others due to the use of [`eth2_fast_aggregate_verify`](https://github.com/ethereum/eth2.0-specs/blob/v1.1.0-alpha.7/specs/altair/bls.md#eth2_fast_aggregate_verify) for sync aggregates, per [`process_sync_aggregate`](https://github.com/ethereum/eth2.0-specs/blob/v1.1.0-alpha.7/specs/altair/beacon-chain.md#sync-aggregate-processing). I made the choice to return an optional signature set, with `None` representing the case where the signature is valid on account of being the point at infinity (requires no further checking).

To "dogfood" the changes and prevent duplication, the consensus logic now uses the signature set approach as well whenever it is required to verify signatures (which should only be in testing AFAIK). The EF tests pass with the code as it exists currently, but failed before I adapted the `eth2_fast_aggregate_verify` changes (which is good).

As a result of this change Altair block processing should be a little faster, and importantly, we will no longer accidentally verify signatures when replaying blocks, e.g. when replaying blocks from the database.

## Issue Addressed

NA

## Proposed Changes

This PR addresses two things:

1. Allows the `ValidatorMonitor` to work with Altair states.

1. Optimizes `altair::process_epoch` (see [code](https://github.com/paulhauner/lighthouse/blob/participation-cache/consensus/state_processing/src/per_epoch_processing/altair/participation_cache.rs) for description)

## Breaking Changes

The breaking changes in this PR revolve around one premise: