While testing some code on Windows, I ran into a failure when running `clippy` (via `make lint`):

```
error: this expression borrows a reference (`&str`) that is immediately dereferenced by the compiler
   --> common/filesystem/src/lib.rs:105:43
    |
105 |         let mut acl = ACL::from_file_path(&path_str, false).map_err(Error::UnableToRetrieveACL)?;
    |                                           ^^^^^^^^^ help: change this to: `path_str`
    |
    = note: `-D clippy::needless-borrow` implied by `-D warnings`
    = help: for further information visit https://rust-lang.github.io/rust-clippy/master/index.html#needless_borrow

error: could not compile `filesystem` due to previous error
```

## Proposed Changes

Remove the unnecessary borrow as suggested.
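For reviewers unfamiliar with the lint, here is a minimal sketch of the pattern it flags. `path_len` and `measure` are hypothetical stand-ins, not the real `ACL::from_file_path` call site.

```rust
/// Stand-in for an API that takes a string slice
/// (hypothetical; not the real `ACL::from_file_path`).
fn path_len(path: &str) -> usize {
    path.len()
}

/// Calls `path_len` twice: once with the redundant borrow, once without.
fn measure(path_str: &str) -> (usize, usize) {
    // `path_str` is already a `&str`, so `&path_str` is a `&&str`.
    // The compiler silently dereferences it again, which is what
    // `clippy::needless_borrow` reports.
    #[allow(clippy::needless_borrow)]
    let with_borrow = path_len(&path_str);

    // The suggested fix: pass the existing reference directly.
    let without_borrow = path_len(path_str);

    (with_borrow, without_borrow)
}
```

Both calls behave identically at runtime; the lint is purely about the redundant `&`.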

## Additional Info

Since we only run `clippy` in CI on Ubuntu, I believe we don't have any way (in CI) to detect these Windows-specific lint errors (either from new code, or from linting changes in new Rust versions).

This is because code marked as `#[cfg(windows)]` is not checked on `unix` systems and vice versa.
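A small sketch of why this happens, using a hypothetical `platform_family` function: items behind a `cfg` attribute that does not match the target are removed before type checking, so neither `rustc` nor `clippy` ever inspects them.

```rust
/// Only compiled on Windows targets. On any other target this item is
/// stripped before type checking, so lints never run over its body.
#[cfg(windows)]
fn platform_family() -> &'static str {
    "windows"
}

/// Only compiled on Unix-like targets (e.g. the Ubuntu CI runner).
#[cfg(unix)]
fn platform_family() -> &'static str {
    "unix"
}

/// Fallback so the sketch still builds on targets that are neither.
#[cfg(not(any(windows, unix)))]
fn platform_family() -> &'static str {
    "other"
}
```

On a Unix runner only the second definition exists, so a lint error in the first would go unnoticed.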

I'm conscious that our CI runs are already taking a long time, and that adding a new Windows `clippy` run would add a non-negligible amount of time to the runs (not sure if this topic has already been discussed), but it is something to be aware of.

## Extra Note

I don't think this is the case, but it might be worth someone else running `make lint` on their Windows machines to eliminate the possibility that this is an error specific to my setup.

## Proposed Changes

* Add the `Eth-Consensus-Version` header to the HTTP API for the block and state endpoints. This is part of the v2.1.0 API that was recently released: https://github.com/ethereum/beacon-APIs/pull/170

* Add tests for the above. I refactored the `eth2` crate's helper functions to make this more straight-forward, and introduced some new mixin traits that I think greatly improve readability and flexibility.

* Add a new `map_with_fork!` macro which is useful for decoding a superstruct type without naming all its variants. It is now used for SSZ-decoding `BeaconBlock` and `BeaconState`, and for JSON-decoding `SignedBeaconBlock` in the API.

## Additional Info

The `map_with_fork!` changes will conflict with the Merge changes, but when resolving the conflict the changes from this branch should be preferred (it is no longer necessary to enumerate every fork). The merge fork _will_ need to be added to `map_fork_name_with`.
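To illustrate the idea only, here is a simplified sketch of a macro that picks an enum variant from a fork name and lets type inference choose the matching decoder. Everything in it (`map_fork_sketch!`, `ForkName`, `FromBytes`, `Block*`) is hypothetical and much smaller than the real superstruct types; the actual macro signatures are not shown in this description.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
enum ForkName {
    Base,
    Altair,
}

/// Hypothetical stand-in for an SSZ `Decode` trait.
trait FromBytes: Sized {
    fn from_bytes(bytes: &[u8]) -> Option<Self>;
}

#[derive(Debug, PartialEq)]
struct BlockBase {
    slot: u8,
}

#[derive(Debug, PartialEq)]
struct BlockAltair {
    slot: u8,
    sync_bits: u8,
}

impl FromBytes for BlockBase {
    fn from_bytes(bytes: &[u8]) -> Option<Self> {
        match bytes {
            [slot] => Some(BlockBase { slot: *slot }),
            _ => None,
        }
    }
}

impl FromBytes for BlockAltair {
    fn from_bytes(bytes: &[u8]) -> Option<Self> {
        match bytes {
            [slot, sync_bits] => Some(BlockAltair { slot: *slot, sync_bits: *sync_bits }),
            _ => None,
        }
    }
}

#[derive(Debug, PartialEq)]
enum Block {
    Base(BlockBase),
    Altair(BlockAltair),
}

/// The body expression is expanded once per arm; in each arm the expected
/// type is that variant's inner type, so the right `FromBytes` impl is
/// inferred. A new fork only needs one new arm here.
macro_rules! map_fork_sketch {
    ($fork:expr, $enum_name:ident, $body:expr) => {
        match $fork {
            ForkName::Base => $enum_name::Base($body),
            ForkName::Altair => $enum_name::Altair($body),
        }
    };
}

fn decode_block(fork: ForkName, bytes: &[u8]) -> Option<Block> {
    Some(map_fork_sketch!(fork, Block, FromBytes::from_bytes(bytes)?))
}
```

The call site in `decode_block` never names a variant, which is why conflicts with branches that add forks should be limited to the macro itself.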

I have been in the process of debugging libp2p tasks as there is something locking our executor.

This PR adds a metric that tracks all tasks within Lighthouse, allowing us to identify sections of Lighthouse code that may be taking longer than normal to process.

## Issue Addressed

When compiling with Rust 1.56.0 the compiler generates 3 instances of this warning:

```
warning: trailing semicolon in macro used in expression position
   --> common/eth2_network_config/src/lib.rs:181:24
    |
181 |                     })?;
    |                        ^
...
195 |         let deposit_contract_deploy_block = load_from_file!(DEPLOY_BLOCK_FILE);
    |                                             ---------------------------------- in this macro invocation
    |
    = note: `#[warn(semicolon_in_expressions_from_macros)]` on by default
    = warning: this was previously accepted by the compiler but is being phased out; it will become a hard error in a future release!
    = note: for more information, see issue #79813 <https://github.com/rust-lang/rust/issues/79813>
    = note: this warning originates in the macro `load_from_file` (in Nightly builds, run with -Z macro-backtrace for more info)
```

This warning is completely harmless, but will be visible to users compiling Lighthouse v2.0.1 (or earlier) with Rust 1.56.0 (to be released October 21st). It is **completely safe** to ignore this warning; it's just a superficial change to Rust's syntax.

## Proposed Changes

This PR removes the semi-colon as recommended, and fixes the new Clippy lints from 1.56.0
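A minimal sketch of the fixed shape, using a hypothetical `parse_block_number!` macro in place of `load_from_file!` (it parses a string rather than reading a file):

```rust
/// Hypothetical stand-in for `load_from_file!`.
macro_rules! parse_block_number {
    ($raw:expr) => {
        // No trailing semicolon after `?`: the macro body is a single
        // expression, so it stays valid in `let x = parse_block_number!(..);`
        // even once the lint becomes a hard error.
        $raw.trim().parse::<u64>().map_err(|e| e.to_string())?
    };
}

fn deploy_block(raw: &str) -> Result<u64, String> {
    // The macro is used in expression position, as in the warning above.
    let block = parse_block_number!(raw);
    Ok(block)
}
```

Writing `...?;` inside the macro body is what triggers `semicolon_in_expressions_from_macros`.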

## Description

The `eth2_libp2p` crate was originally named and designed to incorporate a simple libp2p integration into Lighthouse. Since its origins the crate's purpose has expanded dramatically. It now houses a lot more sophistication that is specific to Lighthouse and is no longer just a libp2p integration.

As of this writing it currently houses the following high-level lighthouse-specific logic:

- Lighthouse's implementation of the eth2 RPC protocol and specific encodings/decodings

- Integration and handling of ENRs with respect to libp2p and eth2

- Lighthouse's discovery logic, its integration with discv5 and logic about searching and handling peers.

- Lighthouse's peer manager - This is a large module handling various aspects of Lighthouse's network, such as peer scoring, handling pings and metadata, connection maintenance and recording, etc.

- Lighthouse's peer database - This is a collection of information stored for each individual peer which is specific to lighthouse. We store connection state, sync state, last seen ips and scores etc. The data stored for each peer is designed for various elements of the lighthouse code base such as syncing and the http api.

- Gossipsub scoring - This stores a collection of gossipsub 1.1 scoring mechanisms that are continuously analysed and updated based on the ethereum 2 networks and how Lighthouse performs on these networks.

- Lighthouse specific types for managing gossipsub topics, sync status and ENR fields

- Lighthouse's network HTTP API metrics - A collection of metrics for lighthouse network monitoring

- Lighthouse's custom configuration of all networking protocols, RPC, gossipsub, discovery, identify and libp2p.

Therefore it makes sense to rename the crate to be more akin to its current purposes, simply that it manages the majority of Lighthouse's network stack. This PR renames this crate to `lighthouse_network`

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

NA

## Proposed Changes

- Update versions to `v2.0.1` in anticipation for a release early next week.

- Add `--ignore` to `cargo audit`. See #2727.

## Additional Info

NA

Currently, the beacon node has no ability to serve the HTTP API over TLS.

Adding this functionality would be helpful for certain use cases, such as when you need a validator client to connect to a backup beacon node which is outside your local network, and the use of an SSH tunnel or reverse proxy would be inappropriate.

## Proposed Changes

- Add three new CLI flags to the beacon node

- `--http-enable-tls`: enables TLS

- `--http-tls-cert`: to specify the path to the certificate file

- `--http-tls-key`: to specify the path to the key file

- Update the HTTP API to optionally use `warp`'s [`TlsServer`](https://docs.rs/warp/0.3.1/warp/struct.TlsServer.html) depending on the presence of the `--http-enable-tls` flag

- Update tests and docs

- Use a custom branch for `warp` to ensure proper error handling
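As a rough sketch of how the three flags could fit together (this is not the code in this PR): `TlsConfig` and `tls_config` are hypothetical names, and requiring both paths when TLS is enabled is an assumption made for illustration.

```rust
use std::path::PathBuf;

/// Hypothetical container for the two file paths.
#[derive(Debug, PartialEq)]
struct TlsConfig {
    cert: PathBuf,
    key: PathBuf,
}

/// Returns `Ok(None)` for plain HTTP, `Ok(Some(..))` when TLS is enabled
/// and both paths were supplied, and an error otherwise.
/// Assumption: cert and key are mandatory once `--http-enable-tls` is set.
fn tls_config(
    enable_tls: bool,
    cert: Option<PathBuf>,
    key: Option<PathBuf>,
) -> Result<Option<TlsConfig>, String> {
    if !enable_tls {
        return Ok(None);
    }
    let cert = cert.ok_or("--http-tls-cert is required with --http-enable-tls")?;
    let key = key.ok_or("--http-tls-key is required with --http-enable-tls")?;
    Ok(Some(TlsConfig { cert, key }))
}
```

The server start-up code can then branch on the returned `Option` to choose between the plain and TLS servers.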

## Additional Info

Serving the API over TLS should currently be considered experimental. The reason for this is that it uses code from an [unmerged PR](https://github.com/seanmonstar/warp/pull/717). This commit provides the `try_bind_with_graceful_shutdown` method to `warp`, which is helpful for controlling error flow when the TLS configuration is invalid (cert/key files don't exist, incorrect permissions, etc).

I've implemented the same code in my [branch here](https://github.com/macladson/warp/tree/tls).

Once the code has been reviewed and merged upstream into `warp`, we can remove the dependency on my branch and the feature can be considered more stable.

Currently, the private key file must not be password-protected in order to be read into Lighthouse.

## Issue Addressed

Fix `cargo audit` failures on `unstable`

Closes #2698

## Proposed Changes

The main culprit is `nix`, which is vulnerable for versions below v0.23.0. We can't get by with a straight-forward `cargo update` because `psutil` depends on an old version of `nix` (cf. https://github.com/rust-psutil/rust-psutil/pull/93). Hence I've temporarily forked `psutil` under the `sigp` org, where I've included the update to `nix` v0.23.0.

Additionally, I took the chance to update the `time` dependency to v0.3, which removed a bunch of stale deps including `stdweb` which is no longer maintained. Lighthouse only uses the `time` crate in the notifier to do some pretty printing, and so wasn't affected by any of the breaking changes in v0.3 ([changelog here](https://github.com/time-rs/time/blob/main/CHANGELOG.md#030-2021-07-30)).

## Proposed Changes

While investigating memory usage I noticed that the malloc metrics were going negative once they passed 2GiB. This is because the underlying `mallinfo` function returns an `i32`, and we were casting it straight to an `i64`, preserving the sign.

The long-term fix will be to move to `mallinfo2`, but it's still not yet widely available.
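The sign problem can be reproduced in isolation. The sketch below shows the direct cast next to one way of avoiding it (reinterpreting the bits as `u32` first); the function names are hypothetical, and the second function is an assumed illustration rather than a copy of the diff.

```rust
/// Direct widening: sign-extends, so a 32-bit counter that has wrapped
/// past 2 GiB shows up as a negative `i64`.
fn widen_sign_extended(raw: i32) -> i64 {
    i64::from(raw)
}

/// Reinterpret the bits as unsigned before widening, so values between
/// 2 GiB and 4 GiB are reported as positive numbers.
fn widen_as_unsigned(raw: i32) -> i64 {
    i64::from(raw as u32)
}
```

Values above 4GiB still wrap in the 32-bit field itself, which is why `mallinfo2` is the real fix.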

## Issue Addressed

Fix #2585

## Proposed Changes

Provide a canonical version of `test_logger` that can be used throughout Lighthouse.

## Additional Info

This allows tests to conditionally emit logging data by adding `test_logger` as the default logger. Then, when executing `cargo test --features logging/test_logger`, log output will be visible:

    wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)
    $ cargo test --features logging/test_logger
    Finished test [unoptimized + debuginfo] target(s) in 0.02s
    Running unittests (target/debug/deps/test_logger-e20115db6a5e3714)

    running 1 test
    Sep 10 12:53:45.212 INFO hi, module: test_logger:8
    test tests::test_fn_with_logging ... ok

    test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

    Doc-tests test-logger

    running 0 tests

    test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Or, in normal scenarios where logging isn't needed, when executing `cargo test` the log output will not be visible:

    wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)
    $ cargo test
    Finished test [unoptimized + debuginfo] target(s) in 0.02s
    Running unittests (target/debug/deps/test_logger-02e02f8d41e8cf8a)

    running 1 test
    test tests::test_fn_with_logging ... ok

    test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

    Doc-tests test-logger

    running 0 tests

    test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

## Proposed Changes

Cut the first release candidate for v2.0.0, in preparation for testing and release this week

## Additional Info

Builds on #2632, which should either be merged first or in the same batch

## Issue Addressed

Closes #2528

## Proposed Changes

- Add `BlockTimesCache` to provide block timing information to `BeaconChain`. This allows additional metrics to be calculated for blocks that are set as head too late.

- Thread the `seen_timestamp` of blocks received from RPC responses (except blocks from syncing) through to the sync manager, similar to what is done for blocks from gossip.

## Additional Info

This provides the following additional metrics:

- `BEACON_BLOCK_OBSERVED_SLOT_START_DELAY_TIME`

- The delay between the start of the slot and when the block was first observed.

- `BEACON_BLOCK_IMPORTED_OBSERVED_DELAY_TIME`

- The delay between when the block was first observed and when the block was imported.

- `BEACON_BLOCK_HEAD_IMPORTED_DELAY_TIME`

- The delay between when the block was imported and when the block was set as head.

The metric `BEACON_BLOCK_IMPORTED_SLOT_START_DELAY_TIME` was removed.

A log is produced when a block is set as head too late, e.g.:

```

Aug 27 03:46:39.006 DEBG Delayed head block set_as_head_delay: Some(21.731066ms), imported_delay: Some(119.929934ms), observed_delay: Some(3.864596988s), block_delay: 4.006257988s, slot: 1931331, proposer_index: 24294, block_root: 0x937602c89d3143afa89088a44bdf4b4d0d760dad082abacb229495c048648a9e, service: beacon

```
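The three delays partition the time from slot start to head, which is why they sum to `block_delay` in the log line above. A minimal sketch of that arithmetic, with hypothetical struct and method names (this is not the `BlockTimesCache` API):

```rust
use std::time::Duration;

/// Timestamps for one block, measured from a common origin
/// (hypothetical struct for illustration).
struct BlockTimestamps {
    slot_start: Duration,
    observed: Duration,
    imported: Duration,
    set_as_head: Duration,
}

#[derive(Debug, PartialEq)]
struct BlockDelays {
    /// Slot start -> first observed.
    observed: Duration,
    /// First observed -> imported.
    imported: Duration,
    /// Imported -> set as head.
    set_as_head: Duration,
}

impl BlockDelays {
    /// Returns `None` if the timestamps are out of order.
    fn from_timestamps(t: &BlockTimestamps) -> Option<Self> {
        Some(Self {
            observed: t.observed.checked_sub(t.slot_start)?,
            imported: t.imported.checked_sub(t.observed)?,
            set_as_head: t.set_as_head.checked_sub(t.imported)?,
        })
    }

    /// Total delay from slot start to the block becoming head.
    fn total(&self) -> Duration {
        self.observed + self.imported + self.set_as_head
    }
}
```

With the values from the example log, 3.864596988s + 119.929934ms + 21.731066ms gives the reported 4.006257988s.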

## Issue Addressed

NA

## Proposed Changes

As `cargo audit` astutely pointed out, the version of `zeroize_derive` we were using had a vulnerability:

```
Crate:         zeroize_derive
Version:       1.1.0
Title:         `#[zeroize(drop)]` doesn't implement `Drop` for `enum`s
Date:          2021-09-24
ID:            RUSTSEC-2021-0115
URL:           https://rustsec.org/advisories/RUSTSEC-2021-0115
Solution:      Upgrade to >=1.2.0
```

This PR updates `zeroize` and `zeroize_derive` to appease `cargo audit`.

`tiny-bip39` was also updated to allow compilation.

## Additional Info

I don't believe this vulnerability actually affected the Lighthouse code-base directly. However, `tiny-bip39` may have been affected which may have resulted in some uncleaned memory in Lighthouse. Whilst this is not ideal, it's not a major issue. Zeroization is a nice-to-have since it only protects from sophisticated attacks or attackers that already have a high level of access.

## Issue Addressed

NA

## Proposed Changes

Implements the "union" type from the SSZ spec for `ssz`, `ssz_derive`, `tree_hash` and `tree_hash_derive` so it may be derived for `enums`:

https://github.com/ethereum/consensus-specs/blob/v1.1.0-beta.3/ssz/simple-serialize.md#union

The union type is required for the merge, since the `Transaction` type is defined as a single-variant union `Union[OpaqueTransaction]`.

### Crate Updates

This PR will (hopefully) cause CI to publish new versions for the following crates:

- `eth2_ssz_derive`: `0.2.1` -> `0.3.0`

- `eth2_ssz`: `0.3.0` -> `0.4.0`

- `eth2_ssz_types`: `0.2.0` -> `0.2.1`

- `tree_hash`: `0.3.0` -> `0.4.0`

- `tree_hash_derive`: `0.3.0` -> `0.4.0`

Since these crates depend on each other, I've had to add a workspace-level `[patch]` for these crates. A follow-up PR will need to remove this patch once the new versions are published.

### Union Behaviors

We already had SSZ `Encode` and `TreeHash` derive for enums, however it just did a "transparent" pass-through of the inner value. Since the "union" decoding from the spec is in conflict with the transparent method, I've required that all `enum` have exactly one of the following enum-level attributes:

#### SSZ

- `#[ssz(enum_behaviour = "union")]`

- matches the spec used for the merge

- `#[ssz(enum_behaviour = "transparent")]`

- maintains existing functionality

- not supported for `Decode` (never was)

#### TreeHash

- `#[tree_hash(enum_behaviour = "union")]`

- matches the spec used for the merge

- `#[tree_hash(enum_behaviour = "transparent")]`

- maintains existing functionality

This means that we can maintain the existing transparent behaviour, but all existing users will get a compile-time error until they explicitly opt-in to being transparent.
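For readers who haven't looked at the spec, the "union" wire format is a one-byte selector followed by the serialized value of the selected variant. The sketch below hand-rolls that layout with hypothetical helper functions; it does not use the `ssz_derive` macros and is not their generated code.

```rust
/// Encode a union value: 1-byte selector, then the variant's own bytes.
/// With `#[ssz(enum_behaviour = "union")]` the selector is the variant's
/// position in the enum; with "transparent" no selector is written at all.
fn encode_union(selector: u8, value_bytes: &[u8]) -> Vec<u8> {
    let mut out = Vec::with_capacity(1 + value_bytes.len());
    out.push(selector);
    out.extend_from_slice(value_bytes);
    out
}

/// Split encoded bytes back into (selector, value bytes).
/// Returns `None` for empty input, which has no selector.
fn decode_union(bytes: &[u8]) -> Option<(u8, &[u8])> {
    bytes.split_first().map(|(selector, rest)| (*selector, rest))
}
```

This also shows why the two behaviours conflict on decode: a transparent encoding carries no selector, so there is nothing to tell the decoder which variant to build.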

### Legacy Option Encoding

Before this PR, we already had a union-esque encoding for `Option<T>`. However, this was with the *old* SSZ spec where the union selector was 4 bytes. During merge specification, the spec was changed to use 1 byte for the selector.

Whilst the 4-byte `Option` encoding was never used in the spec, we used it in our database. Writing a migrate script for all occurrences of `Option` in the database would be painful, especially since it's used in the `CommitteeCache`. To avoid the migrate script, I added a serde-esque `#[ssz(with = "module")]` field-level attribute to `ssz_derive` so that we can opt into the 4-byte encoding on a field-by-field basis.

The `ssz::legacy::four_byte_impl!` macro allows a one-liner to define the module required for the `#[ssz(with = "module")]` for some `Option<T> where T: Encode + Decode`.
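A hand-written sketch of what the legacy 4-byte layout looks like for an `Option<u64>`. The function names are hypothetical, and the selector values (`0` for `None`, `1` for `Some`, little-endian) are my assumption of the old behaviour rather than something copied from the macro output.

```rust
use std::convert::TryInto;

/// Legacy layout (assumed): 4-byte little-endian selector, then the value.
fn encode_option_legacy(value: Option<u64>) -> Vec<u8> {
    match value {
        None => 0u32.to_le_bytes().to_vec(),
        Some(v) => {
            let mut out = 1u32.to_le_bytes().to_vec();
            out.extend_from_slice(&v.to_le_bytes());
            out
        }
    }
}

/// Outer `None` means the bytes are malformed; inner `Option` is the value.
fn decode_option_legacy(bytes: &[u8]) -> Option<Option<u64>> {
    if bytes.len() < 4 {
        return None;
    }
    let (selector, body) = bytes.split_at(4);
    match u32::from_le_bytes(selector.try_into().ok()?) {
        0 if body.is_empty() => Some(None),
        1 => Some(Some(u64::from_le_bytes(body.try_into().ok()?))),
        _ => None,
    }
}
```

Under the new spec the same value would start with a single selector byte, so the two encodings are not interchangeable on disk.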

Notably, **I have removed `Encode` and `Decode` impls for `Option`**. I've done this to force a break on downstream users. Like I mentioned, `Option` isn't used in the spec so I don't think it'll be *that* annoying. I think it's nicer than quietly having two different union implementations or quietly breaking the existing `Option` impl.

### Crate Publish Ordering

I've modified the order in which CI publishes crates to ensure that we don't publish a crate without ensuring we already published a crate that it depends upon.

## TODO

- [ ] Queue a follow-up `[patch]`-removing PR.

## Issue Addressed

Closes #1891

Closes #1784

## Proposed Changes

Implement checkpoint sync for Lighthouse, enabling it to start from a weak subjectivity checkpoint.

## Additional Info

- [x] Return unavailable status for out-of-range blocks requested by peers (#2561)

- [x] Implement sync daemon for fetching historical blocks (#2561)

- [x] Verify chain hashes (either in `historical_blocks.rs` or the calling module)

- [x] Consistency check for initial block + state

- [x] Fetch the initial state and block from a beacon node HTTP endpoint

- [x] Don't crash fetching beacon states by slot from the API

- [x] Background service for state reconstruction, triggered by CLI flag or API call.

Considered out of scope for this PR:

- Drop the requirement to provide the `--checkpoint-block` (this would require some pretty heavy refactoring of block verification)

Co-authored-by: Diva M <divma@protonmail.com>

Sigma Prime is transitioning our mainnet bootnodes and this PR represents the transition of our bootnodes.

After a few releases, old boot-nodes will be deprecated.

[EIP-3030]: https://eips.ethereum.org/EIPS/eip-3030

[Web3Signer]: https://consensys.github.io/web3signer/web3signer-eth2.html

## Issue Addressed

Resolves #2498

## Proposed Changes

Allows the VC to call out to a [Web3Signer] remote signer to obtain signatures.

## Additional Info

### Making Signing Functions `async`

To allow remote signing, I needed to make all the signing functions `async`. This caused a bit of noise where I had to convert iterators into `for` loops.

In `duties_service.rs` there was a particularly tricky case where we couldn't hold a write-lock across an `await`, so I had to first take a read-lock, then grab a write-lock.
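The read-then-write pattern looks roughly like the sketch below. It is synchronous and uses `std::sync::RwLock` so that it stands alone; `slow_sign` is a hypothetical placeholder for the point where the real code awaits the signer, and the map layout is invented for illustration.

```rust
use std::collections::HashMap;
use std::sync::RwLock;

/// Placeholder for the slow signing step (where the real code would `.await`).
fn slow_sign(input: u64) -> u64 {
    input.wrapping_mul(31)
}

fn fill_signatures(duties: &RwLock<HashMap<u64, Option<u64>>>) {
    // 1. Take the read lock only long enough to copy out what needs signing.
    let unsigned: Vec<u64> = {
        let guard = duties.read().unwrap();
        let keys: Vec<u64> = guard
            .iter()
            .filter(|(_, sig)| sig.is_none())
            .map(|(key, _)| *key)
            .collect();
        keys
    }; // read guard dropped here

    // 2. Do the slow work with no lock held.
    let signed: Vec<(u64, u64)> = unsigned.into_iter().map(|k| (k, slow_sign(k))).collect();

    // 3. Take the write lock briefly to store the results. The map may have
    //    changed in between, so only fill entries that are still empty.
    let mut guard = duties.write().unwrap();
    for (key, sig) in signed {
        if let Some(slot) = guard.get_mut(&key) {
            if slot.is_none() {
                *slot = Some(sig);
            }
        }
    }
}
```

The cost of this shape is that state can change between steps 1 and 3, hence the re-check before writing.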

### Move Signing from Core Executor

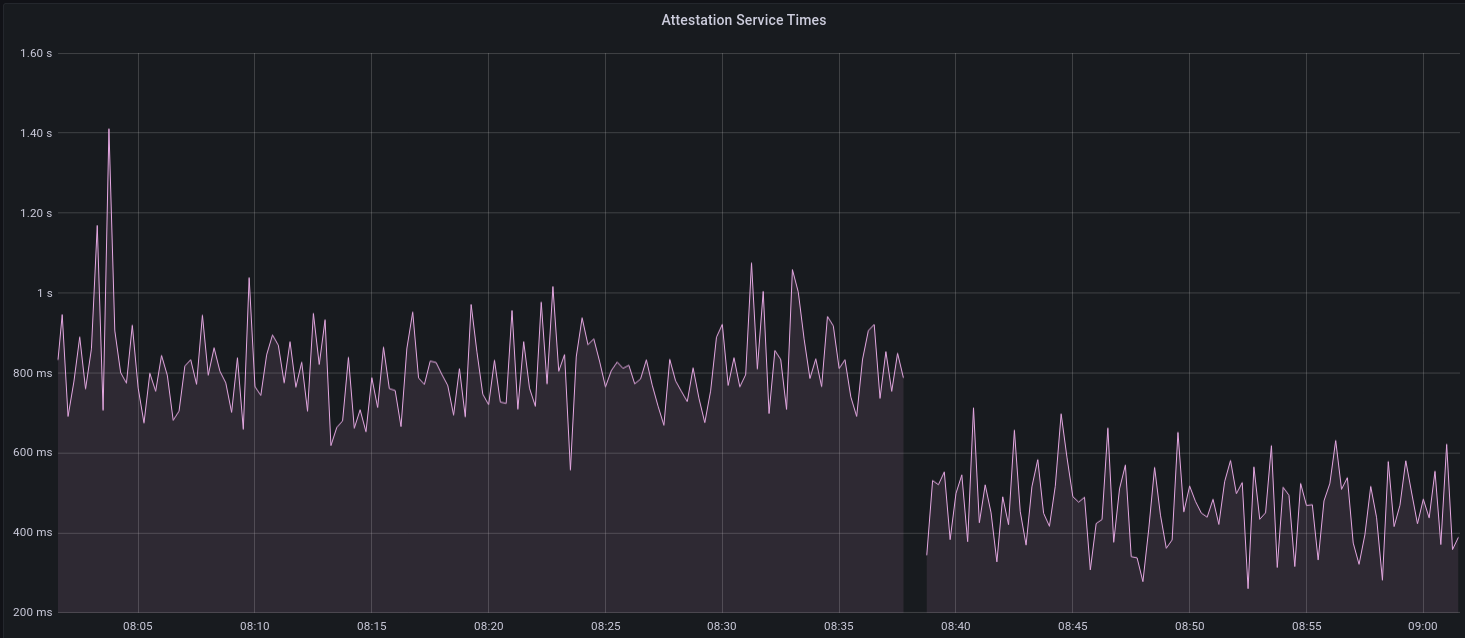

Whilst implementing this feature, I noticed that we signing was happening on the core tokio executor. I suspect this was causing the executor to temporarily lock and occasionally trigger some HTTP timeouts (and potentially SQL pool timeouts, but I can't verify this). Since moving all signing into blocking tokio tasks, I noticed a distinct drop in the "atttestations_http_get" metric on a Prater node:

I think this graph indicates that freeing the core executor allows the VC to operate more smoothly.

### Refactor TaskExecutor

I noticed that the `TaskExecutor::spawn_blocking_handle` function would fail to spawn tasks if it were unable to obtain handles to some metrics (this can happen if the same metric is defined twice). It seemed that a more sensible approach would be to keep spawning tasks, but without metrics. To that end, I refactored the function so that it would still function without metrics. There are no other changes made.

## TODO

- [x] Restructure to support multiple signing methods.

- [x] Add calls to remote signer from VC.

- [x] Documentation

- [x] Test all endpoints

- [x] Test HTTPS certificate

- [x] Allow adding remote signer validators via the API

- [x] Add Altair support via [21.8.1-rc1](https://github.com/ConsenSys/web3signer/releases/tag/21.8.1-rc1)

- [x] Create issue to start using latest version of web3signer. (See #2570)

## Notes

- ~~Web3Signer doesn't yet support the Altair fork for Prater. See https://github.com/ConsenSys/web3signer/issues/423.~~

- ~~There is not yet a release of Web3Signer which supports Altair blocks. See https://github.com/ConsenSys/web3signer/issues/391.~~

## Proposed Changes

* Modify the `TaskExecutor` so that it spawns a "monitor" future for each future spawned by `spawn` or `spawn_blocking`. This monitor future joins the handle of the child future and shuts down the executor if it detects a panic.

* Enable backtraces by default by setting the environment variable `RUST_BACKTRACE`.

* Spawn the `ProductionBeaconNode` on the `TaskExecutor` so that if a panic occurs during start-up it will take down the whole process. Previously we were using a raw Tokio `spawn`, but I can't see any reason not to use the executor (perhaps someone else can).
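The monitor idea can be sketched with plain threads instead of Tokio tasks. `spawn_monitored` is a hypothetical name, and the `mpsc` channel stands in for the executor's shutdown sender; this is an analogy for the approach, not the `TaskExecutor` code.

```rust
use std::sync::mpsc::Sender;
use std::thread;

/// Spawns `task` on a child thread plus a monitor thread that joins the
/// child and sends a shutdown message if the child panicked.
/// Returns the monitor's handle.
fn spawn_monitored<F>(
    name: &'static str,
    shutdown_tx: Sender<String>,
    task: F,
) -> thread::JoinHandle<()>
where
    F: FnOnce() + Send + 'static,
{
    let child = thread::spawn(task);
    thread::spawn(move || {
        // `join` returns `Err` only if the child panicked.
        if child.join().is_err() {
            // Ignore send errors: the receiver may already be gone.
            let _ = shutdown_tx.send(format!("task {} panicked", name));
        }
    })
}
```

The monitor spends its whole life blocked in `join`, which mirrors the expectation that monitor futures stay parked until their child resolves.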

## Additional Info

I considered using [`std::panic::set_hook`](https://doc.rust-lang.org/std/panic/fn.set_hook.html) to instantiate a custom panic handler, however this doesn't allow us to send a shutdown signal because `Fn` functions can't move variables (i.e. the shutdown sender) out of their environment. This also prevents it from receiving a `Logger`. Hence I decided to leave the panic handler untouched, but with backtraces turned on by default.

I did a run through the code base with all the raw Tokio spawn functions disallowed by Clippy, and found only two instances where we bypass the `TaskExecutor`: the HTTP API and `InitializedValidators` in the VC. In both places we use `spawn_blocking` and handle the return value, so I figured that was OK for now.

In terms of performance I think the overhead should be minimal. The monitor tasks will just get parked by the executor until their child resolves.

I've checked that this covers Discv5, as the `TaskExecutor` gets injected into Discv5 here: f9bba92db3/beacon_node/src/lib.rs (L125-L126)

## Proposed Changes

This PR deletes all `remote_signer` code from Lighthouse, for the following reasons:

* The `remote_signer` code is unused, and we have no plans to use it now that we're moving to supporting the Web3Signer APIs: #2522

* It represents a significant maintenance burden. The HTTP API tests have been prone to platform-specific failures, and breakages due to dependency upgrades, e.g. #2400.

Although the code is deleted it remains in the Git history should we ever want to recover it. For ease of reference:

- The last commit containing remote signer code: 5a3bcd2904

- The last Lighthouse version: v1.5.1

## Issue Addressed

Resolves #2438

Resolves #2437

## Proposed Changes

Changes the permissions for validator client http server api token file and secret key to 600 from 644. Also changes the permission for logfiles generated using the `--logfile` cli option to 600.
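For reference, creating a file with mode 600 on Unix can be done along these lines. This is a Unix-only sketch with a hypothetical `write_secret` helper, not the helper used in this PR.

```rust
use std::fs::{self, OpenOptions};
use std::io::{self, Write};
use std::os::unix::fs::{OpenOptionsExt, PermissionsExt};
use std::path::Path;

/// Writes `contents` to `path` so that only the owner can read or write it.
fn write_secret(path: &Path, contents: &[u8]) -> io::Result<()> {
    let mut file = OpenOptions::new()
        .write(true)
        .create(true)
        .truncate(true)
        // Applied only when the file is newly created.
        .mode(0o600)
        .open(path)?;
    // Also tighten permissions if the file already existed with a wider mode.
    file.set_permissions(fs::Permissions::from_mode(0o600))?;
    file.write_all(contents)
}
```

Setting the mode at creation time avoids a window in which the file exists with wider permissions.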

Logs the path to the api token instead of the actual api token. Updates docs to reflect the change.

## Issue Addressed

Related to: #2259

Made an attempt at all the necessary updates here to publish the crates to crates.io. I incremented the minor versions on all the crates that have been previously published. We still might run into some issues as we try to publish because I'm not able to test this out but I think it's a good starting point.

## Proposed Changes

- Add description and license to `ssz_types` and `serde_util`

- rename `serde_util` to `eth2_serde_util`

- increment minor versions

- remove path dependencies

- remove patch dependencies

## Additional Info

Crates published:

- [x] `tree_hash` -- need to publish `tree_hash_derive` and `eth2_hashing` first

- [x] `eth2_ssz_types` -- need to publish `eth2_serde_util` first

- [x] `tree_hash_derive`

- [x] `eth2_ssz`

- [x] `eth2_ssz_derive`

- [x] `eth2_serde_util`

- [x] `eth2_hashing`

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

N/A

## Proposed Changes

Add functionality in the validator monitor to provide sync committee related metrics for monitored validators.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

NA

## Proposed Changes

This PR adds some more fancy macro magic to make it easier to add a new built-in (aka "baked-in") testnet config to the `lighthouse` binary.

Previously, a user needed to modify several files and repeat themselves several times. Now, they only need to add a single definition in the `eth2_config` crate. No repetition 🎉

## Issue Addressed

Resolves #2487

## Proposed Changes

Logs a message once in every invocation of `Eth1Service::update` method if the primary endpoint is unavailable for some reason.

e.g.

```log
Aug 03 00:09:53.517 WARN Error connecting to eth1 node endpoint action: trying fallbacks, endpoint: http://localhost:8545/, service: eth1_rpc
Aug 03 00:09:56.959 INFO Fetched data from fallback fallback_number: 1, service: eth1_rpc
```

The main aim of this PR is to have an accompanying message to the "action: trying fallbacks" error message that is returned when checking the endpoint for liveness. This is mainly to indicate to the user that the fallback was live and reachable.

## Additional info

This PR is not meant to be a catch all for all cases where the primary endpoint failed. For instance, this won't log anything if the primary node was working fine during endpoint liveness checking and failed during deposit/block fetching. This is done intentionally to reduce number of logs while initial deposit/block sync and to avoid more complicated logic.

## Issue Addressed

https://github.com/eth2-clients/eth2-networks/pull/58

## Proposed Changes

Add the fork epoch for Altair on Prater: 36660

## Additional Info

This `config.yaml` is copied exactly from upstream. Large parts already matched due to our preemptive move to the new config style.

## Issue Addressed

NA

## Proposed Changes

- Version bump

- Increase queue sizes for aggregated attestations and re-queued attestations.

## Additional Info

NA

## Proposed Changes

* Consolidate Tokio versions: everything now uses the latest v1.10.0, no more `tokio-compat`.

* Many semver-compatible changes via `cargo update`. Notably this upgrades from the yanked v0.8.0 version of crossbeam-deque which is present in v1.5.0-rc.0

* Many semver incompatible upgrades via `cargo upgrades` and `cargo upgrade --workspace pkg_name`. Notable omissions:

- Prometheus, to be handled separately: https://github.com/sigp/lighthouse/issues/2485

- `rand`, `rand_xorshift`: the libsecp256k1 package requires 0.7.x, so we'll stick with that for now

- `ethereum-types` is pinned at 0.11.0 because that's what `web3` is using and it seems nice to have just a single version

## Additional Info

We still have two versions of `libp2p-core` due to `discv5` depending on the v0.29.0 release rather than `master`. AFAIK it should be OK to release in this state (cc @AgeManning )

## Issue Addressed

NA

## Proposed Changes

- Bump to `v1.5.0-rc.0`.

- Increase attestation reprocessing queue size (I saw this filling up on Prater).

- Reduce error log for full attn reprocessing queue to warn.

## TODO

- [x] Manual testing

- [x] Resolve https://github.com/sigp/lighthouse/pull/2493

- [x] Include https://github.com/sigp/lighthouse/pull/2501

## Issue Addressed

- Resolves #2457

- Resolves #2443

## Proposed Changes

Target the (presently unreleased) head of `libp2p/rust-libp2p:master` in order to obtain the fix from https://github.com/libp2p/rust-libp2p/pull/2175.

Additionally:

- `libsecp256k1` needed to be upgraded to satisfy the new version of `libp2p`.

- There were also a handful of minor changes to `eth2_libp2p` to suit some interface changes.

- Two `cargo audit --ignore` flags were removed due to libp2p upgrades.

## Additional Info

NA

## Issue Addressed

NA

## Proposed Changes

Adds the Altair fork schedule for Pyrmont, as per https://github.com/eth2-clients/eth2-networks/pull/56 (credits to @ajsutton).

## Additional Info

- I've marked this as `do-not-merge` until the upstream PR is merged.

- I've tagged this for `v1.5.0` because I expect the upstream PR to be merged soon, and I think it would be great if v1.5.0 shipped fully ready for the Pyrmont fork.

## Proposed Changes

* Implement the validator client and HTTP API changes necessary to support Altair

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

Resolves #2069

## Proposed Changes

- Adds a `--doppelganger-detection` flag

- Adds a `lighthouse/seen_validators` endpoint, which will make it so the Lighthouse VC is not interoperable with other client beacon nodes if the `--doppelganger-detection` flag is used, but hopefully this will become standardized. Relevant Eth2 API repo issue: https://github.com/ethereum/eth2.0-APIs/issues/64

- If the `--doppelganger-detection` flag is used, the VC will wait until the beacon node is synced, and then wait an additional 2 epochs. The reason for this is to make sure the beacon node is able to subscribe to the subnets our validators should be attesting on. I think an alternative would be to have the beacon node subscribe to all subnets for 2+ epochs on startup by default.

## Additional Info

I'd like to add tests and would appreciate feedback.

TODO: handle validators started via the API, potentially make this default behavior

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

N/A

## Proposed Changes

- Removing a bunch of unnecessary references

- Updated `Error::VariantError` to `Error::Variant`

- There were additional enum variant lints that I ignored, because I thought our variant names were fine

- removed `MonitoredValidator`'s `pubkey` field, because I couldn't find it used anywhere. It looks like we just use the string version of the pubkey (the `id` field) if there is no index

## Additional Info

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

NA

## Proposed Changes

This PR addresses two things:

1. Allows the `ValidatorMonitor` to work with Altair states.

1. Optimizes `altair::process_epoch` (see [code](https://github.com/paulhauner/lighthouse/blob/participation-cache/consensus/state_processing/src/per_epoch_processing/altair/participation_cache.rs) for description)

## Breaking Changes

The breaking changes in this PR revolve around one premise:

_After the Altair fork, it's no longer possible (given only a `BeaconState`) to identify if a validator had *any* attestation included during some epoch. The best we can do is see if that validator made the "timely" source/target/head flags._

Whilst this seems annoying, it's not actually too bad. Finalization is based upon "timely target" attestations, so that's really the most important thing. Although there's *some* value in knowing if a validator had *any* attestation included, it's far more important to know about "timely target" participation, since this is what affects finality and justification.

For simplicity and consistency, I've also removed the ability to determine if *any* attestation was included from metrics and API endpoints. Now, all Altair and non-Altair states will simply report on the head/target attestations.

The following section details where we've removed fields and provides replacement values.

### Breaking Changes: Prometheus Metrics

Some participation metrics have been removed and replaced. Some were removed since they are no longer relevant to Altair (e.g., total attesting balance) and others replaced with gwei values instead of pre-computed values. This provides more flexibility at display-time (e.g., Grafana).

The following metrics were added as replacements:

- `beacon_participation_prev_epoch_head_attesting_gwei_total`

- `beacon_participation_prev_epoch_target_attesting_gwei_total`

- `beacon_participation_prev_epoch_source_attesting_gwei_total`

- `beacon_participation_prev_epoch_active_gwei_total`

The following metrics were removed:

- `beacon_participation_prev_epoch_attester`

- instead use `beacon_participation_prev_epoch_source_attesting_gwei_total / beacon_participation_prev_epoch_active_gwei_total`.

- `beacon_participation_prev_epoch_target_attester`

- instead use `beacon_participation_prev_epoch_target_attesting_gwei_total / beacon_participation_prev_epoch_active_gwei_total`.

- `beacon_participation_prev_epoch_head_attester`

- instead use `beacon_participation_prev_epoch_head_attesting_gwei_total / beacon_participation_prev_epoch_active_gwei_total`.

The `beacon_participation_prev_epoch_attester` endpoint has been removed. Users should instead use the pre-existing `beacon_participation_prev_epoch_target_attester`.
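The replacement ratios above are a plain division of two gwei totals. In Rust terms, with a hypothetical helper (dashboards would normally do this in the query language instead):

```rust
/// Fraction of active balance that attested, or `None` when there is no
/// active balance (avoids dividing by zero).
fn participation_ratio(attesting_gwei: u64, active_gwei: u64) -> Option<f64> {
    if active_gwei == 0 {
        None
    } else {
        Some(attesting_gwei as f64 / active_gwei as f64)
    }
}
```

For example, a target attesting total of 75 gwei over an active total of 100 gwei yields 0.75.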

### Breaking Changes: HTTP API

The `/lighthouse/validator_inclusion/{epoch}/global` endpoint loses the following fields:

- `current_epoch_attesting_gwei` (use `current_epoch_target_attesting_gwei` instead)

- `previous_epoch_attesting_gwei` (use `previous_epoch_target_attesting_gwei` instead)

The `/lighthouse/validator_inclusion/{epoch}/{validator_id}` endpoint loses or renames the following fields:

- `is_current_epoch_attester` (use `is_current_epoch_target_attester` instead)

- `is_previous_epoch_attester` (use `is_previous_epoch_target_attester` instead)

- `is_active_in_current_epoch` becomes `is_active_unslashed_in_current_epoch`.

- `is_active_in_previous_epoch` becomes `is_active_unslashed_in_previous_epoch`.

## Additional Info

NA

## TODO

- [x] Deal with total balances

- [x] Update validator_inclusion API

- [ ] Ensure `beacon_participation_prev_epoch_target_attester` and `beacon_participation_prev_epoch_head_attester` work before Altair

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

Resolves #2313

## Proposed Changes

Provide `BeaconNodeHttpClient` with a dedicated `Timeouts` struct.

This will allow granular adjustment of the timeout duration for different calls made from the VC to the BN. Each timeout can be either a constant value or a ratio of the slot duration.

Improve timeout performance by using these adjusted timeout durations only when a fallback endpoint is available.

Add a CLI flag called `use-long-timeouts` to revert to the old behavior.
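
To make the idea concrete, here is a minimal sketch of per-call timeouts that are either constant or derived from the slot duration. All names (`TimeoutSpec`, the `attestation` and `proposal` fields, `build_timeouts`) and the chosen fractions are assumptions for illustration; they are not taken from the actual `Timeouts` struct.

```rust
use std::time::Duration;

/// Hypothetical timeout specification: a fixed value or a fraction of the slot.
#[derive(Clone, Copy, Debug, PartialEq)]
enum TimeoutSpec {
    Constant(Duration),
    /// The slot duration divided by this quotient (e.g. 4 means a quarter slot).
    SlotFraction(u32),
}

impl TimeoutSpec {
    fn resolve(self, slot_duration: Duration) -> Duration {
        match self {
            TimeoutSpec::Constant(d) => d,
            // `max(1)` guards against dividing by zero.
            TimeoutSpec::SlotFraction(q) => slot_duration / q.max(1),
        }
    }
}

/// Hypothetical per-call timeouts; the field names are illustrative only.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Timeouts {
    attestation: Duration,
    proposal: Duration,
}

/// Use the shorter timeouts only when there is a fallback node to retry
/// against; otherwise (or when long timeouts are requested) wait a full slot.
fn build_timeouts(slot_duration: Duration, has_fallback: bool, use_long_timeouts: bool) -> Timeouts {
    if use_long_timeouts || !has_fallback {
        let full = TimeoutSpec::Constant(slot_duration).resolve(slot_duration);
        Timeouts { attestation: full, proposal: full }
    } else {
        Timeouts {
            attestation: TimeoutSpec::SlotFraction(4).resolve(slot_duration),
            proposal: TimeoutSpec::SlotFraction(2).resolve(slot_duration),
        }
    }
}
```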

## Additional Info

Additionally, set the default `BeaconNodeHttpClient` timeouts to be the slot duration of the network, rather than a constant 12 seconds. This will allow it to adjust to different network specifications.

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Proposed Changes

Implement the consensus changes necessary for the upcoming Altair hard fork.

## Additional Info

This is quite a heavy refactor, with pivotal types like the `BeaconState` and `BeaconBlock` changing from structs to enums. This ripples through the whole codebase with field accesses changing to methods, e.g. `state.slot` => `state.slot()`.
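
The shape of that change can be sketched as follows. The variant structs and their fields here are heavily simplified assumptions, not the real type definitions; the point is only why a field access becomes a method call once the type is an enum.

```rust
/// Simplified stand-ins for the per-fork state structs (illustrative only).
struct BeaconStateBase {
    slot: u64,
}

struct BeaconStateAltair {
    slot: u64,
    inactivity_scores: Vec<u64>,
}

/// Once the state is an enum, fields can no longer be accessed directly.
enum BeaconState {
    Base(BeaconStateBase),
    Altair(BeaconStateAltair),
}

impl BeaconState {
    /// Field common to every fork: `state.slot` becomes `state.slot()`.
    fn slot(&self) -> u64 {
        match self {
            BeaconState::Base(s) => s.slot,
            BeaconState::Altair(s) => s.slot,
        }
    }

    /// Field that only exists from Altair onwards: callers must handle absence.
    fn inactivity_scores(&self) -> Option<&[u64]> {
        match self {
            BeaconState::Base(_) => None,
            BeaconState::Altair(s) => Some(&s.inactivity_scores),
        }
    }
}
```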

Co-authored-by: realbigsean <seananderson33@gmail.com>

This updates some older dependencies to address a few cargo audit warnings.

The majority of warnings come from network dependencies which will be addressed in #2389.

This PR contains some minor dep updates that are not network related.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

Closes #1661

## Proposed Changes

Add a dummy package called `target_check` which gets compiled early in the build and fails if the target is 32-bit.

## Additional Info

You can test the efficacy of this check with:

```
cross build --release --manifest-path lighthouse/Cargo.toml --target i686-unknown-linux-gnu
```

In which case this compilation error is shown:

```
error: Lighthouse requires a 64-bit CPU and operating system
  --> common/target_check/src/lib.rs:8:1
   |
8  | / assert_cfg!(
9  | |     target_pointer_width = "64",
10 | |     "Lighthouse requires a 64-bit CPU and operating system",
11 | | );
   | |__^
```
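
For readers unfamiliar with this kind of compile-time check, the same effect can be sketched with the standard library alone. This is an alternative to the `assert_cfg!` macro shown in the error above, not the code from this PR; `pointer_width_bits` is a hypothetical helper added only so the sketch has something to call.

```rust
// Refuse to compile on anything other than a 64-bit target.
// This uses the built-in `compile_error!` macro rather than `assert_cfg!`.
#[cfg(not(target_pointer_width = "64"))]
compile_error!("Lighthouse requires a 64-bit CPU and operating system");

/// Pointer width of the compilation target, in bits.
fn pointer_width_bits() -> u32 {
    usize::BITS
}
```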

## Issue Addressed

`make lint` failing on Rust 1.53.0.

## Proposed Changes

1.53.0 updates

## Additional Info

I haven't figured out why yet, but we were now hitting the recursion limit in a few crates, so I had to add `#![recursion_limit = "256"]` in a few places.

Co-authored-by: realbigsean <seananderson33@gmail.com>

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

NA

## Proposed Changes

I've noticed some of the SigP Prater nodes struggling on v1.4.0-rc.0. I suspect this is due to the changes in #2296. Specifically, the trade-off which lowered the memory footprint whilst increasing runtime on some functions.

Presently, this PR is documenting my testing on Prater.

## Additional Info

NA

## Issue Addressed

NA

## Proposed Changes

Bump versions.

## Additional Info

This is not exactly the v1.4.0 release described in [Lighthouse Update #36](https://lighthouse.sigmaprime.io/update-36.html).

Whilst it contains:

- Beta Windows support

- A reduction in Eth1 queries

- A reduction in memory footprint

It does not contain:

- Altair

- Doppelganger Protection

- The remote signer

We have decided to release some features early. This is primarily due to the desire to allow users to benefit from the memory saving improvements as soon as possible.

## TODO

- [x] Wait for #2340, #2356 and #2376 to merge and then rebase on `unstable`.

- [x] Ensure discovery issues are fixed (see #2388)

- [x] Ensure https://github.com/sigp/lighthouse/pull/2382 is merged/removed.

- [x] Ensure https://github.com/sigp/lighthouse/pull/2383 is merged/removed.

- [x] Ensure https://github.com/sigp/lighthouse/pull/2384 is merged/removed.

- [ ] Double-check eth1 cache is carried between boots

## Issue Addressed

NA

## Proposed Changes

Reverts #2345 in the interests of getting v1.4.0 out this week. Once we have released that, we can go back to testing this again.

## Additional Info

NA

## Issue Addressed

#2282

## Proposed Changes

Reduce the outbound requests made to eth1 endpoints by caching the results from `eth_chainId` and `net_version`.

Further reduce the overall request count by increasing `auto_update_interval_millis` from `7_000` (7 seconds) to `60_000` (1 minute).

This will result in a reduction from ~2000 requests per hour to 360 requests per hour (during normal operation). A reduction of 82%.

## Additional Info

If an endpoint fails, its state is dropped from the cache and the `eth_chainId` and `net_version` calls will be made for that endpoint again during the regular update cycle (once per minute) until it is back online.
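
A minimal sketch of this cache-and-invalidate behaviour is shown below. The types `EndpointIds` and `EndpointCache`, and the closure-based `fetch` parameter standing in for the `eth_chainId` and `net_version` requests, are assumptions for illustration rather than the eth1 crate's actual API.

```rust
use std::collections::HashMap;

/// Values that rarely change for a given endpoint (illustrative struct).
#[derive(Clone, Copy, Debug, PartialEq)]
struct EndpointIds {
    chain_id: u64,
    network_id: u64,
}

/// Hypothetical per-endpoint cache keyed by endpoint URL.
#[derive(Default)]
struct EndpointCache {
    entries: HashMap<String, EndpointIds>,
}

impl EndpointCache {
    /// Return the cached ids, calling `fetch` (which stands in for the two
    /// RPC requests) only when nothing is cached for this endpoint.
    fn get_or_fetch<F>(&mut self, endpoint: &str, fetch: F) -> Result<EndpointIds, String>
    where
        F: FnOnce() -> Result<EndpointIds, String>,
    {
        if let Some(ids) = self.entries.get(endpoint) {
            return Ok(*ids);
        }
        let ids = fetch()?;
        self.entries.insert(endpoint.to_string(), ids);
        Ok(ids)
    }

    /// Drop the cached state so the next update cycle queries the endpoint again.
    fn mark_failed(&mut self, endpoint: &str) {
        self.entries.remove(endpoint);
    }
}
```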

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

NA

## Proposed Changes

Modify the configuration of [GNU malloc](https://www.gnu.org/software/libc/manual/html_node/The-GNU-Allocator.html) to reduce memory footprint.

- Set `M_ARENA_MAX` to 4.

- This reduces memory fragmentation at the cost of contention between threads.

- Set `M_MMAP_THRESHOLD` to 2mb

- This means that any allocation >= 2mb is allocated via an anonymous mmap, instead of on the heap/arena. This reduces memory fragmentation since we don't need to keep growing the heap to find big contiguous slabs of free memory.

- ~~Run `malloc_trim` every 60 seconds.~~

- ~~This shaves unused memory from the top of the heap, preventing the heap from constantly growing.~~

- Removed, see: https://github.com/sigp/lighthouse/pull/2299#issuecomment-825322646

*Note: this only provides memory savings on the Linux (glibc) platform.*
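
For reference, the two settings above map onto glibc's `mallopt` call. The sketch below declares the C function directly rather than depending on a bindings crate, and it is not the code from this PR. It assumes "2mb" means 2 MiB; the parameter numbers are those defined in glibc's `<malloc.h>`; the function name `configure_glibc_malloc` is hypothetical. On non-glibc targets it compiles to a no-op.

```rust
use std::os::raw::c_int;

// Parameter numbers as defined in glibc's <malloc.h>.
#[allow(dead_code)]
const M_MMAP_THRESHOLD: c_int = -3;
#[allow(dead_code)]
const M_ARENA_MAX: c_int = -8;

#[cfg(all(target_os = "linux", target_env = "gnu"))]
unsafe extern "C" {
    fn mallopt(param: c_int, value: c_int) -> c_int;
}

/// Apply the two tunables described above. `mallopt` returns 1 on success.
#[cfg(all(target_os = "linux", target_env = "gnu"))]
fn configure_glibc_malloc() -> Result<(), String> {
    // Safety: `mallopt` only adjusts allocator tunables.
    unsafe {
        if mallopt(M_ARENA_MAX, 4) != 1 {
            return Err("failed to set M_ARENA_MAX".to_string());
        }
        // Assumption: "2mb" is interpreted as 2 MiB.
        if mallopt(M_MMAP_THRESHOLD, 2 * 1024 * 1024) != 1 {
            return Err("failed to set M_MMAP_THRESHOLD".to_string());
        }
    }
    Ok(())
}

/// On platforms without glibc there is nothing to configure.
#[cfg(not(all(target_os = "linux", target_env = "gnu")))]
fn configure_glibc_malloc() -> Result<(), String> {
    Ok(())
}
```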

## Additional Info

I'm going to close #2288 in favor of this for the following reasons:

- I've managed to get the memory footprint *smaller* here than with jemalloc.

- This PR seems to be less of a dramatic change than bringing in the jemalloc dep.

- The changes in this PR are strictly runtime changes, so we can create CLI flags which disable them completely. Since this change is wide-reaching and complex, it's nice to have an easy "escape hatch" if there are undesired consequences.

## TODO

- [x] Allow configuration via CLI flags

- [x] Test on Mac

- [x] Test on RasPi.

- [x] Determine if GNU malloc is present?

- I'm not quite sure how to detect glibc. This issue suggests we can't really: https://github.com/rust-lang/rust/issues/33244

- [x] Make a clear argument regarding the effect of this on CPU utilization.

- [x] Test with higher `M_ARENA_MAX` values.

- [x] Test with longer trim intervals

- [x] Add some stats about memory savings

- [x] Remove `malloc_trim` calls & code

## Issue Addressed

Windows incompatibility.

## Proposed Changes

On Windows, Lighthouse needs to default to STDIN as a tty doesn't exist. Windows also uses ACLs for file permissions, so to mirror `chmod 600` we remove every entry in a file's ACL and add only a single SID that is an alias for the file owner.

Beyond that, several unit tests were changed because Windows has slightly different error messages, as well as frustrating nuances around killing a process :/

## Additional Info

Tested on my Windows VM and it appears to work; also compiled and tested on Linux with these changes. Permissions look correct on both platforms now. Just waiting for my validator to activate on Prater so I can test running a full validator client on Windows.

Co-authored-by: ethDreamer <37123614+ethDreamer@users.noreply.github.com>

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Issue Addressed

N/A

## Proposed Changes

Add unit tests for the various CLI flags associated with the beacon node and validator client. These changes require the addition of two new flags: `dump-config` and `immediate-shutdown`.

## Additional Info

Both `dump-config` and `immediate-shutdown` are marked as hidden since they should only be used in testing and other advanced use cases.

**Note:** This requires changing `main.rs` so that the flags can adjust the program behavior as necessary.

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

#2276

## Proposed Changes

Add the `SensitiveUrl` struct which wraps `Url` and implements custom `Display` and `Debug` traits to redact user secrets from being logged in eth1 endpoints, beacon node endpoints and metrics.
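
The redaction idea can be sketched without any dependencies. Note the simplification: the sketch below stores plain strings and uses naive string splitting instead of wrapping `Url`, and the choice to keep only the scheme and host is an assumption about what counts as non-secret.

```rust
use std::fmt;

/// Simplified stand-in for the struct described above.
struct SensitiveUrl {
    full: String,
    redacted: String,
}

impl SensitiveUrl {
    fn new(full: &str) -> Self {
        SensitiveUrl { full: full.to_string(), redacted: redact(full) }
    }

    /// Explicit accessor for code that really needs the secret-bearing URL.
    fn full(&self) -> &str {
        &self.full
    }
}

/// Keep only `scheme://host[:port]/`; drop userinfo, path, query and fragment.
fn redact(url: &str) -> String {
    let (scheme, rest) = match url.split_once("://") {
        Some(parts) => parts,
        None => return "<unparseable url>".to_string(),
    };
    // The authority ends at the first '/', '?' or '#'.
    let authority = rest
        .split(|c: char| c == '/' || c == '?' || c == '#')
        .next()
        .unwrap_or("");
    // Anything before the last '@' is userinfo (possibly a password).
    let host = authority.rsplit('@').next().unwrap_or(authority);
    format!("{}://{}/", scheme, host)
}

// Both formatting traits print the redacted form, so logging is safe by default.
impl fmt::Display for SensitiveUrl {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        f.write_str(&self.redacted)
    }
}

impl fmt::Debug for SensitiveUrl {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        f.write_str(&self.redacted)
    }
}
```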

## Additional Info

This also includes a small rewrite of the eth1 crate to make requests using `Url` instead of `&str`.

Some error messages have also been changed to remove `Url` data.

## Issue Addressed

None

## Proposed Changes

Adds support for downloading the deposit contract from a different location by setting the environment variables `LIGHTHOUSE_DEPOSIT_CONTRACT_SPEC_URL` and `LIGHTHOUSE_DEPOSIT_CONTRACT_TESTNET_URL`.

It also adds support for fetching the content from a local `file://` URL.

This allows the files to be pre-fetched so that the build can run in an environment without network access.
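
The override logic can be sketched roughly as follows. The `Source` enum and both function names are hypothetical, and the build script's real structure is not shown in this description; only the environment-variable names above come from the PR.

```rust
use std::env;
use std::path::PathBuf;

/// Where the contract file should be loaded from (illustrative type).
#[derive(Debug, PartialEq)]
enum Source {
    LocalFile(PathBuf),
    Remote(String),
}

/// Pick the override if one is given, otherwise the default URL, and treat
/// a `file://` prefix as a local path.
fn resolve_source(override_url: Option<String>, default_url: &str) -> Source {
    let url = override_url.unwrap_or_else(|| default_url.to_string());
    if let Some(path) = url.strip_prefix("file://") {
        return Source::LocalFile(PathBuf::from(path));
    }
    Source::Remote(url)
}

/// Thin wrapper that reads the override from an environment variable.
fn resolve_source_from_env(env_key: &str, default_url: &str) -> Source {
    resolve_source(env::var(env_key).ok(), default_url)
}
```

With this shape, a packaging environment would set the variable to something like `file:///path/to/prefetched.json` (an illustrative path) and the build would never touch the network.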

## Additional Info

Being able to build without network access is required to package the application for https://nixos.org/. But I imagine it might be useful for other distributions too.

## Issue Addressed

NA

## Proposed Changes

Bump versions.

## Additional Info

This is a minor release (not patch) due to the very slight change introduced by #2291.

## Issue Addressed

NA

## Proposed Changes

- Adds a specific log and metric for when a block is enshrined as head with a delay that will cause bad attestations.

- We *technically* already expose this information, but it's a little tricky to determine during debugging. This makes it nice and explicit.

- Fixes a minor reporting bug with the validator monitor where it was expecting agg. attestations too early (at half-slot rather than two-thirds-slot).

## Additional Info

NA

## Issue Addressed

Closes #2274

## Proposed Changes

* Modify the `YamlConfig` to collect unknown fields into an `extra_fields` map, instead of failing hard.

* Log a debug message if there are extra fields returned to the VC from one of its BNs.

This restores Lighthouse's compatibility with Teku beacon nodes (and therefore Infura).

## Issue Addressed

#2224

## Proposed Changes

Add a `--password-file` option to the `lighthouse account validator import` command. The flag requires `--reuse-password` and will copy the password over to the `validator_definitions.yml` file. I used #2070 as a guide for validating the password as UTF-8 and stripping newlines.
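
The validation step can be sketched like this. The function name `parse_password` is hypothetical, and stripping only trailing `\n` and `\r` characters is an assumption about what "stripping newlines" means here.

```rust
/// Validate the raw file contents as UTF-8 and strip trailing newline
/// characters (assumed: only `\n` and `\r` at the end of the input).
fn parse_password(bytes: Vec<u8>) -> Result<String, String> {
    let text = String::from_utf8(bytes)
        .map_err(|e| format!("password is not valid UTF-8: {}", e))?;
    Ok(text
        .trim_end_matches(|c: char| c == '\n' || c == '\r')
        .to_string())
}
```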

## Additional Info

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

Resolves #1944

## Proposed Changes

Adds a "graffiti" key to the `validator_definitions.yml`. Setting the key will override anything passed through the validator `--graffiti` flag.

Returns an error if the value for the graffiti key is > 32 bytes instead of silently truncating.
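
A minimal sketch of the length check follows; the function name and error text are assumptions, and only the 32-byte limit comes from the description above.

```rust
const GRAFFITI_BYTES_LEN: usize = 32;

/// Convert a graffiti string into a zero-padded 32-byte array, returning an
/// error rather than truncating when the input is too long.
fn parse_graffiti(s: &str) -> Result<[u8; GRAFFITI_BYTES_LEN], String> {
    let bytes = s.as_bytes();
    if bytes.len() > GRAFFITI_BYTES_LEN {
        return Err(format!(
            "graffiti is {} bytes, maximum is {}",
            bytes.len(),
            GRAFFITI_BYTES_LEN
        ));
    }
    let mut out = [0u8; GRAFFITI_BYTES_LEN];
    out[..bytes.len()].copy_from_slice(bytes);
    Ok(out)
}
```

Note that the limit applies to bytes, not characters, so a string with multi-byte UTF-8 characters can exceed it with fewer than 32 characters.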

## Proposed Changes

When building the release binaries with Cross, Ubuntu 16.04 is used, which ships an old version of Git lacking support for `--exclude`. This PR changes `lighthouse_version` to use `--match` instead.

## Issue Addressed

NA

## Problem this PR addresses

There's an issue where Lighthouse is banning a lot of peers due to the following sequence of events:

1. Gossip block 0xabc arrives ~200ms early

- It is propagated across the network, with respect to [`MAXIMUM_GOSSIP_CLOCK_DISPARITY`](https://github.com/ethereum/eth2.0-specs/blob/v1.0.0/specs/phase0/p2p-interface.md#why-is-there-maximum_gossip_clock_disparity-when-validating-slot-ranges-of-messages-in-gossip-subnets).

- However, it is not imported to our database since the block is early.

2. Attestations for 0xabc arrive, but the block was not imported.

- The peer that sent the attestation is down-voted.

- Each unknown-block attestation causes a score loss of 1, the peer is banned at -100.

- When the peer is on an attestation subnet there can be hundreds of attestations, so the peer is banned quickly (before the missed block can be obtained via rpc).

## Potential solutions

I can think of three solutions to this:

1. Wait for attestation-queuing (#635) to arrive and solve this.

- Easy

- Not immediate fix.

- Whilst this would work, I don't think it's a perfect solution for this particular issue, rather (3) is better.

2. Allow importing blocks with a tolerance of `MAXIMUM_GOSSIP_CLOCK_DISPARITY`.

- Easy

- ~~I have implemented this, for now.~~

3. If a block is verified for gossip propagation (i.e., signature verified) and it's within `MAXIMUM_GOSSIP_CLOCK_DISPARITY`, then queue it to be processed at the start of the appropriate slot.

- More difficult

- Feels like the best solution, I will try to implement this.

**This PR takes approach (3).**
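
The decision behind approach (3) can be sketched as a pure function. The enum and function names are hypothetical, time is modelled as a `Duration` since an arbitrary epoch, and the 500 ms value is the one given in the phase 0 p2p spec linked above.

```rust
use std::time::Duration;

/// Value from the phase 0 p2p specification.
const MAXIMUM_GOSSIP_CLOCK_DISPARITY: Duration = Duration::from_millis(500);

/// What to do with a gossip-verified block (illustrative type).
#[derive(Debug, PartialEq)]
enum EarlyBlockAction {
    /// The block's slot has already started: import immediately.
    ImportNow,
    /// The block is slightly early: hold it for this long, then import.
    QueueFor(Duration),
    /// The block is too far in the future to be held.
    Reject,
}

/// `now` and `slot_start` are both measured from the same reference point.
fn classify_block(now: Duration, slot_start: Duration) -> EarlyBlockAction {
    match slot_start.checked_sub(now) {
        // `None` means `now` is already past the start of the slot.
        None => EarlyBlockAction::ImportNow,
        Some(wait) if wait.is_zero() => EarlyBlockAction::ImportNow,
        Some(wait) if wait <= MAXIMUM_GOSSIP_CLOCK_DISPARITY => EarlyBlockAction::QueueFor(wait),
        Some(_) => EarlyBlockAction::Reject,
    }
}
```

For the scenario described earlier, a block arriving about 200 ms before its slot would be queued for about 200 ms, so it is imported before most attestations for it arrive.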

## Changes included

- Implement the `block_delay_queue`, based upon a [`DelayQueue`](https://docs.rs/tokio-util/0.6.3/tokio_util/time/delay_queue/struct.DelayQueue.html) which can store blocks until it's time to import them.

- Add a new `DelayedImportBlock` variant to the `beacon_processor::WorkEvent` enum to handle this new event.

- In the `BeaconProcessor`, refactor a `tokio::select!` to a struct with an explicit `Stream` implementation. I experienced some issues with `tokio::select!` in the block delay queue and I also found it hard to debug. I think this explicit implementation is nicer and functionally equivalent (apart from the fact that `tokio::select!` randomly chooses futures to poll, whereas now we're deterministic).

- Add a testing framework to the `beacon_processor` module that tests this new block delay logic. I also tested a handful of other operations in the beacon processor (attns, slashings, exits) since it was super easy to copy-pasta the code from the `http_api` tester.

- To implement these tests I added the concept of an optional `work_journal_tx` to the `BeaconProcessor` which will spit out a log of events. I used this in the tests to ensure that things were happening as I expect.

- The tests are a little racy, but it's hard to avoid that when testing timing-based code. If we see CI failures I can revise. I haven't observed *any* failures due to races on my machine or on CI yet.

- To assist with testing I allowed for directly setting the time on the `ManualSlotClock`.

- I gave the `beacon_processor::Worker` a `Toolbox` for two reasons; (a) it avoids changing tons of function sigs when you want to pass a new object to the worker and (b) it seemed cute.

## Proposed Changes

Somehow since Lighthouse v1.1.3 the behaviour of `git-describe` has changed so that it includes the version tag, the number of commits since that tag, _and_ the commit. According to the docs this is how it should always have behaved?? Weird!

https://git-scm.com/docs/git-describe/2.30.1

Anyway, this led to `lighthouse_version` producing this monstrosity of a version string when building #2194:

```

Lighthouse/v1.1.3-v1.1.3-5-gac07

```

Observe it in the wild here: https://pyrmont.beaconcha.in/block/694880

Adding `--exclude="*"` prevents `git-describe` from trying to include the tag, and on that troublesome commit from #2194 it now produces the correct version string.

## Issue Addressed

N/A

## Proposed Changes

This is mostly a UX improvement.

Currently, when recursively finding keystores, we only ignore keystores with the same path. This leads to potential issues when copying datadirs (e.g. copying a datadir to a new SSD with more storage). After copying the datadir and starting the VC, we will discover the copied keystores as new keystores and add them to the definitions file, leading to duplicate entries.

This PR avoids duplicate keystores being discovered as new keystores by checking for duplicate pubkeys as well.
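
A rough sketch of the check follows, using a simplified `Keystore` type with a string pubkey. Both the type and the function name are assumptions; the real definitions file holds more information per entry.

```rust
use std::collections::HashSet;
use std::path::PathBuf;

/// Simplified keystore record (illustrative only).
#[derive(Clone, Debug, PartialEq)]
struct Keystore {
    path: PathBuf,
    pubkey: String,
}

/// Return only the discovered keystores whose path AND pubkey are both unseen.
fn new_keystores(known: &[Keystore], discovered: Vec<Keystore>) -> Vec<Keystore> {
    let mut paths: HashSet<PathBuf> = known.iter().map(|k| k.path.clone()).collect();
    let mut pubkeys: HashSet<String> = known.iter().map(|k| k.pubkey.clone()).collect();

    discovered
        .into_iter()
        .filter(|k| {
            if paths.contains(&k.path) || pubkeys.contains(&k.pubkey) {
                return false;
            }
            // Record accepted entries so duplicates within `discovered` are
            // also filtered out.
            paths.insert(k.path.clone());
            pubkeys.insert(k.pubkey.clone());
            true
        })
        .collect()
}
```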

## Issue Addressed

resolves #2129

resolves #2099

addresses some of #1712

unblocks #2076

unblocks #2153

## Proposed Changes

- Updates all the dependencies mentioned in #2129, except for web3. They haven't merged their tokio 1.0 update because they are waiting on some dependencies of their own. Since we only use web3 in tests, I think updating it in a separate issue is fine. If they are able to merge soon though, I can update in this PR.

- Updates `tokio_util` to 0.6.2 and `bytes` to 1.0.1.

- We haven't made a discv5 release since merging tokio 1.0 updates so I'm using a commit rather than release atm. **Edit:** I think we should merge an update of `tokio_util` to 0.6.2 into discv5 before this release because it has panic fixes in `DelayQueue` --> PR in discv5: https://github.com/sigp/discv5/pull/58

## Additional Info

tokio 1.0 changes that required some changes in lighthouse:

- `interval.next().await.is_some()` -> `interval.tick().await`

- `sleep` future is now `!Unpin` -> https://github.com/tokio-rs/tokio/issues/3028

- `try_recv` has been temporarily removed from `mpsc` -> https://github.com/tokio-rs/tokio/issues/3350

- stream features have moved to `tokio-stream` and `broadcast::Receiver::into_stream()` has been temporarily removed -> https://github.com/tokio-rs/tokio/issues/2870

- I've copied over the `BroadcastStream` wrapper from this PR, but can update to use `tokio-stream` once it's merged https://github.com/tokio-rs/tokio/pull/3384

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

NA

## Proposed Changes

Adds some metrics to track delays regarding:

- LH processing of blocks

- delays receiving blocks from other nodes.

## Additional Info

NA

## Issue Addressed

- Resolves #2064

## Proposed Changes

Adds a `ValidatorMonitor` struct which provides additional logging and Grafana metrics for specific validators.

Use `lighthouse bn --validator-monitor` to automatically enable monitoring for any validator that hits the [subnet subscription](https://ethereum.github.io/eth2.0-APIs/#/Validator/prepareBeaconCommitteeSubnet) HTTP API endpoint.

Also, use `lighthouse bn --validator-monitor-pubkeys` to supply a list of validators which will always be monitored.

See the new docs included in this PR for more info.

## TODO

- [x] Track validator balance, `slashed` status, etc.

- [x] ~~Register slashings in current epoch, not offense epoch~~

- [ ] Publish Grafana dashboard, update TODO link in docs

- [x] ~~#2130 is merged into this branch, resolve that~~

## Issue Addressed

NA

## Proposed Changes

Copied from #2083. Changes the config `milliseconds_per_slot` to `seconds_per_slot` to avoid errors when the slot duration is not a multiple of a second. To avoid deserializing old serialized data (with milliseconds instead of seconds), the `Serialize` and `Deserialize` derives were removed from the `Spec` struct (they aren't currently used anyway).

This PR replaces #2083 for the purpose of fixing a merge conflict without requiring the input of @blacktemplar.

## Additional Info

NA

Co-authored-by: blacktemplar <blacktemplar@a1.net>

## Issue Addressed

`test_dht_persistence` failing

## Proposed Changes

Bind the result of `NetworkService::start` to an underscore-prefixed variable rather than `_`. Binding to `_` was causing it to be dropped immediately.

This was failing 5/100 times before this update, but I haven't been able to get it to fail since.
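
The difference between the two bindings is easy to demonstrate in isolation. In the sketch below, `Service` is a hypothetical stand-in for whatever `NetworkService::start` returns; its `Drop` impl records when the value is destroyed.

```rust
use std::cell::Cell;
use std::rc::Rc;

/// Stand-in for a service handle whose destructor shuts the service down.
struct Service {
    stopped: Rc<Cell<bool>>,
}

impl Drop for Service {
    fn drop(&mut self) {
        self.stopped.set(true);
    }
}

fn start(stopped: &Rc<Cell<bool>>) -> Service {
    Service { stopped: Rc::clone(stopped) }
}

/// `let _ = ...` does not bind the value, so it is dropped at the end of the
/// statement and the service is already stopped on the next line.
fn stopped_after_wildcard() -> bool {
    let stopped = Rc::new(Cell::new(false));
    let _ = start(&stopped);
    stopped.get()
}

/// `let _service = ...` is a real binding, so the value lives until the end
/// of the enclosing scope.
fn stopped_after_named() -> bool {
    let stopped = Rc::new(Cell::new(false));
    let _service = start(&stopped);
    stopped.get()
}
```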

Co-authored-by: realbigsean <seananderson33@gmail.com>