## Issue Addressed

Fix `cargo audit` failures on `unstable`

Closes#2698

## Proposed Changes

The main culprit is `nix`, which is vulnerable for versions below v0.23.0. We can't get by with a straight-forward `cargo update` because `psutil` depends on an old version of `nix` (cf. https://github.com/rust-psutil/rust-psutil/pull/93). Hence I've temporarily forked `psutil` under the `sigp` org, where I've included the update to `nix` v0.23.0.

Additionally, I took the chance to update the `time` dependency to v0.3, which removed a bunch of stale deps including `stdweb` which is no longer maintained. Lighthouse only uses the `time` crate in the notifier to do some pretty printing, and so wasn't affected by any of the breaking changes in v0.3 ([changelog here](https://github.com/time-rs/time/blob/main/CHANGELOG.md#030-2021-07-30)).

## Proposed Changes

While investigating memory usage I noticed that the malloc metrics were going negative once they passed 2GiB. This is because the underlying `mallinfo` function returns a `i32`, and we were casting it straight to an `i64`, preserving the sign.

The long-term fix will be to move to `mallinfo2`, but it's still not yet widely available.

## Issue Addressed

Resolves#2563

Replacement for #2653 as I'm not able to reopen that PR after force pushing.

## Proposed Changes

Fixes all broken api links. Cherry picked changes in #2590 and updated a few more links.

Co-authored-by: Mason Stallmo <masonstallmo@gmail.com>

## Issue Addressed

Resolves#2555

## Proposed Changes

Don't log errors on resubscribing to topics. Also don't log errors if we are setting already set attnet/syncnet bits.

## Issue Addressed

Fix#2585

## Proposed Changes

Provide a canonical version of test_logger that can be used

throughout lighthouse.

## Additional Info

This allows tests to conditionally emit logging data by adding

test_logger as the default logger. And then when executing

`cargo test --features logging/test_logger` log output

will be visible:

wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)

$ cargo test --features logging/test_logger

Finished test [unoptimized + debuginfo] target(s) in 0.02s

Running unittests (target/debug/deps/test_logger-e20115db6a5e3714)

running 1 test

Sep 10 12:53:45.212 INFO hi, module: test_logger:8

test tests::test_fn_with_logging ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests test-logger

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Or, in normal scenarios where logging isn't needed, executing

`cargo test` the log output will not be visible:

wink@3900x:~/lighthouse/common/logging/tests/test-feature-test_logger (Add-test_logger-as-feature-to-logging)

$ cargo test

Finished test [unoptimized + debuginfo] target(s) in 0.02s

Running unittests (target/debug/deps/test_logger-02e02f8d41e8cf8a)

running 1 test

test tests::test_fn_with_logging ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests test-logger

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

## Issue Addressed

N/A

## Proposed Changes

We set a `$TAG` env variable in the github actions workflow, and then re-use this name in the `publish.sh` script. It makes this check `if [[ -z "$TAG" ]]` return true, when it should return false on the first time it's hit.

## Additional Info

N/A

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

This PR addresses an issue found by @YorickDowne during testing of v2.0.0-rc.0.

Due to a lack of atomic database writes on checkpoint sync start-up, it was possible for the database to get into an inconsistent state from which it couldn't recover without `--purge-db`. The core of the issue was that the store's anchor info was being stored _before_ the `PersistedBeaconChain`. If a crash occured so that anchor info was stored but _not_ the `PersistedBeaconChain`, then on restart Lighthouse would think the database was unitialized and attempt to compare-and-swap a `None` value, but would actually find the stale info from the previous run.

## Proposed Changes

The issue is fixed by writing the anchor info, the split point, and the `PersistedBeaconChain` atomically on start-up. Some type-hinting ugliness was required, which could possibly be cleaned up in future refactors.

## Issue Addressed

This PR addresses issue #2657

## Proposed Changes

Changes `/eth/v1/config/deposit_contract` endpoint to return the chain ID from the loaded chain spec instead of eth1::DEFAULT_NETWORK_ID which is the Goerli chain ID of 5.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Proposed Changes

Cut the first release candidate for v2.0.0, in preparation for testing and release this week

## Additional Info

Builds on #2632, which should either be merged first or in the same batch

## Issue Addressed

N/A

## Proposed Changes

When peers switching to a disconnecting state, decrement the disconnected peers counter. This also downgrades some crit logs to errors.

I've also added a re-sync point when peers get unbanned the disconnected peer count will match back to the number of disconnected peers if it has gone out of sync previously.

## Issue Addressed

Closes#2528

## Proposed Changes

- Add `BlockTimesCache` to provide block timing information to `BeaconChain`. This allows additional metrics to be calculated for blocks that are set as head too late.

- Thread the `seen_timestamp` of blocks received from RPC responses (except blocks from syncing) through to the sync manager, similar to what is done for blocks from gossip.

## Additional Info

This provides the following additional metrics:

- `BEACON_BLOCK_OBSERVED_SLOT_START_DELAY_TIME`

- The delay between the start of the slot and when the block was first observed.

- `BEACON_BLOCK_IMPORTED_OBSERVED_DELAY_TIME`

- The delay between when the block was first observed and when the block was imported.

- `BEACON_BLOCK_HEAD_IMPORTED_DELAY_TIME`

- The delay between when the block was imported and when the block was set as head.

The metric `BEACON_BLOCK_IMPORTED_SLOT_START_DELAY_TIME` was removed.

A log is produced when a block is set as head too late, e.g.:

```

Aug 27 03:46:39.006 DEBG Delayed head block set_as_head_delay: Some(21.731066ms), imported_delay: Some(119.929934ms), observed_delay: Some(3.864596988s), block_delay: 4.006257988s, slot: 1931331, proposer_index: 24294, block_root: 0x937602c89d3143afa89088a44bdf4b4d0d760dad082abacb229495c048648a9e, service: beacon

```

## Issue Addressed

Resolves#2552

## Proposed Changes

Offers some improvement in inclusion distance calculation in the validator monitor.

When registering an attestation from a block, instead of doing `block.slot() - attesstation.data.slot()` to get the inclusion distance, we now pass the parent block slot from the beacon chain and do `parent_slot.saturating_sub(attestation.data.slot())`. This allows us to give best effort inclusion distance in scenarios where the attestation was included right after a skip slot. Note that this does not give accurate results in scenarios where the attestation was included few blocks after the skip slot.

In this case, if the attestation slot was `b1` and was included in block `b2` with a skip slot in between, we would get the inclusion delay as 0 (by ignoring the skip slot) which is the best effort inclusion delay.

```

b1 <- missed <- b2

```

Here, if the attestation slot was `b1` and was included in block `b3` with a skip slot and valid block `b2` in between, then we would get the inclusion delay as 2 instead of 1 (by ignoring the skip slot).

```

b1 <- missed <- b2 <- b3

```

A solution for the scenario 2 would be to count number of slots between included slot and attestation slot ignoring the skip slots in the beacon chain and pass the value to the validator monitor. But I'm concerned that it could potentially lead to db accesses for older blocks in extreme cases.

This PR also uses the validator monitor data for logging per epoch inclusion distance. This is useful as we won't get inclusion data in post-altair summaries.

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Proposed Changes

Instead of checking for strict equality between a BN's spec and the VC's local spec, just check the genesis fork version. This prevents us from failing eagerly for minor differences, while still protecting the VC from connecting to a completely incompatible BN.

A warning is retained for the previous case where the specs are not exactly equal, which is to be expected if e.g. running against Infura before Infura configures the mainnet Altair fork epoch.

## Proposed Changes

Add tooling to lcli to provide a way to measure the attestation packing efficiency of historical blocks by querying a beacon node API endpoint.

## Additional Info

Since block rewards are proportional to the number of unique attestations included in the block, a measure of efficiency can be calculated by comparing the number of unique attestations that could have been included into a block vs the number of unique attestations that were actually included.

This lcli tool provides the following data per block:

- Slot Number

- Proposer Index and Grafitti (if any)

- Available Unique Attestations

- Included Unique Attestations

- Best-effort estimate of the number of offline validators for the epoch. This means we can normalize the calculated efficiency, removing offline validators from the available attestation set.

The data is outputted as a csv file.

## Usage

Install lcli:

```

make install-lcli

```

Alternatively install with the `fake_crypto` feature to skip signature verification which improves performance:

```

cargo install --path lcli --features=fake_crypto --force --locked

```

Ensure a Lighthouse beacon node is running and synced. A non-default API endpoint can be passed with the `--endpoint` flag.

Run:

```

lcli etl-block-efficiency --output /path/to/output.csv --start-epoch 40 --end-epoch 80

```

## Issue Addressed

NA

## Proposed Changes

As `cargo audit` astutely pointed out, the version of `zeroize_derive` were were using had a vulnerability:

```

Crate: zeroize_derive

Version: 1.1.0

Title: `#[zeroize(drop)]` doesn't implement `Drop` for `enum`s

Date: 2021-09-24

ID: RUSTSEC-2021-0115

URL: https://rustsec.org/advisories/RUSTSEC-2021-0115

Solution: Upgrade to >=1.2.0

```

This PR updates `zeroize` and `zeroize_derive` to appease `cargo audit`.

`tiny-bip39` was also updated to allow compile.

## Additional Info

I don't believe this vulnerability actually affected the Lighthouse code-base directly. However, `tiny-bip39` may have been affected which may have resulted in some uncleaned memory in Lighthouse. Whilst this is not ideal, it's not a major issue. Zeroization is a nice-to-have since it only protects from sophisticated attacks or attackers that already have a high level of access already.

## Issue Addressed

NA

## Proposed Changes

Implements the "union" type from the SSZ spec for `ssz`, `ssz_derive`, `tree_hash` and `tree_hash_derive` so it may be derived for `enums`:

https://github.com/ethereum/consensus-specs/blob/v1.1.0-beta.3/ssz/simple-serialize.md#union

The union type is required for the merge, since the `Transaction` type is defined as a single-variant union `Union[OpaqueTransaction]`.

### Crate Updates

This PR will (hopefully) cause CI to publish new versions for the following crates:

- `eth2_ssz_derive`: `0.2.1` -> `0.3.0`

- `eth2_ssz`: `0.3.0` -> `0.4.0`

- `eth2_ssz_types`: `0.2.0` -> `0.2.1`

- `tree_hash`: `0.3.0` -> `0.4.0`

- `tree_hash_derive`: `0.3.0` -> `0.4.0`

These these crates depend on each other, I've had to add a workspace-level `[patch]` for these crates. A follow-up PR will need to remove this patch, ones the new versions are published.

### Union Behaviors

We already had SSZ `Encode` and `TreeHash` derive for enums, however it just did a "transparent" pass-through of the inner value. Since the "union" decoding from the spec is in conflict with the transparent method, I've required that all `enum` have exactly one of the following enum-level attributes:

#### SSZ

- `#[ssz(enum_behaviour = "union")]`

- matches the spec used for the merge

- `#[ssz(enum_behaviour = "transparent")]`

- maintains existing functionality

- not supported for `Decode` (never was)

#### TreeHash

- `#[tree_hash(enum_behaviour = "union")]`

- matches the spec used for the merge

- `#[tree_hash(enum_behaviour = "transparent")]`

- maintains existing functionality

This means that we can maintain the existing transparent behaviour, but all existing users will get a compile-time error until they explicitly opt-in to being transparent.

### Legacy Option Encoding

Before this PR, we already had a union-esque encoding for `Option<T>`. However, this was with the *old* SSZ spec where the union selector was 4 bytes. During merge specification, the spec was changed to use 1 byte for the selector.

Whilst the 4-byte `Option` encoding was never used in the spec, we used it in our database. Writing a migrate script for all occurrences of `Option` in the database would be painful, especially since it's used in the `CommitteeCache`. To avoid the migrate script, I added a serde-esque `#[ssz(with = "module")]` field-level attribute to `ssz_derive` so that we can opt into the 4-byte encoding on a field-by-field basis.

The `ssz::legacy::four_byte_impl!` macro allows a one-liner to define the module required for the `#[ssz(with = "module")]` for some `Option<T> where T: Encode + Decode`.

Notably, **I have removed `Encode` and `Decode` impls for `Option`**. I've done this to force a break on downstream users. Like I mentioned, `Option` isn't used in the spec so I don't think it'll be *that* annoying. I think it's nicer than quietly having two different union implementations or quietly breaking the existing `Option` impl.

### Crate Publish Ordering

I've modified the order in which CI publishes crates to ensure that we don't publish a crate without ensuring we already published a crate that it depends upon.

## TODO

- [ ] Queue a follow-up `[patch]`-removing PR.

## Issue Addressed

NA

## Proposed Changes

Adds the ability to verify batches of aggregated/unaggregated attestations from the network.

When the `BeaconProcessor` finds there are messages in the aggregated or unaggregated attestation queues, it will first check the length of the queue:

- `== 1` verify the attestation individually.

- `>= 2` take up to 64 of those attestations and verify them in a batch.

Notably, we only perform batch verification if the queue has a backlog. We don't apply any artificial delays to attestations to try and force them into batches.

### Batching Details

To assist with implementing batches we modify `beacon_chain::attestation_verification` to have two distinct categories for attestations:

- *Indexed* attestations: those which have passed initial validation and were valid enough for us to derive an `IndexedAttestation`.

- *Verified* attestations: those attestations which were indexed *and also* passed signature verification. These are well-formed, interesting messages which were signed by validators.

The batching functions accept `n` attestations and then return `n` attestation verification `Result`s, where those `Result`s can be any combination of `Ok` or `Err`. In other words, we attempt to verify as many attestations as possible and return specific per-attestation results so peer scores can be updated, if required.

When we batch verify attestations, we first try to map all those attestations to *indexed* attestations. If any of those attestations were able to be indexed, we then perform batch BLS verification on those indexed attestations. If the batch verification succeeds, we convert them into *verified* attestations, disabling individual signature checking. If the batch fails, we convert to verified attestations with individual signature checking enabled.

Ultimately, we optimistically try to do a batch verification of attestation signatures and fall-back to individual verification if it fails. This opens an attach vector for "poisoning" the attestations and causing us to waste a batch verification. I argue that peer scoring should do a good-enough job of defending against this and the typical-case gains massively outweigh the worst-case losses.

## Additional Info

Before this PR, attestation verification took the attestations by value (instead of by reference). It turns out that this was unnecessary and, in my opinion, resulted in some undesirable ergonomics (e.g., we had to pass the attestation back in the `Err` variant to avoid clones). In this PR I've modified attestation verification so that it now takes a reference.

I refactored the `beacon_chain/tests/attestation_verification.rs` tests so they use a builder-esque "tester" struct instead of a weird macro. It made it easier for me to test individual/batch with the same set of tests and I think it was a nice tidy-up. Notably, I did this last to try and make sure my new refactors to *actual* production code would pass under the existing test suite.

## Issue Addressed

Closes#1891Closes#1784

## Proposed Changes

Implement checkpoint sync for Lighthouse, enabling it to start from a weak subjectivity checkpoint.

## Additional Info

- [x] Return unavailable status for out-of-range blocks requested by peers (#2561)

- [x] Implement sync daemon for fetching historical blocks (#2561)

- [x] Verify chain hashes (either in `historical_blocks.rs` or the calling module)

- [x] Consistency check for initial block + state

- [x] Fetch the initial state and block from a beacon node HTTP endpoint

- [x] Don't crash fetching beacon states by slot from the API

- [x] Background service for state reconstruction, triggered by CLI flag or API call.

Considered out of scope for this PR:

- Drop the requirement to provide the `--checkpoint-block` (this would require some pretty heavy refactoring of block verification)

Co-authored-by: Diva M <divma@protonmail.com>

In previous network updates we have made our libp2p connections more lean by limiting the maximum number of connections a lighthouse node will accept before libp2p rejects new connections.

However, we still maintain the logic that at maximum connections, we try to dial extra peers if they are needed by a validator client to publish messages on a specific subnet. The dials typically result in failures due the libp2p connection limits.

This PR adds an extra factor, `PRIORITY_PEER_EXCESS` which sets aside a new allocation of peers we are able to dial in case we need these peers for the VC client. This allocation sits along side the excess peer (which allows extra incoming peers on top of our target peer limit).

The drawback here, is that libp2p now allows extra peers to connect to us (beyond the standard peer limit) which the peer manager should subsequently reject.

## Issue Addressed

N/A

## Proposed Changes

Add a note to the Doppelganger Protection docs about how it is not interoperable until an endpoint facilitating it is standardized (https://github.com/ethereum/beacon-APIs/pull/131).

## Additional Info

N/A

Co-authored-by: realbigsean <seananderson33@gmail.com>

This PR in general improves the handling around peer banning.

Specifically there were issues when multiple peers under a single IP connected to us after we banned the IP for poor behaviour.

This PR should now handle these peers gracefully as well as make some improvements around how we previously disconnected and banned peers.

The logic now goes as follows:

- Once a peer gets banned, its gets registered with its known IP addresses

- Once enough banned peers exist under a single IP that IP is banned

- We retain connections with existing peers under this IP

- Any new connections under this IP are rejected

## Issue Addressed

N/A

## Proposed Changes

Add a fork_digest to `ForkContext` only if it is set in the config.

Reject gossip messages on post fork topics before the fork happens.

Edit: Instead of rejecting gossip messages on post fork topics, we now subscribe to post fork topics 2 slots before the fork.

Co-authored-by: Age Manning <Age@AgeManning.com>

Sigma Prime is transitioning our mainnet bootnodes and this PR represents the transition of our bootnodes.

After a few releases, old boot-nodes will be deprecated.

# Description

A few changes have been made to discovery. In particular a custom re-write of an LRU cache which previously was read/write O(N) for all our sessions ~5k, to a more reasonable hashmap-style O(1).

Further there has been reported issues in the current discv5, so added error handling to help identify the issue has been added.

[EIP-3030]: https://eips.ethereum.org/EIPS/eip-3030

[Web3Signer]: https://consensys.github.io/web3signer/web3signer-eth2.html

## Issue Addressed

Resolves#2498

## Proposed Changes

Allows the VC to call out to a [Web3Signer] remote signer to obtain signatures.

## Additional Info

### Making Signing Functions `async`

To allow remote signing, I needed to make all the signing functions `async`. This caused a bit of noise where I had to convert iterators into `for` loops.

In `duties_service.rs` there was a particularly tricky case where we couldn't hold a write-lock across an `await`, so I had to first take a read-lock, then grab a write-lock.

### Move Signing from Core Executor

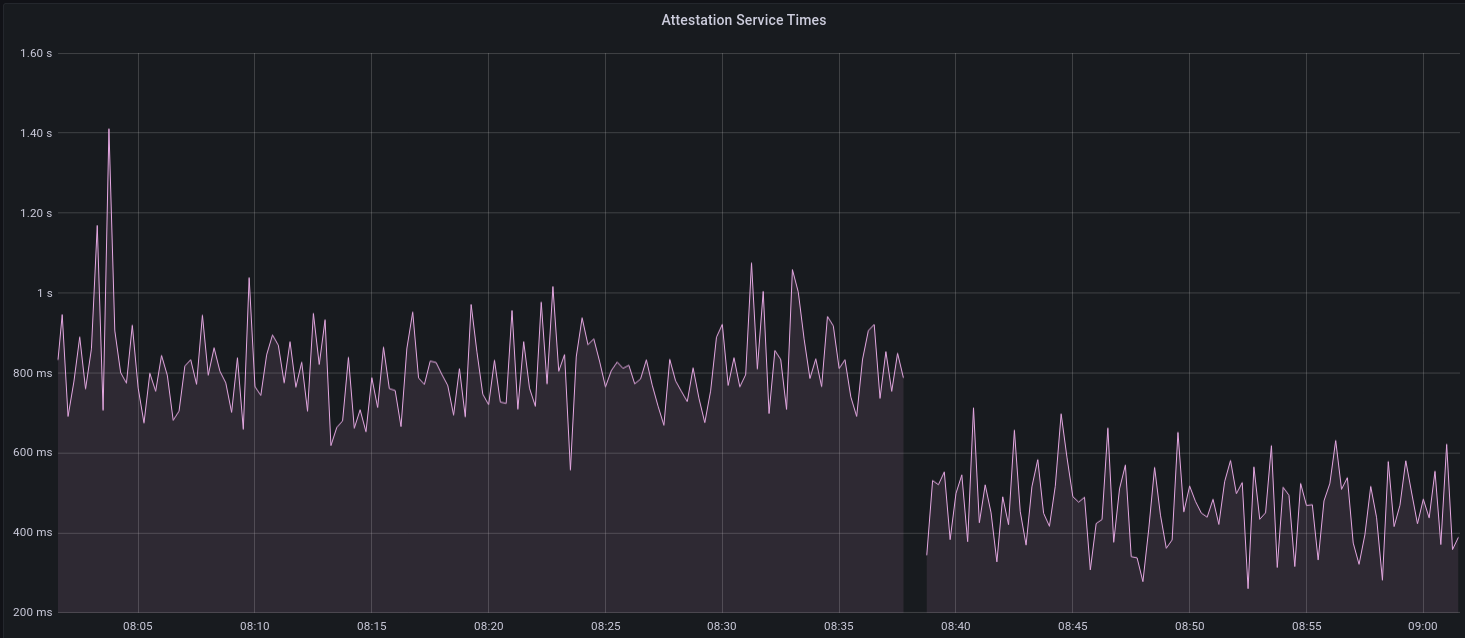

Whilst implementing this feature, I noticed that we signing was happening on the core tokio executor. I suspect this was causing the executor to temporarily lock and occasionally trigger some HTTP timeouts (and potentially SQL pool timeouts, but I can't verify this). Since moving all signing into blocking tokio tasks, I noticed a distinct drop in the "atttestations_http_get" metric on a Prater node:

I think this graph indicates that freeing the core executor allows the VC to operate more smoothly.

### Refactor TaskExecutor

I noticed that the `TaskExecutor::spawn_blocking_handle` function would fail to spawn tasks if it were unable to obtain handles to some metrics (this can happen if the same metric is defined twice). It seemed that a more sensible approach would be to keep spawning tasks, but without metrics. To that end, I refactored the function so that it would still function without metrics. There are no other changes made.

## TODO

- [x] Restructure to support multiple signing methods.

- [x] Add calls to remote signer from VC.

- [x] Documentation

- [x] Test all endpoints

- [x] Test HTTPS certificate

- [x] Allow adding remote signer validators via the API

- [x] Add Altair support via [21.8.1-rc1](https://github.com/ConsenSys/web3signer/releases/tag/21.8.1-rc1)

- [x] Create issue to start using latest version of web3signer. (See #2570)

## Notes

- ~~Web3Signer doesn't yet support the Altair fork for Prater. See https://github.com/ConsenSys/web3signer/issues/423.~~

- ~~There is not yet a release of Web3Signer which supports Altair blocks. See https://github.com/ConsenSys/web3signer/issues/391.~~

## Proposed Changes

* Modify the `TaskExecutor` so that it spawns a "monitor" future for each future spawned by `spawn` or `spawn_blocking`. This monitor future joins the handle of the child future and shuts down the executor if it detects a panic.

* Enable backtraces by default by setting the environment variable `RUST_BACKTRACE`.

* Spawn the `ProductionBeaconNode` on the `TaskExecutor` so that if a panic occurs during start-up it will take down the whole process. Previously we were using a raw Tokio `spawn`, but I can't see any reason not to use the executor (perhaps someone else can).

## Additional Info

I considered using [`std::panic::set_hook`](https://doc.rust-lang.org/std/panic/fn.set_hook.html) to instantiate a custom panic handler, however this doesn't allow us to send a shutdown signal because `Fn` functions can't move variables (i.e. the shutdown sender) out of their environment. This also prevents it from receiving a `Logger`. Hence I decided to leave the panic handler untouched, but with backtraces turned on by default.

I did a run through the code base with all the raw Tokio spawn functions disallowed by Clippy, and found only two instances where we bypass the `TaskExecutor`: the HTTP API and `InitializedValidators` in the VC. In both places we use `spawn_blocking` and handle the return value, so I figured that was OK for now.

In terms of performance I think the overhead should be minimal. The monitor tasks will just get parked by the executor until their child resolves.

I've checked that this covers Discv5, as the `TaskExecutor` gets injected into Discv5 here: f9bba92db3/beacon_node/src/lib.rs (L125-L126)

The identify network debug logs can get quite noisy and are unnecessary to print on every request/response.

This PR reduces debug noise by only printing messages for identify messages that offer some new information.

## Issue Addressed

Resolves#2582

## Proposed Changes

Use `quoted_u64` and `quoted_u64_vec` custom serde deserializers from `eth2_serde_utils` to support the proper Eth2.0 API spec for `/eth/v1/validator/sync_committee_subscriptions`

## Additional Info

N/A

Move the contents of book/src/local-testnets.md into book/src/setup.md

to make it more discoverable.

Also, the link to scripts/local_testnet was missing `/local_testnet`.

## Proposed Changes

Cache the total active balance for the current epoch in the `BeaconState`. Computing this value takes around 1ms, and this was negatively impacting block processing times on Prater, particularly when reconstructing states.

With a large number of attestations in each block, I saw the `process_attestations` function taking 150ms, which means that reconstructing hot states can take up to 4.65s (31 * 150ms), and reconstructing freezer states can take up to 307s (2047 * 150ms).

I opted to add the cache to the beacon state rather than computing the total active balance at the start of state processing and threading it through. Although this would be simpler in a way, it would waste time, particularly during block replay, as the total active balance doesn't change for the duration of an epoch. So we save ~32ms for hot states, and up to 8.1s for freezer states (using `--slots-per-restore-point 8192`).

## Proposed Changes

This PR deletes all `remote_signer` code from Lighthouse, for the following reasons:

* The `remote_signer` code is unused, and we have no plans to use it now that we're moving to supporting the Web3Signer APIs: #2522

* It represents a significant maintenance burden. The HTTP API tests have been prone to platform-specific failures, and breakages due to dependency upgrades, e.g. #2400.

Although the code is deleted it remains in the Git history should we ever want to recover it. For ease of reference:

- The last commit containing remote signer code: 5a3bcd2904

- The last Lighthouse version: v1.5.1

## Proposed Changes

Remove the SIGPIPE handler added in #2486.

We saw some of the testnet nodes running under `systemd` being stopped due to `journald` restarts. The systemd docs state:

> If systemd-journald.service is stopped, the stream connections associated with all services are terminated. Further writes to those streams by the service will result in EPIPE errors. In order to react gracefully in this case it is recommended that programs logging to standard output/error ignore such errors. If the SIGPIPE UNIX signal handler is not blocked or turned off, such write attempts will also result in such process signals being generated, see signal(7).

From https://www.freedesktop.org/software/systemd/man/systemd-journald.service.html

## Additional Info

It turned out that the issue described in #2114 was due to `tee`'s behaviour rather than Lighthouse's, so the SIGPIPE handler isn't required for any current use case. An alternative to disabling it all together would be to exit with a non-zero code so that systemd knows to restart the process, but it seems more desirable to tolerate journald glitches than to restart frequently.

## Issue Addressed

N/A

## Proposed Changes

Set a valid fork epoch in the simulator and run checks on

1. If all nodes transitioned at the fork

2. If all altair block sync aggregates are full

## Issue Addressed

Resolves#2438Resolves#2437

## Proposed Changes

Changes the permissions for validator client http server api token file and secret key to 600 from 644. Also changes the permission for logfiles generated using the `--logfile` cli option to 600.

Logs the path to the api token instead of the actual api token. Updates docs to reflect the change.

## Issue Addressed

Related to: #2259

Made an attempt at all the necessary updates here to publish the crates to crates.io. I incremented the minor versions on all the crates that have been previously published. We still might run into some issues as we try to publish because I'm not able to test this out but I think it's a good starting point.

## Proposed Changes

- Add description and license to `ssz_types` and `serde_util`

- rename `serde_util` to `eth2_serde_util`

- increment minor versions

- remove path dependencies

- remove patch dependencies

## Additional Info

Crates published:

- [x] `tree_hash` -- need to publish `tree_hash_derive` and `eth2_hashing` first

- [x] `eth2_ssz_types` -- need to publish `eth2_serde_util` first

- [x] `tree_hash_derive`

- [x] `eth2_ssz`

- [x] `eth2_ssz_derive`

- [x] `eth2_serde_util`

- [x] `eth2_hashing`

Co-authored-by: realbigsean <seananderson33@gmail.com>