If a node is also a bootnode it can try to add itself to its own local routing table which will emit an error.

The error is entirely harmless but we would prefer to avoid emitting the error.

With this PR, we no longer attempt to add a boot node ENR if that ENR corresponds to our local peer-id/node-id.
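As a rough illustration of the check described above, the sketch below filters a list of boot nodes against the local node id. `NodeId`, `BootEnr`, and `bootnodes_to_add` are hypothetical stand-ins invented for this example, not the types or functions used in Lighthouse or discv5.

```rust
/// Hypothetical 32-byte node identifier (assumption: the real type lives in the discovery crate).
type NodeId = [u8; 32];

/// Hypothetical, stripped-down stand-in for an ENR.
#[derive(Debug, Clone, PartialEq)]
struct BootEnr {
    node_id: NodeId,
    address: String,
}

/// Returns the boot nodes that should be added to the routing table,
/// skipping any entry whose node id matches our own.
fn bootnodes_to_add(local_id: &NodeId, boot_enrs: &[BootEnr]) -> Vec<BootEnr> {
    boot_enrs
        .iter()
        .filter(|enr| &enr.node_id != local_id)
        .cloned()
        .collect()
}
```

Filtering before insertion means the routing table never sees the local ENR, so the error path is never reached.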

## Issue Addressed

Resolves #4061

## Proposed Changes

Adds a message to tell users to check their EE.

## Additional Info

I really struggled to come up with something succinct and complete, so I'm totally open to feedback.

## Issue Addressed

NA

## Proposed Changes

When producing a block from a builder, there are two points where we could consider the block "broadcast":

1. When the blinded block is published to the builder.

2. When the un-blinded block is published to the P2P network (this is always *after* the previous step).

Our logging for late block broadcasts was using (2) for builder-blocks, which was creating a lot of false-positive logs. This is because the builder publishes the block on the P2P network themselves before returning it to us and we perform (2). For clarity, the logs were false-positives because we claim that the block was published late by us when it was actually published earlier by the builder.

This PR changes our logging behavior so we do our logging at (1) instead. It also updates our metrics for block broadcast to distinguish between local and builder blocks. I believe the metrics change will be natively compatible with existing Grafana dashboards.

## Additional Info

One could argue that the builder *should* return the block to us faster, however that's not the case. I think it's more important that we don't desensitize users with false-positives.

## Issue Addressed

Closes #3814, replaces #3818.

## Proposed Changes

* Add a WARN log for the case where we are attempting to sync chain segments but can't process them because they're building on an invalid parent. The most common case where we see this is when the execution node database is corrupt, causing sync to stall mysteriously (because we're currently logging the failure only at debug level).

* Additionally I've bumped up the logging for invalid execution payloads to `WARN`. This may result in some duplicate logs as we log errors from the `beacon_chain` and then again from the beacon processor. Invalid payloads and corrupt DBs _should_ be rare enough that this doesn't produce overwhelming log volume.

## Issue Addressed

Added a note to the Lighthouse book telling users the minimum Lighthouse version required to run the Siren UI.


There is a race condition which occurs when multiple discovery queries return at almost the exact same time and they independently contain a useful peer we would like to connect to.

When this happens, we can add the same peer to the dial queue more than once before we get a chance to process the queue.

This ends up displaying an error to the user:

```
ERRO Dialing an already dialing peer
```

Although this error is harmless it's not ideal.

There are two ways to resolve this:

1. As we decide to dial the peer, we change the state in the peer-db to dialing (before we add it to the queue) which would prevent other requests from adding to the queue.

2. We prevent duplicates in the dial queue.

This PR has opted for 2. because 1. would complicate the code by changing states in non-intuitive places. Although this technically adds a very slight performance cost, it's probably a cleaner solution as we can keep the state-changing logic in one place.
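One way to prevent duplicates in a dial queue is sketched below. This is not the code from the PR: `DialQueue` and the `u64` `PeerId` alias are hypothetical, and the extra `HashSet` is just one option (a linear scan of the queue would also work).

```rust
use std::collections::{HashSet, VecDeque};

/// Hypothetical peer identifier, standing in for libp2p's `PeerId`.
type PeerId = u64;

/// A FIFO dial queue that ignores peers which are already queued.
#[derive(Default)]
struct DialQueue {
    order: VecDeque<PeerId>,
    queued: HashSet<PeerId>,
}

impl DialQueue {
    /// Queues `peer` for dialing. Returns `false` if it was already queued.
    fn push(&mut self, peer: PeerId) -> bool {
        if self.queued.insert(peer) {
            self.order.push_back(peer);
            true
        } else {
            false
        }
    }

    /// Pops the next peer to dial, allowing it to be queued again later.
    fn pop(&mut self) -> Option<PeerId> {
        let peer = self.order.pop_front()?;
        self.queued.remove(&peer);
        Some(peer)
    }
}
```

With this shape, two discovery queries that return the same peer produce a single dial attempt, and the peer-db state is still only changed where the queue is processed.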

## Issue Addressed

#3938

## Proposed Changes

- `network::Processor` is deleted and all its logic is moved to `network::Router`.

- The `network::Router` module is moved to a single file.

- The following functions are deleted: `on_disconnect`, `send_status`, `on_status_response`, `on_blocks_by_root_request`, `on_lightclient_bootstrap`, `on_blocks_by_range_request`, `on_block_gossip`, `on_unaggregated_attestation_gossip`, `on_aggregated_attestation_gossip`, `on_voluntary_exit_gossip`, `on_proposer_slashing_gossip`, `on_attester_slashing_gossip`, `on_sync_committee_signature_gossip`, `on_sync_committee_contribution_gossip`, `on_light_client_finality_update_gossip`, `on_light_client_optimistic_update_gossip`. These deletions are possible because the updated `Router` allows the underlying methods to be called directly.

## Issue Addressed

- Add a complete match for `Protocol` here.

- The incomplete match was causing us not to append context bytes to the light client protocols

- This is the relevant part of the spec and it looks like context bytes are defined https://github.com/ethereum/consensus-specs/blob/dev/specs/altair/light-client/p2p-interface.md#getlightclientbootstrap

Disclaimer: I have no idea if people are using it, but it shouldn't have been working, so I'm not sure why it wasn't caught.

Co-authored-by: realbigsean <seananderson33@gmail.com>

## Issue Addressed

In #4027 I forgot to add the `parent_block_number` to the payload attributes SSE.

## Proposed Changes

Compute the parent block number while computing the pre-payload attributes. Pass it on to the SSE stream.

## Additional Info

Not essential for v3.5.1 as I suspect most builders don't need the `parent_block_number`. I would like to use it for my dummy no-op builder however.

## Issue Addressed

Add support for ipv6 and dual stack in lighthouse.

## Proposed Changes

From a user's perspective, setting an ipv6 address and optionally configuring the ports should now feel exactly the same as using an ipv4 address. If listening over both ipv4 and ipv6, the user needs to:

- use the `--listen-address` two times (ipv4 and ipv6 addresses)

- `--port6` then becomes required

- `--discovery-port6` can now be used to additionally configure the ipv6 udp port

### Rough list of code changes

- Discovery:

  - Table filter and ip mode set to match the listening config.

  - Ipv6 address, tcp port and udp port set in the ENR builder.

  - Reported addresses now check which tcp port to give to libp2p.

- LH Network Service:

  - Can listen over Ipv6, Ipv4, or both. This uses two sockets. Using mapped addresses is disabled from libp2p and it's the most compatible option.

- NetworkGlobals:

  - No longer stores the udp port since it was not used at all. Instead, stores the Ipv4 and Ipv6 TCP ports.

- NetworkConfig:

  - Update names to make it clear that previous udp and tcp ports in ENR were Ipv4.

  - Add fields to configure Ipv6 udp and tcp ports in the ENR.

  - Include advertised enr Ipv6 address.

  - Add type to model Listening address that's either Ipv4, Ipv6 or both. A listening address includes the ip, udp port and tcp port.

- UPnP:

  - Kept only for ipv4.

- Cli flags:

  - `--listen-address` now can take up to two values.

  - `--port` will apply to ipv4 or ipv6 if only one listening address is given. If two listening addresses are given it will apply only to Ipv4.

  - `--port6` New flag required when listening over ipv4 and ipv6 that applies exclusively to Ipv6.

  - `--discovery-port` will now apply to ipv4 and ipv6 if only one listening address is given.

  - `--discovery-port6` New flag to configure the individual udp port of ipv6 if listening over both ipv4 and ipv6.

  - `--enr-udp-port` Updated docs to specify that it only applies to ipv4. This is an old behaviour.

  - `--enr-udp6-port` Added to configure the enr udp6 field.

  - `--enr-tcp-port` Updated docs to specify that it only applies to ipv4. This is an old behaviour.

  - `--enr-tcp6-port` Added to configure the enr tcp6 field.

  - `--enr-addresses` now can take two values.

  - `--enr-match` updated behaviour.

- Common:

  - Rename `unused_port` functions to specify that they are over ipv4.

  - Add functions to get unused ports over ipv6.

- Testing binaries:

  - Updated code to reflect network config changes and unused_port changes.
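The `--port` / `--port6` rules listed above can be sketched as follows. This is a simplified model covering only TCP ports; `ListenAddr`, `ListenAddress`, and `resolve_listen_address` are hypothetical names chosen for this example and are not claimed to match the types added in the PR.

```rust
use std::net::{IpAddr, Ipv4Addr, Ipv6Addr};

/// Hypothetical listening socket description (TCP only, for brevity).
#[derive(Debug, PartialEq)]
struct ListenAddr<Ip> {
    addr: Ip,
    tcp_port: u16,
}

/// Hypothetical model of "Ipv4, Ipv6 or both".
#[derive(Debug, PartialEq)]
enum ListenAddress {
    V4(ListenAddr<Ipv4Addr>),
    V6(ListenAddr<Ipv6Addr>),
    DualStack(ListenAddr<Ipv4Addr>, ListenAddr<Ipv6Addr>),
}

/// Applies the flag rules: `port` is used for the single address, or for
/// ipv4 when two addresses are given; `port6` is required for dual stack.
fn resolve_listen_address(
    addrs: &[IpAddr],
    port: u16,
    port6: Option<u16>,
) -> Result<ListenAddress, String> {
    match addrs {
        [IpAddr::V4(ip)] => Ok(ListenAddress::V4(ListenAddr { addr: *ip, tcp_port: port })),
        [IpAddr::V6(ip)] => Ok(ListenAddress::V6(ListenAddr { addr: *ip, tcp_port: port })),
        [IpAddr::V4(v4), IpAddr::V6(v6)] | [IpAddr::V6(v6), IpAddr::V4(v4)] => {
            let port6 = port6
                .ok_or_else(|| "port6 is required when listening over ipv4 and ipv6".to_string())?;
            Ok(ListenAddress::DualStack(
                ListenAddr { addr: *v4, tcp_port: port },
                ListenAddr { addr: *v6, tcp_port: port6 },
            ))
        }
        _ => Err("expected one address, or one ipv4 and one ipv6 address".to_string()),
    }
}
```

Modelling the three cases as an enum makes the "two sockets" dual-stack case explicit instead of relying on ipv4-mapped ipv6 addresses.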

## Additional Info

TODOs:

- use two sockets in discovery. I'll get back to this and it's on https://github.com/sigp/discv5/pull/160

- lcli allow listening over two sockets in generate_bootnodes_enr

- add at least one smoke flag for ipv6 (I have tested this and works for me)

- update the book

## Proposed Changes

The current `/lighthouse/nat` implementation checks for _zero_ address updated messages, when it should check for a _non-zero_ number. This was spotted while debugging an issue on Discord where a user's ports weren't forwarded but `/lighthouse/nat` was still returning `true`.
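The inverted condition can be shown in isolation. `nat_open` and its `address_updated_count` parameter are hypothetical names for this sketch; the real endpoint reads the count from a metric.

```rust
/// Hypothetical helper: `address_updated_count` stands in for the number of
/// "address updated" messages observed by discovery.
fn nat_open(address_updated_count: u64) -> bool {
    // The buggy check was equivalent to `address_updated_count == 0`,
    // which reported `true` exactly when no external address was confirmed.
    address_updated_count > 0
}
```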

## Issue Addressed

#3435

## Proposed Changes

Fire a warning with the path of the JWT to be created when the path given by `--execution-jwt` is not found.

Currently, the same error is logged if the jwt is found but doesn't match the execution client's jwt, and if no jwt was found at the given path. This makes it very hard to tell if you accidentally typed the wrong path, as a new jwt is created silently that won't match the execution client's jwt. So instead, it will now fire a warning stating that a jwt is being generated at the given path.

## Additional Info

In the future, it may be smarter to handle this case by adding an InvalidJWTPath member to the Error enum in lib.rs or auth.rs that can be handled during upcheck().

This is my first PR and first project with Rust, so thanks to anyone who looks at this for their patience and help!

Co-authored-by: Sebastian Richel <47844429+sebastianrich18@users.noreply.github.com>

## Proposed Changes

Two tiny updates to satisfy Clippy 1.68

Plus refactoring of the `http_api` into less complex types so the compiler can chew and digest them more easily.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

#4040

## Proposed Changes

- Add the `always_prefer_builder_payload` field to `Config` in `beacon_node/client/src/config.rs`.

- Add that same field to `Inner` in `beacon_node/execution_layer/src/lib.rs`

- Modify the logic for picking the payload in `beacon_node/execution_layer/src/lib.rs`

- Add the `always-prefer-builder-payload` flag to the beacon node CLI

- Test the new flags in `lighthouse/tests/beacon_node.rs`
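A minimal sketch of how such a flag could affect payload selection is shown below. Only the flag name comes from the list above; `PayloadSource`, `choose_payload`, and the fallback of comparing payload values are assumptions made for illustration and may differ from the logic in `beacon_node/execution_layer/src/lib.rs`.

```rust
/// Hypothetical marker for where the chosen payload came from.
#[derive(Debug, PartialEq)]
enum PayloadSource {
    Builder,
    Local,
}

/// Picks a payload source. When the flag is unset, this sketch assumes the
/// more valuable payload wins (an assumption, not confirmed by the PR text).
fn choose_payload(
    always_prefer_builder_payload: bool,
    builder_value_wei: u128,
    local_value_wei: u128,
) -> PayloadSource {
    if always_prefer_builder_payload || builder_value_wei >= local_value_wei {
        PayloadSource::Builder
    } else {
        PayloadSource::Local
    }
}
```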

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

NA

## Proposed Changes

In #4024 we added metrics to expose the latency measurements from a VC to each BN. Whilst playing with these new metrics on our infra I realised it would be great to have a single metric to make sure that the primary BN for each VC has a reasonable latency. With the current "metrics for all BNs" it's hard to tell which is the primary.

## Additional Info

NA

## Issue Addressed

NA

## Proposed Changes

- Adds a `WARN` statement for Capella, just like the previous forks.

- Adds a hint message to all those WARNs to suggest the user update the BN or VC.

## Additional Info

NA

## Proposed Changes

Fix the cargo audit failure caused by [RUSTSEC-2023-0018](https://rustsec.org/advisories/RUSTSEC-2023-0018) which we were exposed to via `tempfile`.

## Additional Info

I've held back the libp2p crate for now because it seemed to introduce another duplicate dependency on libp2p-core, for a total of 3 copies. Maybe that's fine, but we can sort it out later.

## Issue Addressed

Closes #3963 (hopefully)

## Proposed Changes

Compute attestation selection proofs gradually each slot rather than in a single `join_all` at the start of each epoch. On a machine with 5k validators this replaces 5k tasks signing 5k proofs with 1 task that signs 5k/32 ~= 160 proofs each slot.

Based on testing with Goerli validators this seems to reduce the average time to produce a signature by preventing Tokio and the OS from falling over each other trying to run hundreds of threads. My testing so far has been with local keystores, which run on a dynamic pool of up to 512 OS threads because they use [`spawn_blocking`](https://docs.rs/tokio/1.11.0/tokio/task/fn.spawn_blocking.html) (and we haven't changed the default).

An earlier version of this PR hyper-optimised the time-per-signature metric to the detriment of the entire system's performance (see the reverted commits). The current PR is conservative in that it avoids touching the attestation service at all. I think there's more optimising to do here, but we can come back for that in a future PR rather than expanding the scope of this one.

The new algorithm for attestation selection proofs is:

- We sign a small batch of selection proofs each slot, for slots up to 8 slots in the future. On average we'll sign one slot's worth of proofs per slot, with an 8 slot lookahead.

- The batch is signed halfway through the slot when there is unlikely to be contention for signature production (blocks are <4s, attestations are ~4-6 seconds, aggregates are 8s+).
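The lookahead rule from the list above can be sketched as a filter over pending duties. `Duty`, `duties_to_sign`, and `LOOKAHEAD_SLOTS` are hypothetical names; whether the current slot itself is included in the batch is an assumption of this sketch.

```rust
/// Lookahead taken from the description above (8 slots).
const LOOKAHEAD_SLOTS: u64 = 8;

/// Hypothetical attester duty; `has_proof` marks duties that are already signed.
#[derive(Debug, Clone, PartialEq)]
struct Duty {
    validator_index: u64,
    slot: u64,
    has_proof: bool,
}

/// Returns the indices into `duties` whose selection proofs should be signed
/// during `current_slot`: unsigned duties within the lookahead window.
fn duties_to_sign(duties: &[Duty], current_slot: u64) -> Vec<usize> {
    let window = current_slot..=current_slot.saturating_add(LOOKAHEAD_SLOTS);
    duties
        .iter()
        .enumerate()
        .filter(|(_, duty)| !duty.has_proof && window.contains(&duty.slot))
        .map(|(index, _)| index)
        .collect()
}
```

Because the window advances by one slot each time, a steady state signs roughly one slot's worth of new duties per call, which matches the "one slot's worth of proofs per slot" behaviour described above.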

## Performance Data

_See first comment for updated graphs_.

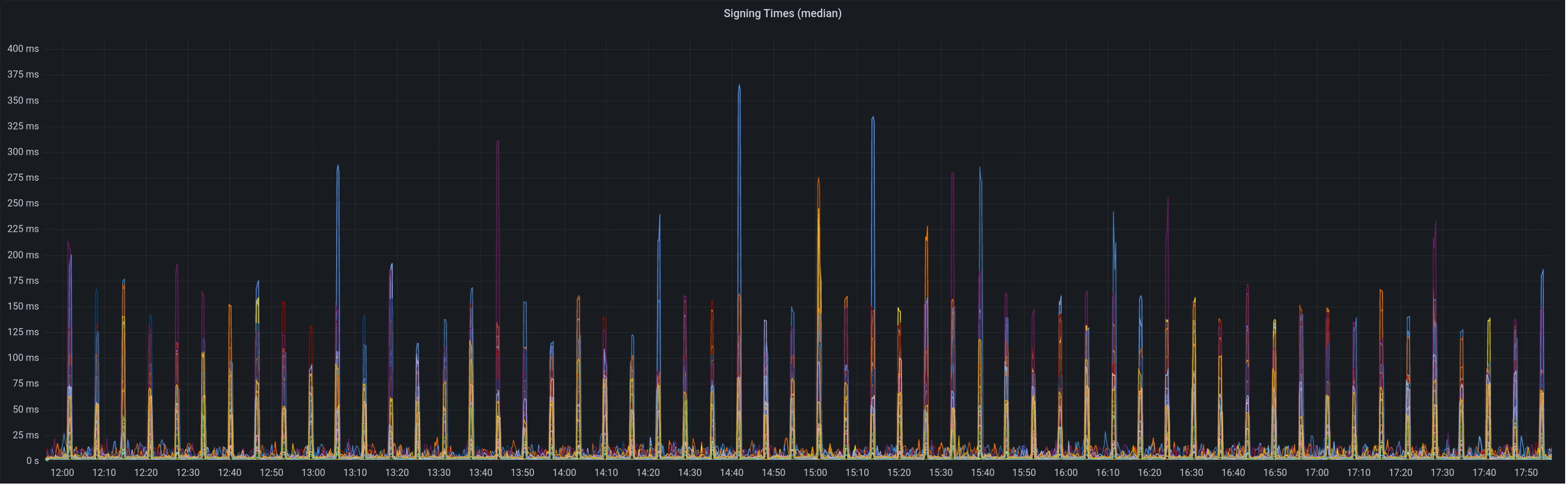

Graph of median signing times before this PR:

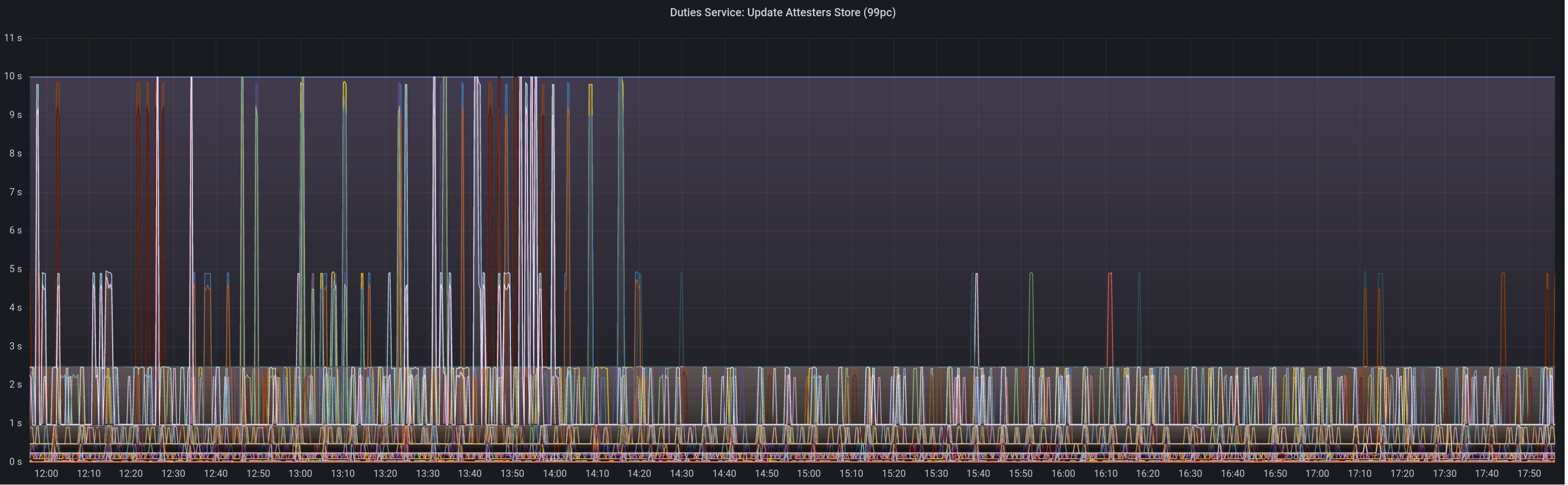

Graph of update attesters metric (includes selection proof signing) before this PR:

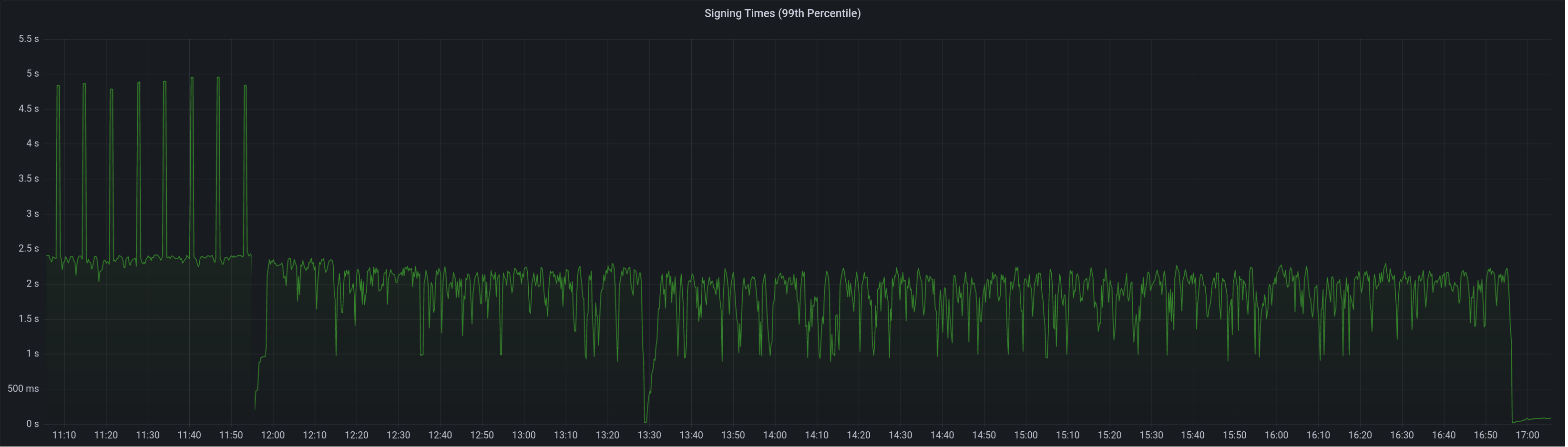

Median signing time after this PR (prototype from 12:00, updated version from 13:30):

99th percentile on signing times (bounded attestation signing from 16:55, now removed):

Attester map update timing after this PR:

Selection proof signings per second change:

## Link to late blocks

I believe this is related to the slow block signings because logs from Stakely in #3963 show these two logs almost 5 seconds apart:

> Feb 23 18:56:23.978 INFO Received unsigned block, slot: 5862880, service: block, module: validator_client::block_service:393

> Feb 23 18:56:28.552 INFO Publishing signed block, slot: 5862880, service: block, module: validator_client::block_service:416

The only thing that happens between those two logs is the signing of the block:

0fb58a680d/validator_client/src/block_service.rs (L410-L414)

Helpfully, Stakely noticed this issue without any Lighthouse BNs in the mix, which pointed to a clear issue in the VC.

## TODO

- [x] Further testing on testnet infrastructure.

- [x] Make the attestation signing parallelism configurable.

## Issue Addressed

Closes #3896, closes #3998, closes #3700

## Proposed Changes

- Optimise the calculation of withdrawals for payload attributes by avoiding state clones, avoiding unnecessary state advances and reading from the snapshot cache if possible.

- Use the execution layer's payload attributes cache to avoid re-calculating payload attributes. I actually implemented a new LRU cache just for withdrawals but it had the exact same key and most of the same data as the existing payload attributes cache, so I deleted it.

- Add a new SSE event that fires when payloadAttributes are calculated. This is useful for block builders, a la https://github.com/ethereum/beacon-APIs/issues/244.

- Add a new CLI flag `--always-prepare-payload` which forces payload attributes to be sent with every fcU regardless of connected proposers. This is intended for use by builders/relays.

For maximum effect, the flags I've been using to run Lighthouse in "payload builder mode" are:

```
--always-prepare-payload \
--prepare-payload-lookahead 12000 \
--suggested-fee-recipient 0x0000000000000000000000000000000000000000
```

The fee recipient is required so Lighthouse has something to pack in the payload attributes (it can be ignored by the builder). The lookahead causes fcU to be sent at the start of every slot rather than at 8s. As usual, fcU will also be sent after each change of head block. I think this combination is sufficient for builders to build on all viable heads. Often there will be two fcU (and two payload attributes) sent for the same slot: one sent at the start of the slot with the head from `n - 1` as the parent, and one sent after the block arrives with `n` as the parent.

Example usage of the new event stream:

```bash
curl -N "http://localhost:5052/eth/v1/events?topics=payload_attributes"
```

## Additional Info

- [x] Tests added by updating the proposer re-org tests. This has the benefit of testing the proposer re-org code paths with withdrawals too, confirming that the new changes don't interact poorly.

- [ ] Benchmarking with `blockdreamer` on devnet-7 showed promising results but I'm yet to do a comparison to `unstable`.

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Issue Addressed

NA

## Proposed Changes

Adds a service which periodically polls (11s into each mainnet slot) the `node/version` endpoint on each BN and roughly measures the round-trip latency. The latency is exposed as a `DEBG` log and a Prometheus metric.

The `--latency-measurement-service` has been added to the VC, with the following options:

- `--latency-measurement-service true`: enable the service (default).

- `--latency-measurement-service`: (without a value) has the same effect.

- `--latency-measurement-service false`: disable the service.
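A rough round-trip measurement of the kind described above can be sketched with `std::time::Instant`. `measure_latency` is a hypothetical helper, and the closure stands in for the HTTP request to the `node/version` endpoint; the actual service is asynchronous and is not reproduced here.

```rust
use std::time::{Duration, Instant};

/// Runs `request` and returns how long it took, or the request's error.
/// The closure is a stand-in for an HTTP call to a beacon node.
fn measure_latency<T, E>(request: impl FnOnce() -> Result<T, E>) -> Result<Duration, E> {
    let start = Instant::now();
    request()?;
    Ok(start.elapsed())
}
```

Timing a failed request is deliberately skipped in this sketch, so an unreachable BN yields an error rather than a misleading latency value.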

## Additional Info

Whilst looking at our staking setup, I think the BN+VC latency is contributing to late blocks. Now that we have to wait for the builders to respond it's nice to try and do everything we can to reduce that latency. Having visibility is the first step.

## Issue Addressed

NA

## Proposed Changes

As discovered in #4034, Lighthouse is not accepting `latest_valid_hash == None` in an `INVALID` response to `newPayload`. The `null`/`None` response *was* illegal at one point, however it was added in https://github.com/ethereum/execution-apis/pull/254.

This PR brings Lighthouse in line with the standard and should fix the root cause of what #4034 patched around.

## Additional Info

NA

## Issue Addressed

Cleaner resolution for #4006

## Proposed Changes

We are currently subscribing to core topics of new forks way before the actual fork since we had just a single `CORE_TOPICS` array. This PR separates the core topics for every fork and subscribes to only required topics based on the current fork.

Also adds logic for subscribing to the core topics of a new fork only 2 slots before the fork happens.

2 slots is to give enough time for the gossip meshes to form.
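The timing rule can be sketched as a pure function. `should_subscribe_to_next_fork` and the constant name are hypothetical; only the 2-slot lead time comes from the description above.

```rust
/// Lead time before the fork, taken from the description above.
const SUBSCRIBE_SLOTS_BEFORE_FORK: u64 = 2;

/// Returns `true` once `current_slot` is within the lead time of the first
/// slot of `fork_epoch` (or past it).
fn should_subscribe_to_next_fork(current_slot: u64, fork_epoch: u64, slots_per_epoch: u64) -> bool {
    let fork_slot = fork_epoch.saturating_mul(slots_per_epoch);
    current_slot.saturating_add(SUBSCRIBE_SLOTS_BEFORE_FORK) >= fork_slot
}
```

For example, with 32 slots per epoch and a fork at epoch 10 (slot 320), the function first returns `true` at slot 318.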

Currently doesn't add logic to remove topics from older forks in new forks. For example, in the coupled 4844 world, we had to remove the `BeaconBlock` topic in favour of `BeaconBlocksAndBlobsSidecar` at the 4844 fork. It should be easy enough to add though. Not adding it because I'm assuming that #4019 will get merged before this PR and we won't require any deletion logic. Happy to add it regardless though.

## Proposed Changes

Remove built-in support for Ropsten and Kiln via the `--network` flag. Both testnets are long dead and deprecated.

This shaves about 30MiB off the binary size, from 135MiB to 103MiB (maxperf), or 165MiB to 135MiB (release).

## Issue Addressed

Cleans up all the remnants of 4844 in capella. This makes sure when 4844 is reviewed there is nothing we are missing because it got included here

## Proposed Changes

drop a bomb on every 4844 thing

## Additional Info

Merge process I did (locally) is as follows:

- squash merge to produce one commit

- in new branch off unstable with the squashed commit create a `git revert HEAD` commit

- merge that new branch onto 4844 with `--strategy ours`

- compare local 4844 to remote 4844 and make sure the diff is empty

- enjoy

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

NA

## Proposed Changes

Adds two new `DEBG` logs to the HTTP API:

1. As soon as we are requested to produce a block.

2. As soon as a signed block is received.

In #3858 we added some very helpful logs to the VC so we could see when things are happening with block proposals in the VC. After doing some more debugging, I found that I can tell when the VC is sending a block but I *can't* tell the time that the BN receives it (I can only get the time after the BN has started doing some work with the block). Knowing both when the VC published the block and when the BN received it is useful for determining the delays introduced by network latency (and some other things like JSON decoding, etc).

## Additional Info

NA

## Issue Addressed

NA

## Proposed Changes

Updates our `ef_tests` to use: https://github.com/ethereum/consensus-specs/releases/tag/v1.3.0-rc.3

This required:

- Skipping a `merkle_proof_validity` test (see #4022)

- Account for the `eip4844` tests changing name to `deneb`

- My IDE did some Python linting during this change. It seemed simple and nice so I left it there.

## Additional Info

NA

This fixes issues with certain metrics scrapers, which might error if the content-type is not correctly set.

## Issue Addressed

Fixes https://github.com/sigp/lighthouse/issues/3437

## Proposed Changes

Simply set header: `Content-Type: text/plain` on metrics server response. Seems like the errored branch does this correctly already.

## Additional Info

This is also needed to enable influx-db metric scraping, which works very nicely with Geth.

## Proposed Changes

Allow compiling without MDBX by running:

```bash
CARGO_INSTALL_EXTRA_FLAGS="--no-default-features" make
```

The reasons to do this are several:

- Save compilation time if the slasher won't be used

- Work around compilation errors in slasher backend dependencies (our pinned version of MDBX is currently not compiling on FreeBSD with certain compiler versions).

## Additional Info

When I opened this PR we were using resolver v1 which [doesn't disable default features in dependencies](https://doc.rust-lang.org/cargo/reference/features.html#resolver-version-2-command-line-flags), and `mdbx` is default for the `slasher` crate. Even after the resolver got changed to v2 in #3697 compiling with `--no-default-features` _still_ wasn't turning off the slasher crate's default features, so I added `default-features = false` in all the places we depend on it.

Co-authored-by: Michael Sproul <micsproul@gmail.com>

## Issue Addressed

NA

## Proposed Changes

- Bump versions

## Sepolia Capella Upgrade

This release will enable the Capella fork on Sepolia. We are planning to publish this release on the 23rd of Feb 2023.

Users who can build from source and wish to do pre-release testing can use this branch.

## Additional Info

- [ ] Requires further testing

This is a correction to #3757.

The correction registers a peer that is being disconnected in the local peer manager db to ensure we are tracking the correct state.

## Issue Addressed

N/A

## Proposed Changes

The doppelganger tests were failing silently since the `PROPOSER_BOOST` config was not set. This PR sets the config and makes the script return an error if any subprocess fails.

## Issue Addressed

#3804

## Proposed Changes

- Add `total_balance` to the validator monitor and adjust the number of historical epochs which are cached.

- Allow certain values in the cache to be served out via the HTTP API without requiring a state read.

## Usage

```
curl -X POST "http://localhost:5052/lighthouse/ui/validator_info" -d '{"indices": [0]}' -H "Content-Type: application/json" | jq
```

```
{
  "data": {
    "validators": {
      "0": {
        "info": [
          {
            "epoch": 172981,
            "total_balance": 36566388519
          },
          ...
          {
            "epoch": 172990,
            "total_balance": 36566496513
          }
        ]
      },
      "1": {
        "info": [
          {
            "epoch": 172981,
            "total_balance": 36355797968
          },
          ...
          {
            "epoch": 172990,
            "total_balance": 36355905962
          }
        ]
      }
    }
  }
}
```

## Additional Info

This requires no historical states to operate, which means it will still function on a freshly checkpoint-synced node. However, because of this, the values will only populate one epoch at a time (up to a maximum of 10 entries).

Another benefit of this method is that we can easily cache any other values which would normally require a state read and serve them via the same endpoint. However, we would need to be cautious about not overly increasing block processing time by caching values from complex computations.
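The "up to a maximum of 10 entries" behaviour can be sketched with a bounded queue. `ValidatorHistory`, `EpochInfo`, and `MAX_CACHED_EPOCHS` are hypothetical names for this example; the field names mirror the JSON response shown earlier, but the validator monitor's real data structures are not reproduced here.

```rust
use std::collections::VecDeque;

/// Maximum number of epochs kept per validator (from the description above).
const MAX_CACHED_EPOCHS: usize = 10;

/// One cached entry, mirroring the fields in the API response.
#[derive(Debug, Clone, PartialEq)]
struct EpochInfo {
    epoch: u64,
    total_balance: u64,
}

/// Hypothetical per-validator cache that is filled one epoch at a time.
#[derive(Default)]
struct ValidatorHistory {
    info: VecDeque<EpochInfo>,
}

impl ValidatorHistory {
    /// Records a new epoch, evicting the oldest entry once the cache is full.
    fn record(&mut self, epoch: u64, total_balance: u64) {
        if self.info.len() == MAX_CACHED_EPOCHS {
            self.info.pop_front();
        }
        self.info.push_back(EpochInfo { epoch, total_balance });
    }
}
```

Since entries are only appended as epochs are processed, a freshly synced node starts with an empty cache and reaches the full 10 entries after 10 epochs.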

This also caches some of the validator metrics directly, rather than pulling them from the Prometheus metrics when the API is called. This means when the validator count exceeds the individual monitor threshold, the cached values will still be available.

Co-authored-by: Paul Hauner <paul@paulhauner.com>

* Modify comment to only include 4844

Capella only modifies per epoch processing by adding `process_historical_summaries_update`, which does not change the realization of justification or finality.

Whilst 4844 does not currently modify realization, the spec is not yet final enough to say that it never will.

* Clarify address change verification comment

The verification of the address change doesn't really have anything to do with the current epoch. I think this was just a copy-paste from a function like `verify_exit`.

* Add extra encoding/decoding tests

* Remove TODO

The method LGTM

* Remove `FreeAttestation`

This is an ancient relic, I'm surprised it still existed!

* Add paranoid check for eip4844 code

This is not technically necessary, but I think it's nice to be explicit about EIP4844 consensus code for the time being.

* Reduce big-O complexity of address change pruning

I'm not sure this is *actually* useful, but it might come in handy if we see a ton of address changes at the fork boundary. I know the devops team have been testing with ~100k changes, so maybe this will help in that case.

* Revert "Reduce big-O complexity of address change pruning"

This reverts commit e7d93e6cc7cf1b92dd5a9e1966ce47d4078121eb.

* Remove CapellaReadiness::NotSynced

Some EEs have a habit of flipping between synced/not-synced, which caused some spurious "Not ready for the merge" messages back before the merge. For the merge, if the EE wasn't synced the CE simply wouldn't go through the transition (due to optimistic sync stuff). However, we don't have that hard requirement for Capella; the CE will go through the fork and just wait for the EE to catch up. I think that removing `NotSynced` here will avoid false-positives on the "Not ready" logs. We'll be creating other WARN/ERRO logs if the EE isn't synced, anyway.

* Change some Capella readiness logging

There are two changes here:

1. Shorten the log messages, for readability.

2. Change the hints.

Connecting a Capella-ready LH to a non-Capella-ready EE gives this log:

```
WARN Not ready for Capella info: The execution endpoint does not appear to support the required engine api methods for Capella: Required Methods Unsupported: engine_getPayloadV2 engine_forkchoiceUpdatedV2 engine_newPayloadV2, service: slot_notifier
```

This variant of error doesn't get a "try updating" style hint, when it's the one that needs it. This is because we detect the method-not-found response from the EE and return default capabilities, rather than indicating that the request fails. I think it's fair to say that an EE upgrade is required whenever it doesn't provide the required methods.

I changed the `ExchangeCapabilitiesFailed` message since that can only happen when the EE fails to respond with anything other than success or not-found.