## Issue Addressed

#1590

## Proposed Changes

This is a temporary workaround that prevents finalized chain sync from swapping chains. I'm merging this in now until the full solution is ready.

## Issue Addressed

Malicious users could request very large block ranges, more than we expect. Although technically legal, we are now quadraticaly weighting large step sizes in the filter. Therefore users may request large skips, but not a large number of blocks, to prevent requests forcing us to do long chain lookups.

## Proposed Changes

Weight the step parameter in the RPC filter and prevent any overflows that effect us in the step parameter.

## Additional Info

## Issue Addressed

N/A

## Proposed Changes

Adds extended metrics to get a better idea of what is happening at the gossipsub layer of lighthouse. This provides information about mesh statistics per topics, subscriptions and peer scores.

## Additional Info

## Issue Addressed

#1172

## Proposed Changes

* updates the libp2p dependency

* small adaptions based on changes in libp2p

* report not just valid messages but also invalid and distinguish between `IGNORE`d messages and `REJECT`ed messages

Co-authored-by: Age Manning <Age@AgeManning.com>

The PR:

* Adds the ability to generate a crucial test scenario that isn't possible with `BeaconChainHarness` (i.e. two blocks occupying the same slot; previously forks necessitated skipping slots):

* New testing API: Instead of repeatedly calling add_block(), you generate a sorted `Vec<Slot>` and leave it up to the framework to generate blocks at those slots.

* Jumping backwards to an earlier epoch is a hard error, so that tests necessarily generate blocks in a epoch-by-epoch manner.

* Configures the test logger so that output is printed on the console in case a test fails. The logger also plays well with `--nocapture`, contrary to the existing testing framework

* Rewrites existing fork pruning tests to use the new API

* Adds a tests that triggers finalization at a non epoch boundary slot

* Renamed `BeaconChainYoke` to `BeaconChainTestingRig` because the former has been too confusing

* Fixed multiple tests (e.g. `block_production_different_shuffling_long`, `delete_blocks_and_states`, `shuffling_compatible_simple_fork`) that relied on a weird (and accidental) feature of the old `BeaconChainHarness` that attestations aren't produced for epochs earlier than the current one, thus masking potential bugs in test cases.

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

## Issue Addressed

N/A

## Proposed Changes

Refactor attestation service to send out requests to find peers for subnets as soon as we get attestation duties.

Earlier, we had much more involved logic to send the discovery requests to the discovery service only 6 slots before the attestation slot. Now that discovery is much smarter with grouped queries, the complexity in attestation service can be reduced considerably.

Co-authored-by: Age Manning <Age@AgeManning.com>

## Issue Addressed

#1494

## Proposed Changes

- Give the TaskExecutor the sender side of a channel that a task can clone to request shutting down

- The receiver side of this channel is in environment and now we block until ctrl+c or an internal shutdown signal is received

- The swarm now informs when it has reached 0 listeners

- The network receives this message and requests the shutdown

## Issue Addressed

NA

## Proposed Changes

- Refactors the `BeaconProcessor` to remove some excessive nesting and file bloat

- Sorry about the noise from this, it's all contained in 4d3f8c5 though.

- Adds exits, proposer slashings, attester slashings to the `BeaconProcessor` so we don't get overwhelmed with large amounts of slashings (which happened a few hours ago).

## Additional Info

NA

## Description

This PR improves some logging for the end-user.

It downgrades some warning logs and removes the slots per second sync speed if we are syncing and the speed is 0. This is likely because we are syncing from a finalised checkpoint and the head doesn't change.

## Description

There can be many head chains queued up to complete. Currently we try and process all of these to completion before we consider the node synced.

In a chaotic network, there can be many of these and processing them to completion can be very expensive and slow. This PR removes any non-syncing head chains from the queue, and re-status's the peers. If, after we have synced to head on one chain, there is still a valid head chain to download, it will be re-established once the status has been returned.

This should assist with getting nodes to sync on medalla faster.

## Overview

There are forked chains which get referenced by blocks and attestations on a network. Typically if these chains are very long, we stop looking up the chain and downvote the peer. In extreme circumstances, many peers are on many chains, the chains can be very deep and become time consuming performing lookups.

This PR adds a cache to known failed chain lookups. This prevents us from starting a parent-lookup (or stopping one half way through) if we have attempted the chain lookup in the past.

## Description

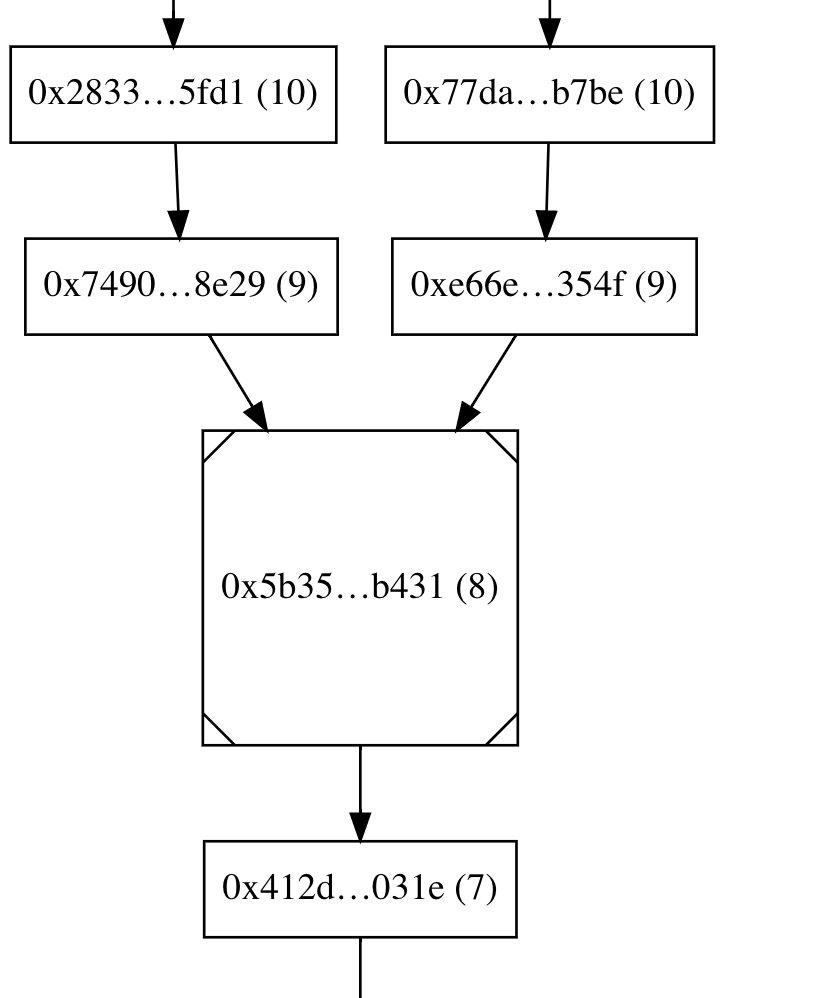

Currently lighthouse load-balances across peers a single finalized chain. The chain is selected via the most peers. Once synced to the latest finalized epoch Lighthouse creates chains amongst its peers and syncs them all in parallel amongst each peer (grouped by their current head block).

This is typically fast and relatively efficient under normal operations. However if the chain has not finalized in a long time, the head chains can grow quite long. Peer's head chains will update every slot as new blocks are added to the head. Syncing all head chains in parallel is a bottleneck and highly inefficient in block duplication leads to RPC timeouts when attempting to handle all new heads chains at once.

This PR limits the parallelism of head syncing chains to 2. We now sync at most two head chains at a time. This allows for the possiblity of sync progressing alongside a peer being slow and holding up one chain via RPC timeouts.

The changes are somewhat simple but should solve two issues:

- When quickly changing between chains once and a second time back again, batchIds would collide and cause havoc.

- If we got an out of range response from a peer, sync would remain in syncing but without advancing

Changes:

- remove the batch id. Identify each batch (inside a chain) by its starting epoch. Target epochs for downloading and processing now advance by EPOCHS_PER_BATCH

- for the same reason, move the "to_be_downloaded_id" to be an epoch

- remove a sneaky line that dropped an out of range batch without downloading it

- bonus: put the chain_id in the log given to the chain. This is why explicitly logging the chain_id is removed

## Proposed Changes

To mitigate the impact of minority forks on RAM and disk usage, this change rejects blocks whose parent lies more than 320 slots (10 epochs, ~1 hour) in the past. The behaviour is configurable via `lighthouse bn --max-skip-slots N`, and can be turned off entirely using `--max-skip-slots none`.

Co-authored-by: Paul Hauner <paul@paulhauner.com>

## Issue Addressed

NA

## Proposed Changes

Moves beacon block processing over to the newly-added `GossipProcessor`. This moves the task off the core executor onto the blocking one.

## Additional Info

- With this PR, gossip blocks are being ignored during sync.

## Issue Addressed

#1384

Only catch, as currently implemented, when dialing the multiaddr nodes, there is no way to ask the peer manager if they are already connected or dialing

## Issue Addressed

N/A

## Proposed Changes

Introduces the `GossipProcessor`, a multi-threaded (multi-tasked?), non-blocking processor for some messages from the network which require verification and import into the `BeaconChain`.

Initial testing indicates that this massively improves system stability by (a) moving block tasks from the normal executor (b) spreading out attestation load.

## Additional Info

TBC

## Issue Addressed

#1028

A bit late, but I think if `BlockError` had a kind (the current `BlockError` minus everything on the variants that comes directly from the block) and the original block, more clones could be removed

## Issue Addressed

Sync was breaking occasionally. The root cause appears to be identify crashing as events we being sent to the protocol after nodes were banned. Have not been able to reproduce sync issues since this update.

## Proposed Changes

Only send messages to sub-behaviour protocols if the peer manager thinks the peer is connected. All other messages are dropped.

## Issue Addressed

Recurring sync loop and invalid batch downloading

## Proposed Changes

Shifts the batches to include the first slot of each epoch. This ensures the finalized is always downloaded once a chain has completed syncing.

Also add in logic to prevent re-dialing disconnected peers. Non-performant peers get disconnected during sync, this prevents re-connection to these during sync.

## Additional Info

N/A

## Issue Addressed

N/A

## Proposed Changes

This provides a number of corrections and improvements to gossipsub. Specifically

- Enables options for greater privacy around the message author

- Provides greater flexibility on message validation

- Prevents unvalidated messages from being gossiped

- Shifts the duplicate cache to a time-based cache inside gossipsub

- Updates the message-id to handle bytes

- Bug fixes related to mesh maintenance and topic subscription. This should improve our attestation inclusion rate.

## Issue Addressed

#1388 partially (eth2_libp2p & network)

## Proposed Changes

TLDR at the end

- *Complex types* are 3 on the handlers/Behaviours but the types are `Poll<ComplexType>` where `ComplexType` comes from the traits of libp2p. Those, I don't thing are worth an alias. A couple more were from using tokio combinators and were removed writing things the async way and using [`BoxFuture`](https://docs.rs/futures/0.3.5/futures/future/type.BoxFuture.html)

- The *cognitive complexity*.. I tried to address those before (they come from the poll functions too) and tbh they are cognitively simpler to understand the way they are now. Moving separate parts to functions doesn't add much since that code is not repeated and they all do early returns. If moved those returns would now need to be wrapped in an Option, probably, and checked to be returned again. I would leave them like that but that's just preference.

- *Too many arguments*: They are not easily put together in a wrapping struct since the parameters don't relate semantically (Ex: fn new with a log, a reference to the chain, a peer, etc) but some may differ.

- *Needless returns* were indeed needless

## Additional Info

TLDR: removed needless return, used BoxFuture and async, left the rest untouched since those lgtm

* Process exits and slashings off the network

* Fix rest_api tests

* Add op verification tests

* Add tests for pruning of slashings in the op pool

* Address Paul's review comments

* Update `milagro_bls` to new release (#1183)

* Update milagro_bls to new release

Signed-off-by: Kirk Baird <baird.k@outlook.com>

* Tidy up fake cryptos

Signed-off-by: Kirk Baird <baird.k@outlook.com>

* move SecretHash to bls and put plaintext back

Signed-off-by: Kirk Baird <baird.k@outlook.com>

* Update v0.12.0 to v0.12.1

* Add compute_subnet_for_attestation

* Replace CommitteeIndex topic with Attestation

* Fix warnings

* Fix attestation service tests

* fmt

* Appease clippy

* return error from validator_subscriptions

* move state out of loop

* Fix early break on error

* Get state from slot clock

* Fix beacon state in attestation tests

* Add failing test for lookahead > 1

* Minor change

* Address some review comments

* Add subnet verification to beacon chain

* Move subnet verification to processor

* Pass committee_count_at_slot to ValidatorDuty and ValidatorSubscription

* Pass subnet id for publishing attestations

* Fix attestation service tests

* Fix more tests

* Fix fork choice test

* Remove unused code

* Remove more unused and expensive code

Co-authored-by: Kirk Baird <baird.k@outlook.com>

Co-authored-by: Michael Sproul <michael@sigmaprime.io>

Co-authored-by: Age Manning <Age@AgeManning.com>

Co-authored-by: Paul Hauner <paul@paulhauner.com>

* Layer do_atomically() abstractions properly

* Reduce allocs and DRY get_key_for_col()

* Parameterize HotColdDB with hot and cold item stores

* -impl Store for MemoryStore

* Replace Store uses with HotColdDB

* Ditch Store trait

* cargo fmt

* Style fix

* Readd missing dep that broke the build